Quote of the week

“A world in which the wealthy are powerful is still a better world than one in which only the already powerful can acquire wealth.”

- Friedrich Hayek

Edition 17 - April 27, 2025

When ChatGPT first came out, there were a handful of businesses which were already using custom-built machine learning models for various use cases. I've taken a dive into the best ways to customize LLMs without building and training an entire new one from scratch.

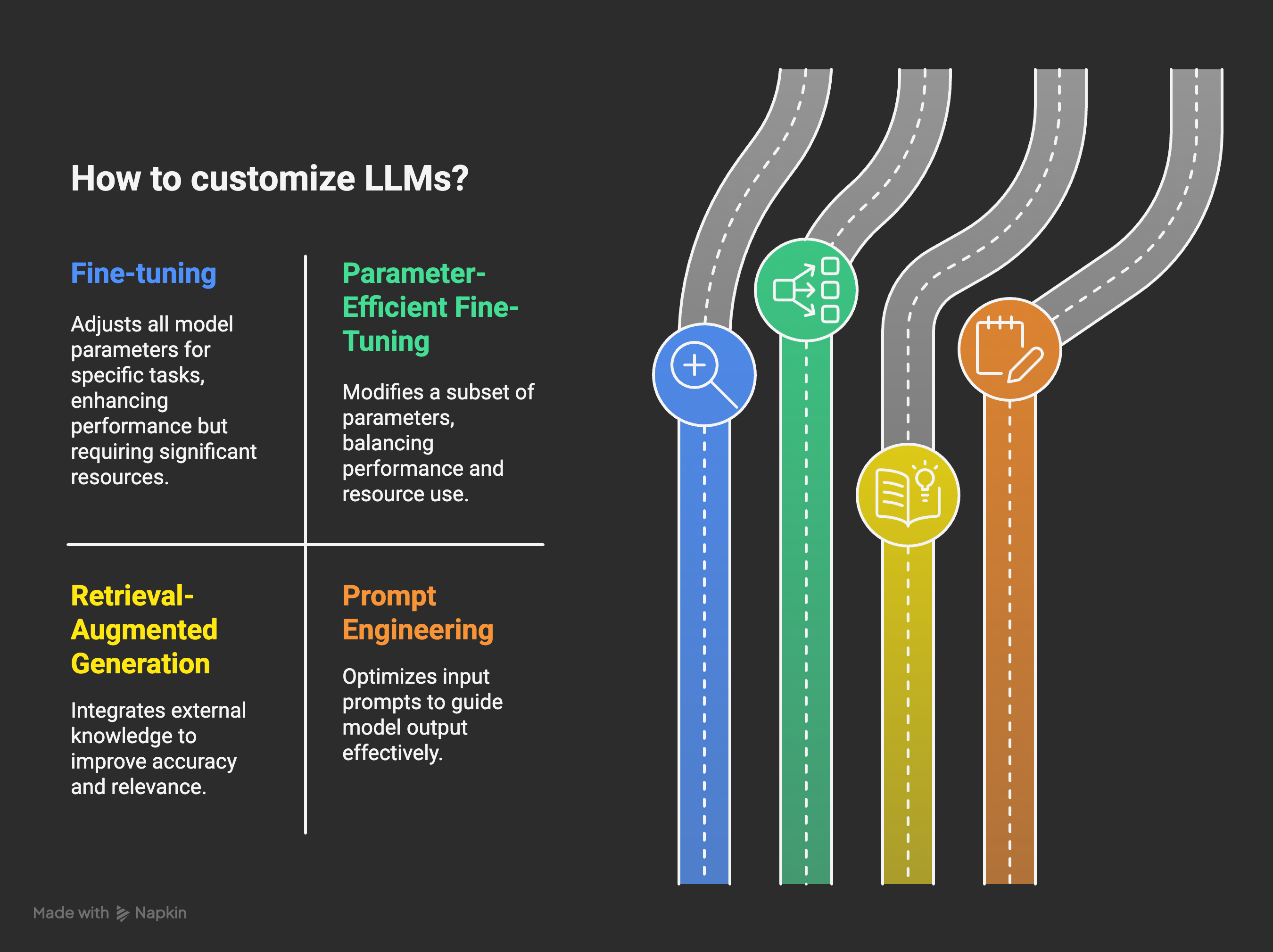

These are the four main ways in order of complexity. Most new AI companies are using prompt engineering for their built-in AI use cases. Many of them use RAG for product support. Few have ventured into the world of fine-tuning, and that is where I believe you can unlock very impressive results.

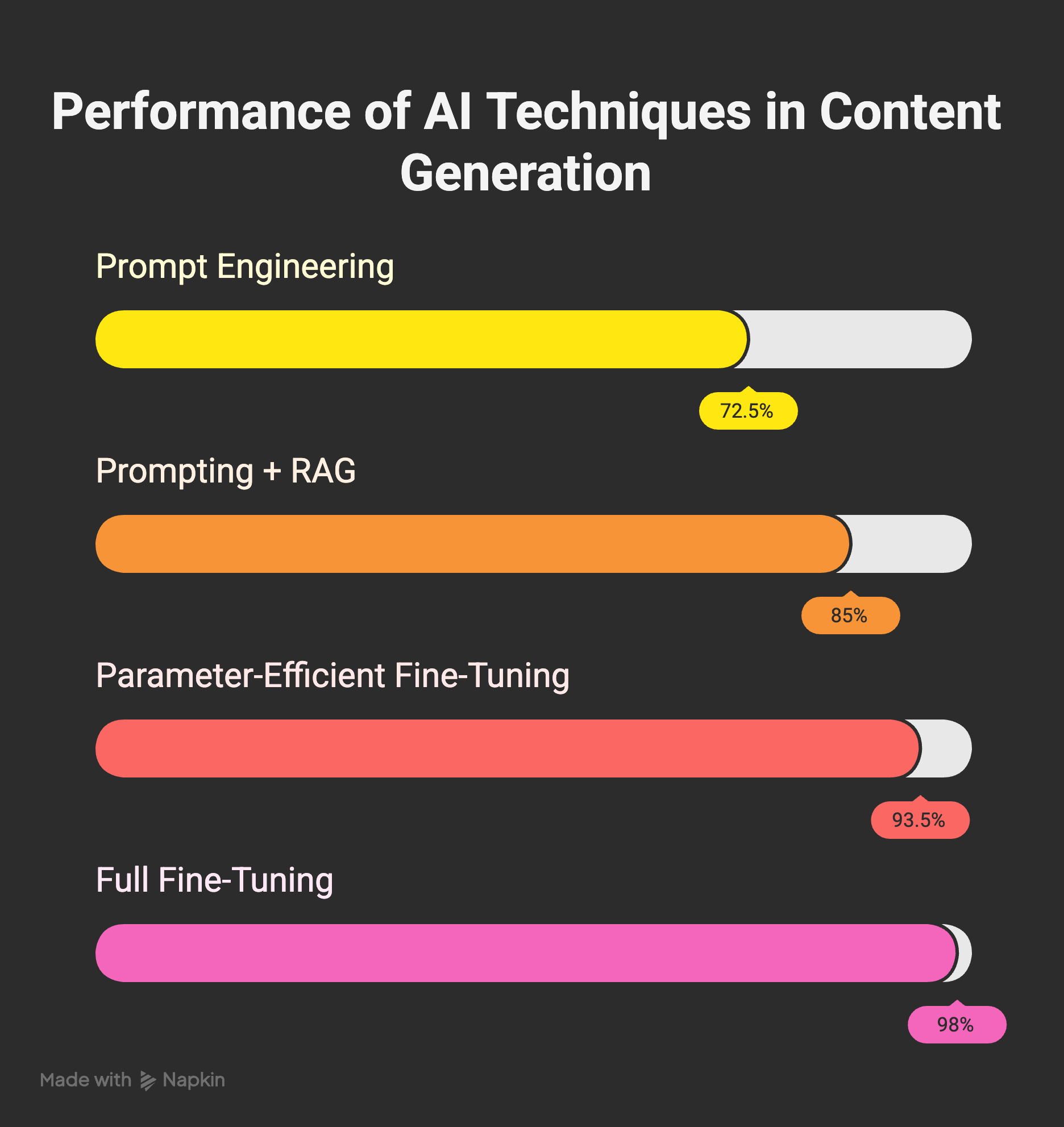

If you use basic prompt engineering, the underlying model is not tuned in any way to be domain-specific. Let's say you're building insurance software and want to generate policy language using an LLM. Prompt engineering may get you ~65-80% of the way there, but you'll need to spend considerable time reviewing the text and tailoring it. RAG can retrieve relevant information and add it to your prompt before the request, then run a review step on the response before finalizing it. This would get you much closer, let's estimate ~80-90% of the way there. Next is parameter-efficient fine-tuning (PEFT), which I would recommend to all enterprises as the ideal. With PEFT, you could get ~90-97%. Lastly, with full fine-tuning, you're looking at ~97-99%+.
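To make the RAG step concrete, here is a minimal sketch of the "retrieve, then add to the prompt" idea. Everything in it is an assumption for illustration: the three policy snippets are made up, the word-overlap scoring is a stand-in for the embedding search a real system would likely use, and no LLM is actually called.

```python
from __future__ import annotations

import re

# Hypothetical knowledge base; in practice these would be your own policy documents.
DOCUMENTS = [
    "Flood damage is excluded unless the policyholder purchases a flood rider.",
    "Claims must be filed within 60 days of the date of loss.",
    "The deductible for wind damage is 2 percent of the insured value.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str], top_k: int = 1) -> list[str]:
    """Rank documents by how many words they share with the question."""
    question_words = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(question_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question: str, documents: list[str]) -> str:
    """Put the retrieved snippets in front of the question before it goes to the model."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents))
    return (
        "Use only the context below to answer.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


prompt = build_prompt("What is the deductible for wind damage?", DOCUMENTS)
print(prompt)
```

The model itself is unchanged here; only the prompt gets richer, which is why RAG sits between prompt engineering and fine-tuning in complexity.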

Parameter-Efficient Fine-Tuning (PEFT) is the best way for enterprises to inject domain expertise into an LLM without the massive costs and risks of full fine-tuning. While prompt engineering and RAG can improve outputs, they still leave models guessing. PEFT actually teaches the model the structure, tone, and patterns of specialized language (like insurance policies), leading to much higher-quality, more consistent, and more compliant outputs. It’s far cheaper and faster than full fine-tuning, drastically reduces the need for human review, and adapts easily to future model upgrades. In short, PEFT delivers near-full-tuning performance at a fraction of the cost, making it the ideal middle ground for serious, high-accuracy enterprise use cases.
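To give a feel for why parameter-efficient fine-tuning is so much cheaper, here is a back-of-the-envelope sketch. It assumes LoRA, one common parameter-efficient method that this newsletter does not specifically name. LoRA freezes a weight matrix and trains two small low-rank factors in its place. The layer size and rank below are hypothetical round numbers, not figures from any particular model.

```python
def full_params(d_out: int, d_in: int) -> int:
    """Weights updated when fully fine-tuning one d_out x d_in matrix."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Weights in the two LoRA factors: B (d_out x rank) and A (rank x d_in)."""
    return rank * (d_out + d_in)


# Hypothetical layer: a 4096 x 4096 projection adapted with rank 8.
d_out, d_in, rank = 4096, 4096, 8

full = full_params(d_out, d_in)
lora = lora_params(d_out, d_in, rank)
fraction = lora / full

print(f"full fine-tuning: {full:,} trainable weights")
print(f"LoRA (rank {rank}): {lora:,} trainable weights")
print(f"fraction trained: {fraction:.2%}")  # 0.39% for these numbers
```

Under these assumptions, well under 1% of the layer's weights are trained. That is where most of the cost savings come from.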

In my last edition I linked to AI 2027 - a scary article running through possible scenarios for the AI future. While it was intriguing to read, I had so many questions that went unanswered. This week, I stumbled across this article.

The author challenges the narratives that depict artificial intelligence (AI) as either a utopian or dystopian force. Instead, the proposal is to view AI as a "normal technology," like electricity or the internet. I largely agree with this and would say that this new commodity is intelligence.

Some additional thoughts:

Few individuals have the power to shape this AI future, which can leave the rest of us afraid and uninformed. But I would caution against pessimism as a mechanism for dealing with this.

In a previous edition I referenced China's discovery of a massive thorium deposit in Inner Mongolia. Well, it turns out that deposit may go into use sooner than expected.

Satellites detected a massive nuclear facility in China, which led researchers at the Chinese Academy of Sciences to reveal a thorium-powered nuclear reactor. Thorium offers a more accessible and less weaponizable alternative to uranium. Additionally, these reactors operate at low pressure, which makes them far less prone to meltdowns or explosions.

Given the vulnerabilities of the current U.S. power grid, this technology could lead to massive improvements and decentralized power generation and distribution, powering the US, well, forever. Molten salt reactors (MSRs) were originally researched in the US during the 1960s, but after public opinion on nuclear energy went south, among other reasons, the US halted the scientific pursuit. That's where China took up the reins.

You can never truly take China at its word, but this seems promising. You can read more about MSRs here. I wonder how much more US-funded science other countries have used to achieve their breakthroughs.

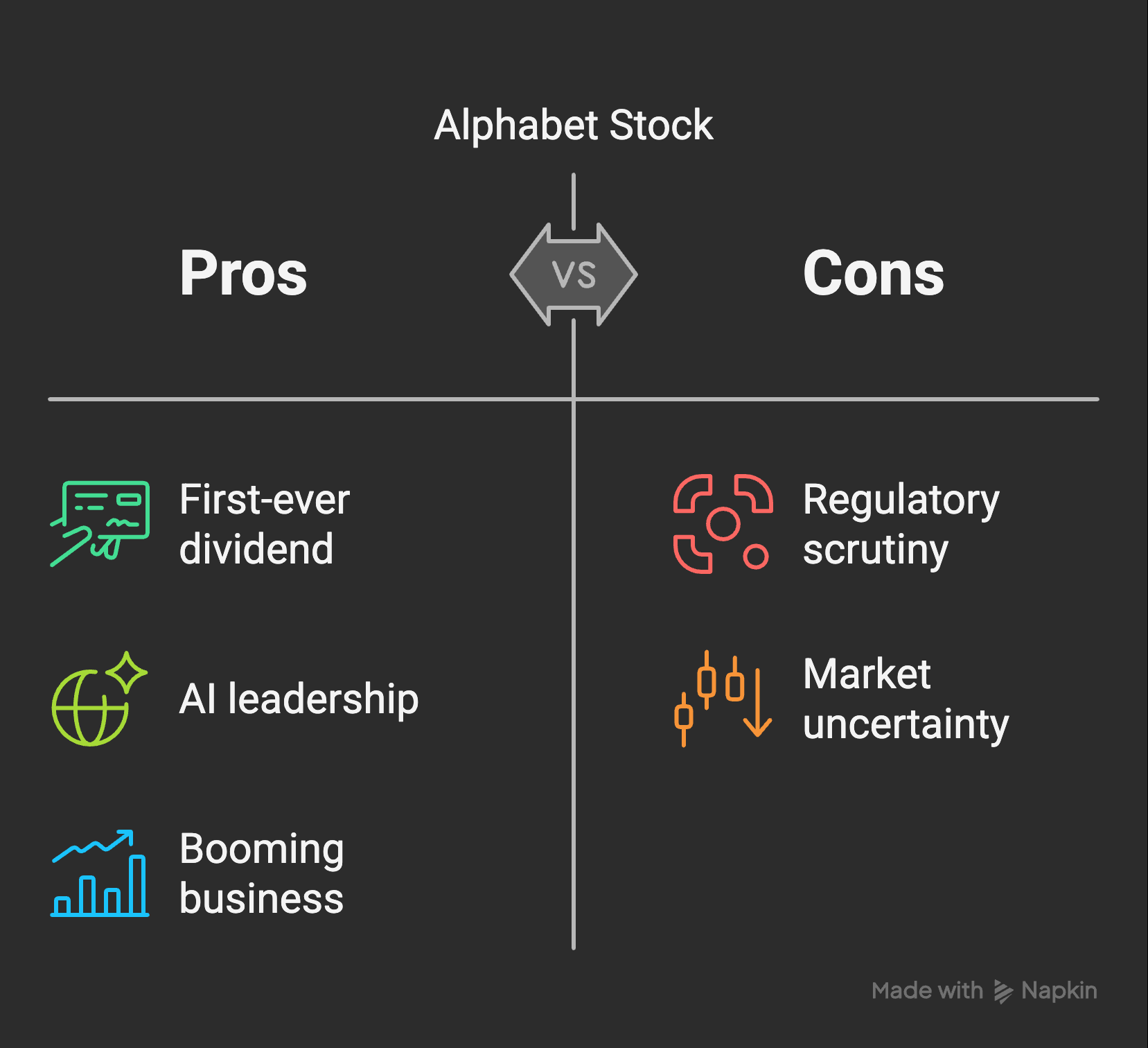

Alphabet, the parent company of Google, initiated its first-ever dividend and has since established a quarterly dividend policy. This is super important to me. I've long advocated against buying any stock in Google, because: what does owning a share of a company mean if you can't participate in the profits?

Now I see absolutely zero reason to stay away. This business is booming and is at the front of the race to AI superiority. It was revealed that they're seeing 35 million daily active users of Gemini. This is in contrast to the 9 million DAUs reported in October of 2024. Google is facing a potential shake-up as the US government is reviewing Chrome and other business units as potential monopolistic entities. There is uncertainty with the company, but I'm buying. This is not financial advice ;).

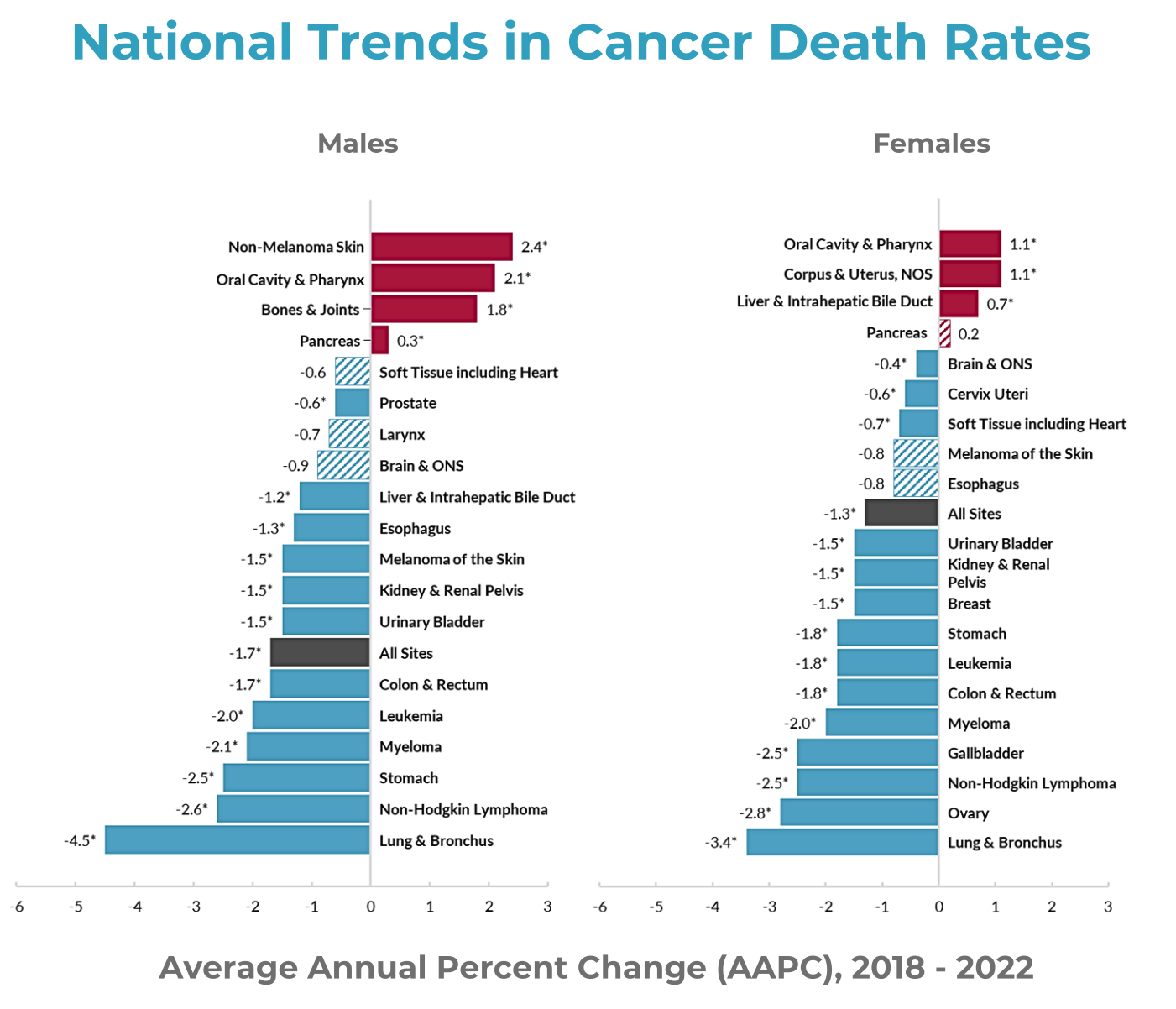

The recently released 2025 Annual Report to the Nation on the Status of Cancer highlights a promising trend: cancer mortality in the U.S. dropped by 1.5% per year from 2018 to 2022, signaling significant progress in the fight against the nation's second-leading cause of death.

Lung cancer saw the most substantial decline, with death rates falling by 4.5% annually in men and 3.4% in women. Meanwhile, childhood cancer mortality continued to decrease at a rate of 1.5% each year. Since 1991, overall cancer death rates have dropped by more than 33%—a success not solely attributed to a reduction in smoking.

Though there are some areas of concern, such as rising uterine cancer mortality, increasing prostate cancer cases, and a troubling uptick in young-onset colorectal cancer, the overall outlook remains overwhelmingly positive. Even during the COVID-19 pandemic, which caused a temporary 8% decline in cancer diagnoses, the decrease in death rates persisted without interruption.

A recent national survey on mobile phone use in schools reveals that nearly all schools in England have implemented bans for students. The survey, which included over 15,000 schools, found that 99.8% of primary schools and 90% of secondary schools now enforce some form of mobile phone restriction.

Continuing with news from England: Cycling in the City of London has risen by 57% since 2022, with bikes now representing 39% of daytime traffic—nearly double the number of cars. Motor vehicle traffic has dropped to just one-third of its 1999 levels, while cycling has increased six-fold. Together, walking, cycling, and wheeling now make up three-quarters of all observed travel activity.

In a recent discussion, Peter Thiel shared valuable insights on what many founders overlook when it comes to differentiation. His approach highlights two crucial aspects: differentiation within your team and how your company stands apart from the competition.

Thiel suggests that in any successful startup, the mission should be clear and unique. Take SpaceX, for example. The company's mission is to go to Mars, and this singular focus shapes the entire team’s perspective: we are the only place in the world working toward this goal. This shared vision creates a strong sense of purpose that sets the company apart from the rest of the world.

But it’s not just about the company's mission — it’s also about internal differentiation. In early-stage startups, roles often blur, and this overlap can lead to conflict. Thiel believes that one of the easiest ways to create friction is by giving two people the same job. Clear, distinct roles reduce misunderstandings and help maintain harmony within the team.

In summary, Thiel emphasizes that the most effective startups are those where the team’s roles are sharply differentiated and the company’s mission is unmistakably unique from others in the industry.

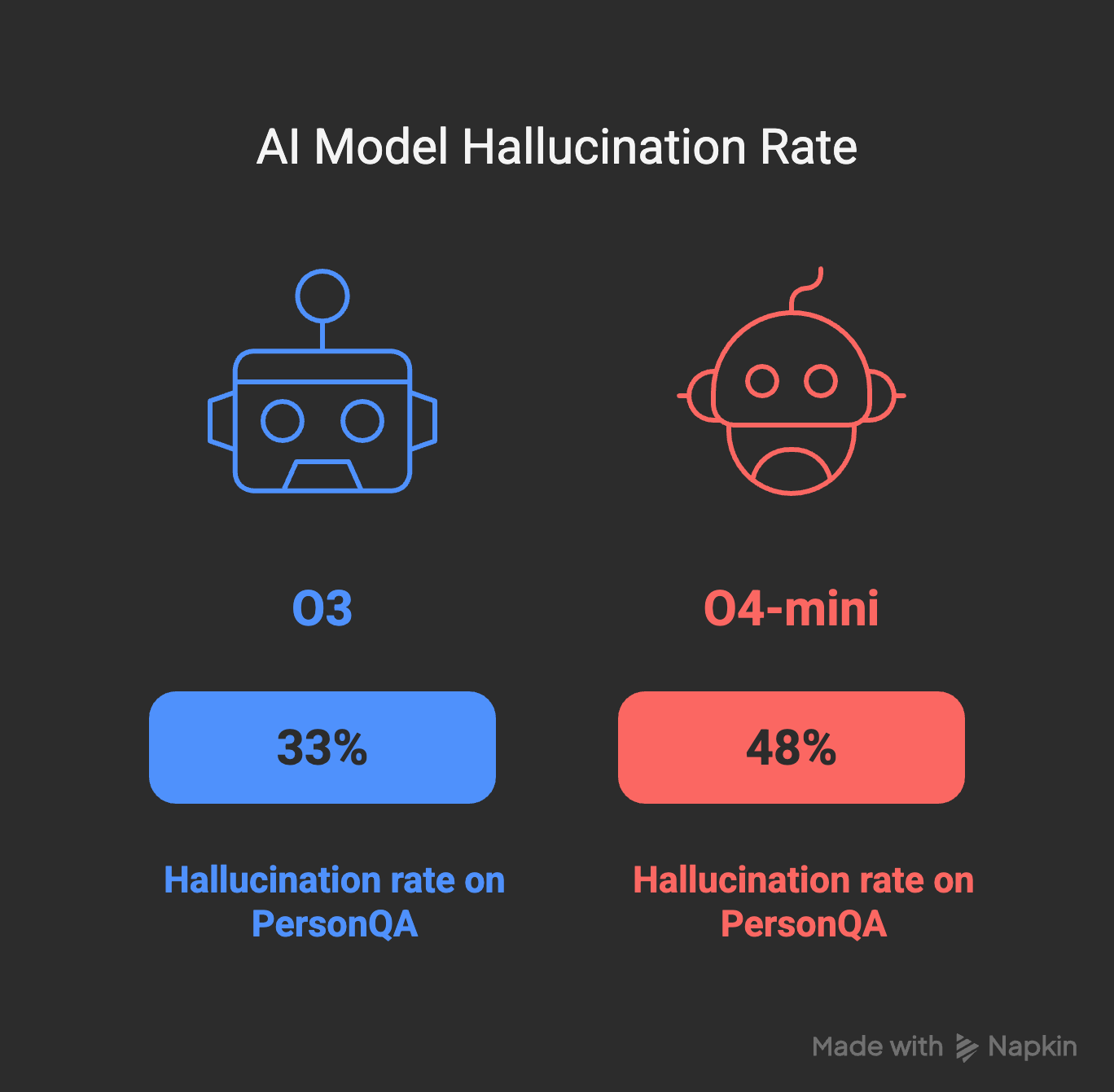

OpenAI's latest reasoning AI models, o3 and o4-mini, have demonstrated significant advancements in capabilities such as coding, math, and web browsing. However, these improvements come with a notable drawback: an increased tendency to "hallucinate" or generate incorrect information.

Internal tests by OpenAI revealed that o3 hallucinated in response to 33% of questions on the company's PersonQA benchmark, a significant rise from the 16% and 14.8% rates observed in previous models o1 and o3-mini, respectively. The o4-mini model exhibited an even higher hallucination rate of 48%. These figures suggest that, despite advancements, the new models are more prone to inaccuracies than their predecessors.
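A quick bit of arithmetic puts those percentages in perspective. The sketch below uses only the four rates quoted above; the choice of o1 as the baseline is mine, for illustration.

```python
# Hallucination rates on PersonQA as quoted in the paragraph above.
rates = {"o1": 0.16, "o3-mini": 0.148, "o3": 0.33, "o4-mini": 0.48}


def relative_to(baseline: str, model: str) -> float:
    """How many times the baseline's rate a given model's rate is."""
    return rates[model] / rates[baseline]


for model in ("o3", "o4-mini"):
    ratio = relative_to("o1", model)
    points = (rates[model] - rates["o1"]) * 100
    print(f"{model}: {ratio:.1f}x the o1 rate, {points:.0f} percentage points higher")
```

In other words, o3 hallucinates roughly twice as often as o1 on this benchmark, and o4-mini roughly three times as often.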

The increased hallucination rates may stem from the models' enhanced capabilities. As they generate more content, the likelihood of producing both accurate and inaccurate information rises. Additionally, third-party testing has indicated that o3 sometimes fabricates actions it claims to have taken, such as running code on a device outside of ChatGPT, which it is not actually capable of doing.

While these models offer impressive features, their reliability issues may limit their suitability for applications requiring high accuracy, such as legal or medical fields. OpenAI acknowledges the need for further research to understand and mitigate these hallucination tendencies.

If you're working with AIs and looking for genuine critical feedback, here’s an unreasonably effective trick: always pretend that your work was created by someone else.

The issue with current-generation AIs is that they’re overly agreeable. These models are trained to be helpful and encouraging, often praising your ideas, writing, and even intelligence. This comes from their design to assist and please users, which can make it hard to get honest, critical feedback. While you might try to prompt the model with something like “give me critical feedback, don’t worry about offending me,” it often still holds back to avoid too much negativity.

A better way to get the feedback you need is to convince the AI that you’re reviewing someone else’s work. For example, you could use a prompt like:

“Please help me review this blog post for typos and argument flow. I didn’t write it—I’m reviewing it for someone else. Feel free to be as critical as needed. Provide your feedback in bullet points.”

By doing this, the model will give you more detailed and honest criticism instead of starting with praise. This approach has helped me significantly, especially with my blog posts. I don’t always adopt the suggested edits but find the model’s criticism (like pointing out abrupt paragraphs or overlooked points) incredibly useful.
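If you use this trick often, it can be wrapped in a small helper. This is only a sketch: the function name and the `focus` parameter are hypothetical, the wording is adapted from the prompt above, and the resulting string still has to be pasted into, or sent to, whichever model you use.

```python
def third_party_review_prompt(text: str, focus: str = "typos and argument flow") -> str:
    """Frame the text as someone else's work so the model critiques it more freely."""
    return (
        f"Please help me review this blog post for {focus}. "
        "I didn't write it; I'm reviewing it for someone else. "
        "Feel free to be as critical as needed. "
        "Provide your feedback in bullet points.\n\n"
        f"---\n{text}\n---"
    )


draft = "AI will change everything. Here is why."  # placeholder draft
prompt = third_party_review_prompt(draft)
print(prompt)
```

Changing `focus` (for example to "weak arguments and missing evidence") steers the review without giving up the third-party framing.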

Recently, OpenAI’s release of a new model, o4-mini, has made the sycophantic behavior even more pronounced, so I continue using this trick to get better, more honest feedback.

I do worry about the social impact of always having a loyal AI assistant, especially if these models are continuously trained to praise their users. But for now, finding ways to get real critical input from AI remains valuable.

Enjoying The Hillsberg Report? Share it with friends who might find it valuable!
