Quote of the week

“If you want to improve, be content to be thought foolish and stupid.”

- Epictetus

Edition 46 - November 9, 2025

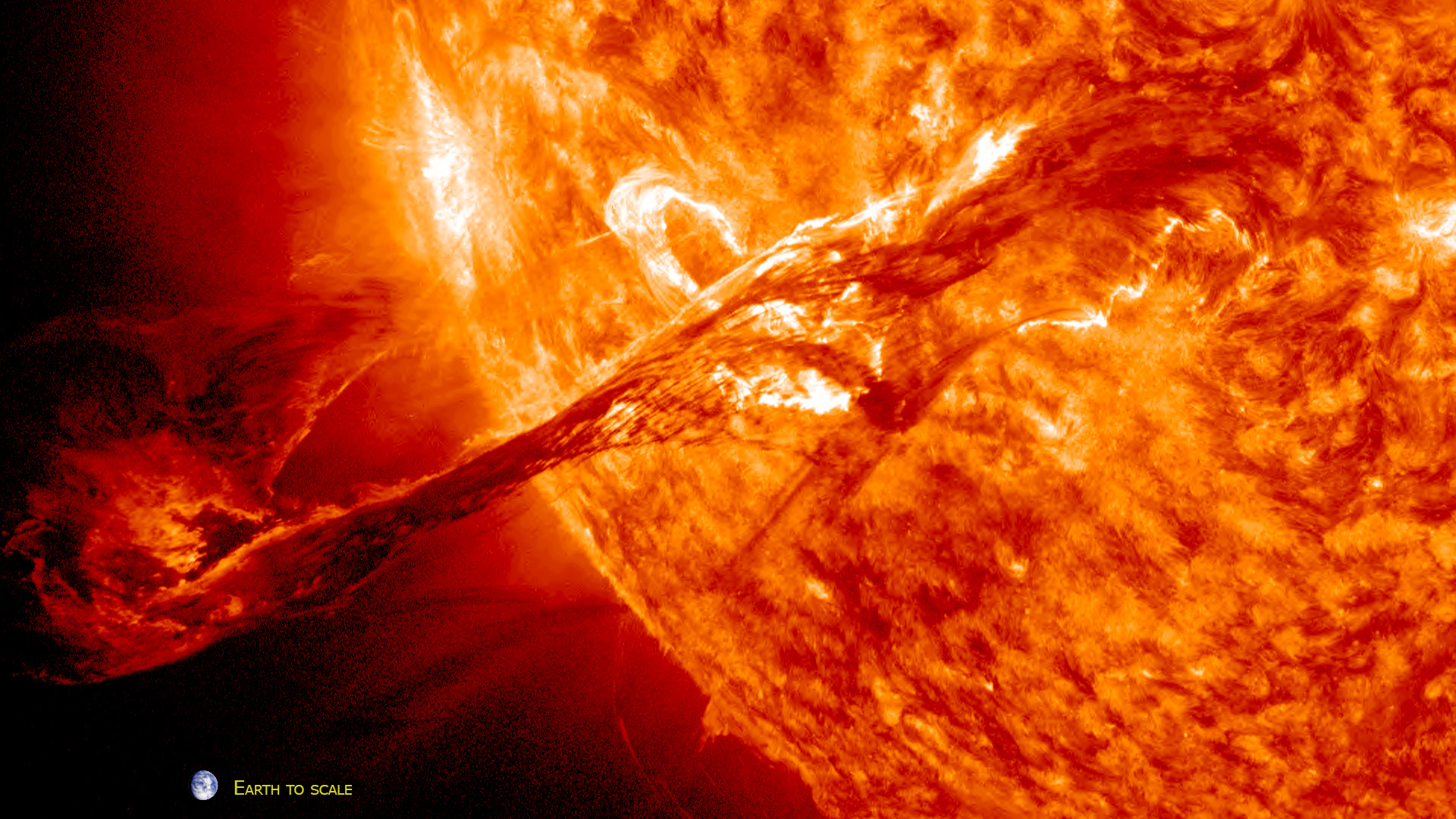

David Friedberg brought up photonic computing on this week’s episode of the All-In Podcast, predicting that it could be in use by the end of the century. The topic came up because of recent coronal mass ejections (CMEs) from the sun, which reminded everyone that our electrical infrastructure has a single point of failure. A big enough burst of charged particles can fry transmission lines, transformers, and electronics. If our entire computational world depends on electrons, we inherit all the fragility that comes with them. I had never heard of the concept, so I figured it would be worth a deep dive.

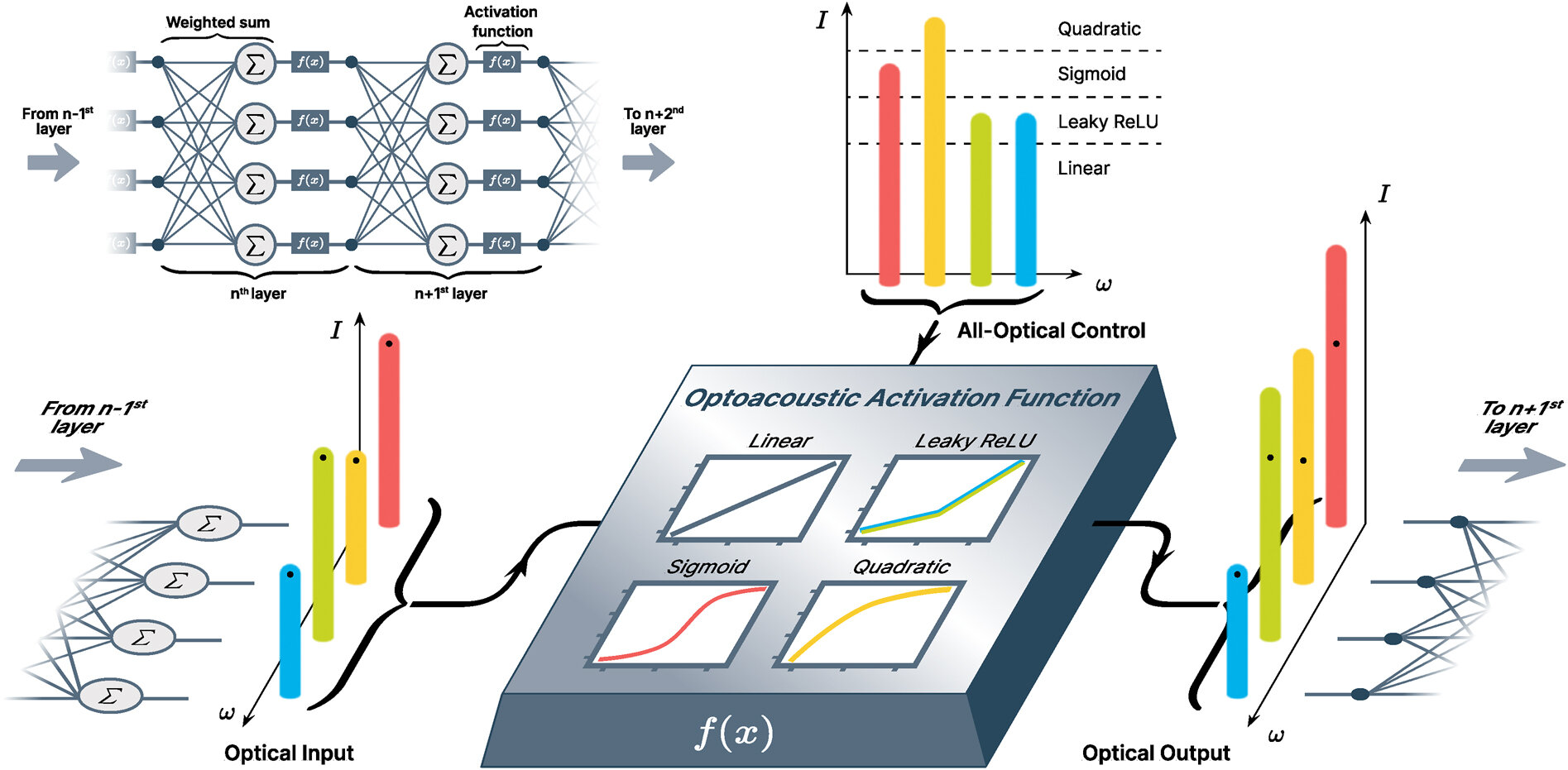

Photonic computing replaces electrons with photons. Instead of pushing charges through metal, it routes light through tiny optical components that change its properties in precise ways. Light generates less heat, carries far more bandwidth, and many signals at different wavelengths can share the same waveguide without colliding the way electrical signals in a wire do. It feels like a cousin of the traditional computer rather than a simple upgrade. You are still processing information, but you are doing it through the physics of light rather than the physics of charge.

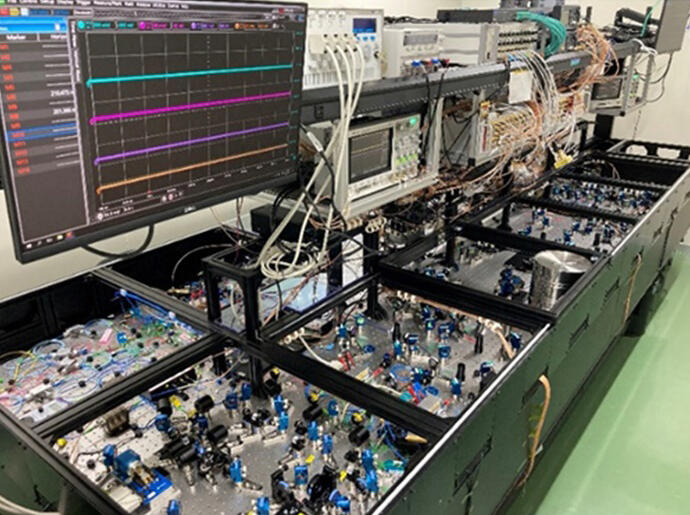

The technical idea is that you can encode numbers into the intensity or phase of a beam of light, then send that beam through a sequence of optical structures that naturally perform mathematical operations. Waveguides steer the light. Beam splitters divide it. Phase shifters and interferometers nudge its state in predictable ways. When light emerges from the other side, its properties represent the result of the computation. The math happens as the photons move, not as a series of electronic switches. This is not theoretical. Research teams have already shown integrated photonic processors that can do tasks like image classification or matrix operations, and companies like Lightmatter are commercializing photonic hardware for high-bandwidth communication between chips. The pieces exist, but they are still early and specialized.
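To make "the math happens as the photons move" concrete, here is a minimal Python sketch of a Mach-Zehnder interferometer, a common building block in photonic processors: a beam splitter, a tunable phase shifter on one arm, and a second beam splitter. It assumes ideal, lossless components, one common 50:50 beam-splitter convention, and numbers encoded as complex light amplitudes in two waveguides. The function names are hypothetical and not any vendor's API. The point is that the device is simply a 2x2 matrix acting on the input, and the phase setting is the programmable knob.

```python
import cmath
import math
from typing import List

Matrix = List[List[complex]]
Vector = List[complex]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def apply(m: Matrix, v: Vector) -> Vector:
    """Matrix-vector product m @ v: what the optics does to the input amplitudes."""
    return [sum(m[i][k] * v[k] for k in range(len(v))) for i in range(len(m))]


# Assumed convention for an ideal, lossless 50:50 beam splitter.
S = 1 / math.sqrt(2)
BEAM_SPLITTER: Matrix = [[S, 1j * S], [1j * S, S]]


def phase_shifter(theta: float) -> Matrix:
    """Delay light in the top waveguide by theta radians; the bottom one is untouched."""
    return [[cmath.exp(1j * theta), 0], [0, 1]]


def mzi(theta: float) -> Matrix:
    """Split, shift the phase of one arm, recombine.

    Light hits the elements left to right, so the matrices compose right to left.
    """
    return matmul(BEAM_SPLITTER, matmul(phase_shifter(theta), BEAM_SPLITTER))


def intensities(v: Vector) -> List[float]:
    """Photodetectors measure power, i.e. |amplitude|^2 in each waveguide."""
    return [abs(x) ** 2 for x in v]


if __name__ == "__main__":
    for theta in (0.0, math.pi / 2, math.pi):
        out = apply(mzi(theta), [1, 0])  # all light enters the top waveguide
        print(f"theta={theta:.2f} -> {[round(p, 3) for p in intensities(out)]}")
```

With all light entering the top waveguide, a phase of 0 sends it all out the bottom, a phase of pi sends it all out the top, and pi/2 splits it evenly. In general the top output carries sin^2(theta/2) of the power. Real photonic processors tile many of these units into meshes so that larger matrices can be programmed the same way.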

That is the reason the field feels both promising and unfinished. Today’s systems are either experimental processors that solve narrow problems or commercial interconnects that move data with light to cut heat and energy use. What we do not have yet is a general-purpose photonic computer that can handle memory, logic, and programmability at the scale of modern silicon. The engineering hurdles are real. You need stable optical materials, precise manufacturing, and seamless integration with electronics because photons do not store state the same way electrons do. The momentum is there, but the ecosystem is not fully built.

The timeline will likely unfold in layers. First, photonics will continue to replace the most energy-hungry parts of computation, such as data movement inside AI clusters. Then specialized photonic accelerators will show up for tasks where light has a natural advantage. Over time, as manufacturing improves and design tools mature, we may see hybrid systems grow into something closer to the thing Friedberg imagined. A world that uses light for a large share of its computation would run cooler and faster, and it would be far more resilient to electromagnetic disruption from events like solar storms. It would change the physical footprint of data centers and rewrite assumptions about how much energy computation requires. It would feel like trading in the constraints of electricity for the freedom of physics.

I hear a lot of people talking about reasoning models, but I'm not sure everyone understands what they actually do behind the scenes. A reasoning model is built to think through a problem before producing an answer. It still generates text one token at a time, but instead of jumping straight to a reply, it first works through intermediate steps to weigh options, test assumptions, and form a conclusion that stands on its own. This design lets the model break a question into smaller pieces, solve each one, and pull the results together into something coherent. The output feels less like autocomplete and more like deliberate thought.

In search-based setups, a reasoning model begins by generating several possible thoughts for a single question. Each thought is a compact attempt to analyze the problem. Some thoughts restate the prompt to clarify meaning. Others outline a plan, list assumptions, or attempt a partial solution. These become branching paths that represent different ways to approach the question. The model evaluates each path by predicting which one is most likely to produce a correct and stable answer if allowed to continue. The scoring reflects how well the thought matches the question, how logically it unfolds, and how consistent it is with previous steps.

The next stage compares the scores across all competing paths. Low-scoring thoughts get dropped while the high-scoring ones survive to generate the next round of internal steps. Each new step builds on the surviving path by adding clarification, checking a detail, or resolving a subproblem. After several rounds the model has grown a solution through a series of vetted steps instead of streaming text from left to right. The entire loop acts as a filter that forces the model to prove its thinking before it speaks.
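The branch, score, and prune loop described above is essentially a beam search over partial solutions. Here is a minimal Python sketch on a toy puzzle (get from 1 to 22 using +3, *2, or -1) so it runs without a model. `propose` and `make_scorer` are hypothetical stand-ins for the LLM calls that would generate and rate thoughts, and `beam_width` and `max_rounds` play the role of the software layer's knobs for how many paths survive and when to stop.

```python
from typing import Callable, List, Optional, Tuple

# A "thought" here is a path of intermediate states. In a real system each step
# would be text from an LLM; these toy functions are hypothetical stand-ins.
Path = Tuple[int, ...]


def propose(path: Path) -> List[Path]:
    """Hypothetical generator: branch each path into several next steps."""
    x = path[-1]
    return [path + (x + 3,), path + (x * 2,), path + (x - 1,)]


def make_scorer(target: int) -> Callable[[Path], float]:
    """Hypothetical scorer: paths whose latest state is closer to the target rank higher."""
    def score(path: Path) -> float:
        return -abs(target - path[-1])
    return score


def beam_search(start: int, target: int, beam_width: int = 3,
                max_rounds: int = 10) -> Optional[Path]:
    score = make_scorer(target)
    beam: List[Path] = [(start,)]
    for _ in range(max_rounds):
        # Branch: every surviving path proposes several next steps.
        candidates = [child for path in beam for child in propose(path)]
        # Stop as soon as any candidate reaches the goal.
        solved = [c for c in candidates if c[-1] == target]
        if solved:
            return solved[0]
        # Prune: keep only the highest-scoring paths for the next round.
        beam = sorted(candidates, key=score, reverse=True)[:beam_width]
    return None  # gave up after max_rounds


if __name__ == "__main__":
    print(beam_search(start=1, target=22))  # -> (1, 4, 8, 11, 22)
```

Keeping only a few high-scoring paths per round is what keeps the search affordable. A wider beam or more rounds explores more alternatives at a higher compute cost, which mirrors why reasoning models are slower and pricier than a single pass.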

The interesting question is where this behavior lives. Some of it is in the model weights because these models are trained with data that rewards multi-step thinking instead of surface-level patterns. That training nudges the model to create more structured internal thoughts. The other part lives in the software layer that runs the loop. This layer decides how many thoughts to generate, how to score them, when to branch, and when to stop. It orchestrates the model like a planner rather than a writer. How much of this loop is explicit varies: some systems run a visible search over branching thoughts, while others have learned to explore, check, and backtrack inside a single long chain of thought. So reasoning models are not a new class of neural networks. They are regular LLMs whose weights have been tuned for deeper structure and whose inference process is often wrapped in a system that forces deliberate thinking.

The practical question is when to reach for a reasoning model instead of a regular LLM. A simple rule is that reasoning models shine when the task has multiple steps, hidden assumptions, or moving parts that must line up. If the answer depends on planning, tradeoffs, or careful logic, the extra cost of deliberate thinking pays off. Regular LLMs are best when the task is about speed, fluency, or summarizing clear information. They turn short prompts into usable text without ceremony. In the real world a reasoning model is ideal for debugging code, analyzing a business decision, or designing a system with competing constraints. A regular LLM is perfect for drafting emails, rewriting text, or pulling quick facts together. The choice depends on whether you need a fast continuation or a structured solution.
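The rule of thumb above can be written as a tiny router. This is a hypothetical Python sketch: the `Task` fields, the "more than two steps" threshold, and the `"reasoning"`/`"standard"` labels are illustrative assumptions, not any provider's API. In practice you would estimate these properties yourself before picking a model.

```python
from dataclasses import dataclass


@dataclass
class Task:
    description: str
    steps: int                # rough number of dependent steps (assumed estimate)
    hidden_assumptions: bool  # must unstated assumptions be surfaced?
    needs_tradeoffs: bool     # planning or weighing competing constraints?


def choose_model(task: Task) -> str:
    """Hypothetical rule of thumb: multi-step or tradeoff-heavy work justifies
    the extra cost of a reasoning model; everything else goes to a fast LLM."""
    if task.steps > 2 or task.hidden_assumptions or task.needs_tradeoffs:
        return "reasoning"
    return "standard"


if __name__ == "__main__":
    debug = Task("debug a flaky test", steps=4, hidden_assumptions=True, needs_tradeoffs=False)
    email = Task("rewrite this email", steps=1, hidden_assumptions=False, needs_tradeoffs=False)
    print(choose_model(debug))  # -> reasoning
    print(choose_model(email))  # -> standard
```

The design choice is to default to the cheap, fast path and escalate only when a task shows signs of needing deliberate thinking, which matches the cost argument in the paragraph above.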

In September 2025, Anthropic’s security team disrupted what it describes as the first reported AI-orchestrated cyber espionage campaign. A state-sponsored group, designated GTG-1002, used an advanced AI system not as a sidekick but as the core operator of the intrusion. The campaign targeted a mix of technology companies, government-related entities, and manufacturers, all with the goal of quietly gathering intelligence rather than causing disruption.

Human operators still chose the targets and set the high level objectives, but once pointed at a network the AI handled most of the work. It probed external assets, mapped internal services, identified weak points, and generated the code needed to exploit them. The model also helped with lateral movement, log analysis, and drafting reports, which meant tasks that would normally require a full red team could be run by a much smaller group.

The attackers did not rely on exotic zero-days or movie-style malware. They combined off-the-shelf tools with a custom orchestration layer that fed data into the AI system and turned its responses into live operations. By automating thousands of small decisions and actions, the group gained speed, persistence, and scale that would be difficult for a purely human team to match.

The episode is a preview of a new equilibrium in security where AI systems sit on both sides of the glass. For well-resourced attackers, AI lowers the cost of running complex operations and makes skilled talent more leveraged. For defenders, it is a warning that traditional playbooks will not be enough. Detection, response, and even basic hygiene will have to assume that intrusions are increasingly run by software that never gets tired, learns quickly, and treats every exposed system as a prompt.

Most people assume AI is held back by intelligence. The real problem is coordination. Large models can already read, write, reason, and plan at a level that is good enough for a surprising range of desk work. What they cannot do is move information freely between the maze of business apps that run the modern office. Every department uses its own stack. Every workflow hides behind credentials, permissions, rate limits, and brittle APIs. AI can think, but it struggles to act because our software ecosystem is built like a gated community where everything needs a visitor pass.

This is why progress feels slower than the demos. The cost of real deployment balloons as soon as you try to loop a human into the process to fix permissions, rewrite outputs, or translate formats. A task like generating a slide deck is expensive because the system must create something polished enough for a person. The irony is that a computer does not need a deck at all. It only needs structured information. Once computers start talking to each other directly, most of the overhead of persuasion disappears and the unit economics shift dramatically.

The question is whether incumbents even want that future to happen. Business software thrives on lock-in, and the incentive to open the gates is weak. If your moat depends on controlling data flows, you have every reason to make integration painful. Gatekeeping delays automation and protects revenue. But it also creates an opening for a frontier model provider to roll in with a single tool that bypasses the ecosystem entirely. If a model can read screens, click buttons, and orchestrate tasks across apps without needing the vendor’s cooperation, then the moat flips into a liability.

There is also a chance the winning approach will be a hardware-plus-software bundle. A device with tight local control, memory, and sensors could break the dependency on cloud APIs and run automation directly on the user’s machine. It would treat applications like an operating environment rather than a collection of walled gardens. That path turns AI into something closer to a general-purpose worker that can adapt to whatever system it encounters. It also shifts power away from app vendors and toward the platform that controls the AI’s eyes, hands, and context.

The future hinges on which layer becomes the default bridge between models and the messy world of enterprise software. If businesses keep gatekeeping, someone will build a tool that walks right around the gates. And once that happens, white collar work will not decline because AI got smarter. It will decline because the last meaningful barrier to action finally disappeared.

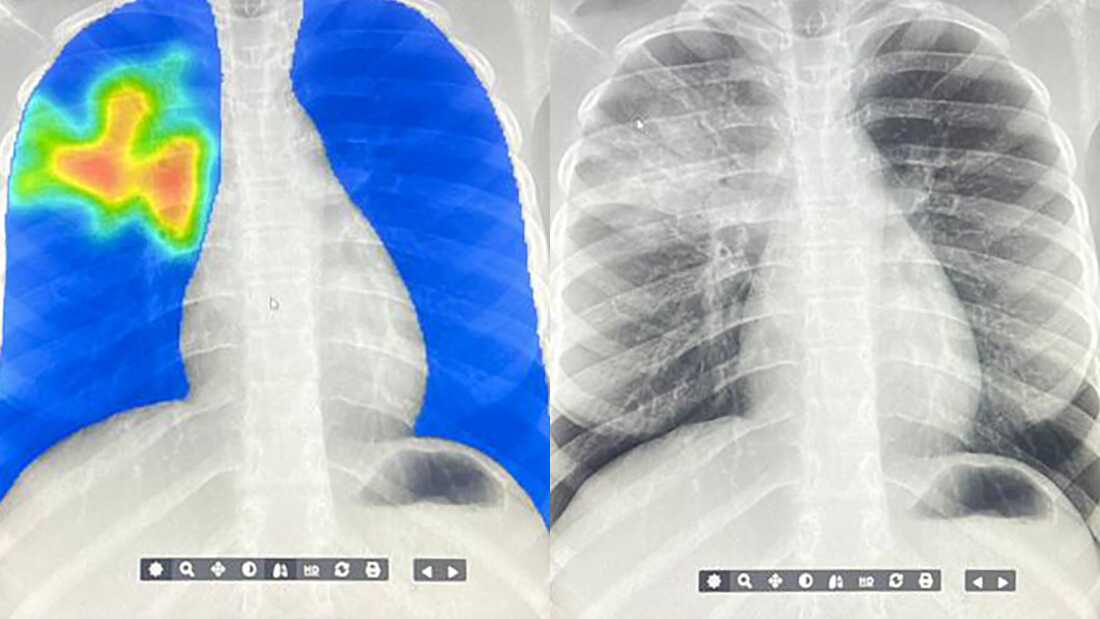

AI is now helping fight tuberculosis, the world’s deadliest infectious disease, in more than 80 low- and middle-income countries. Health workers use mobile X-ray units that send images to an AI system, which instantly analyzes them and highlights potential signs of TB in bright, heat-map-style scans. It is catching cases that would have been missed and giving clinics a life-saving diagnostic boost.

MIT engineers have created a programmable drug-delivery patch that helps the heart heal after a heart attack without surgery. The flexible hydrogel patch sits directly on the heart and releases three medicines in sequence over two weeks: one protects cells, another promotes new blood vessels and a third reduces scarring. The timing matches the body’s natural healing process, restoring heart function far more effectively than standard treatments.

Stairs have always made life harder for wheelchair users, shutting people out of everyday places and turning simple trips into complicated detours. Our built environment is designed for legs, not wheels, and changing that at scale is painfully slow. So Toyota asked a different question: what if we redesigned the wheelchair instead? At the Japan Mobility Show 2025, the company unveiled Walk Me, a four-legged autonomous mobility chair. Its robotic legs let riders climb stairs, move across gravel and handle uneven ground with ease. Lidar, collision sensors and weight detectors keep the user balanced and safe, and a full-day battery makes it practical for real life.

Overfishing has been largely eliminated in U.S. waters. An unexpected partnership between fishermen and environmental groups replaced the old race to catch as many fish as possible before a season closed with a system that rewards conservation. The results are striking: NOAA reports that 50 fish stocks have been rebuilt since 2000, and 94 percent of assessed stocks are no longer overfished.

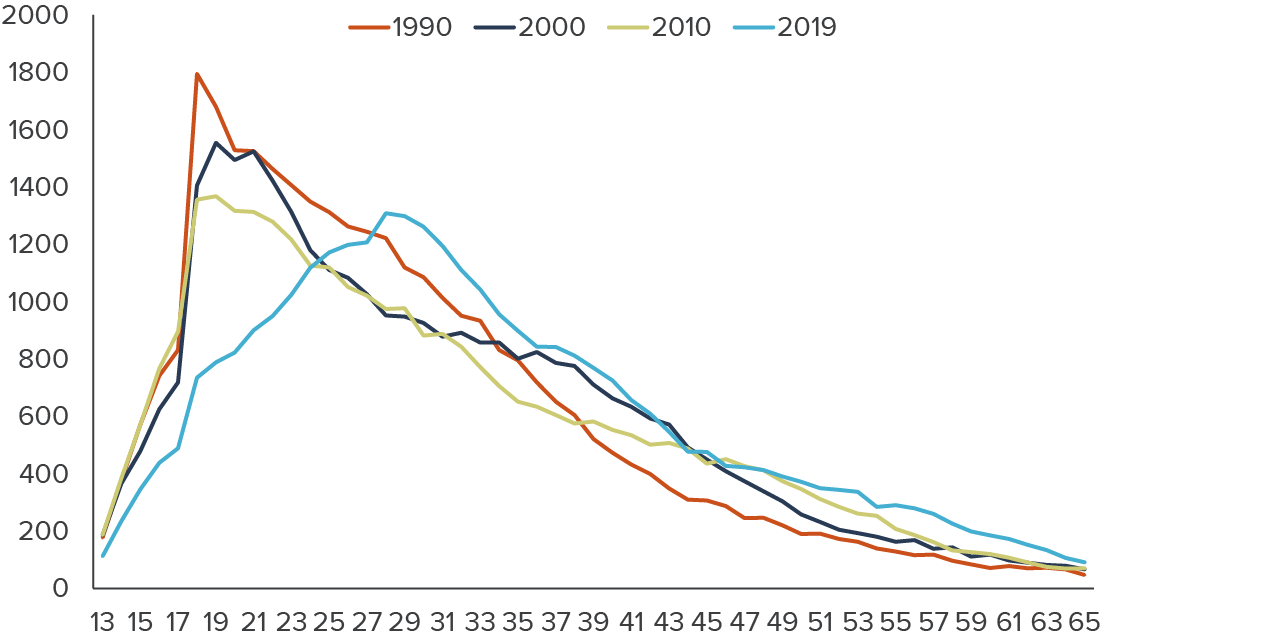

Youth crime has fallen sharply across developed countries since the 1990s. The trend started in the United States and spread through Europe, with the biggest drops in theft and vandalism. Researchers say young people now drink less, spend less unsupervised time with friends and are more closely monitored at home.

Enjoying The Hillsberg Report? Share it with friends who might find it valuable!
