Quote of the week

"A crooked tree lives its own life, but a straight tree is turned into wood."

- Attributed to Zhuangzi

Edition 45 - November 9, 2025

Every company knows this story. A once-nimble startup picks Salesforce because everyone uses it. Slack becomes the nerve center of communication. Workday anchors HR. Years later, these systems feel like concrete poured around the business. The team grumbles, executives sigh, and yet the refrain is always the same: we can’t move, it’s too risky. The cost of switching becomes this mythic monster in the room, growing larger every year it’s avoided.

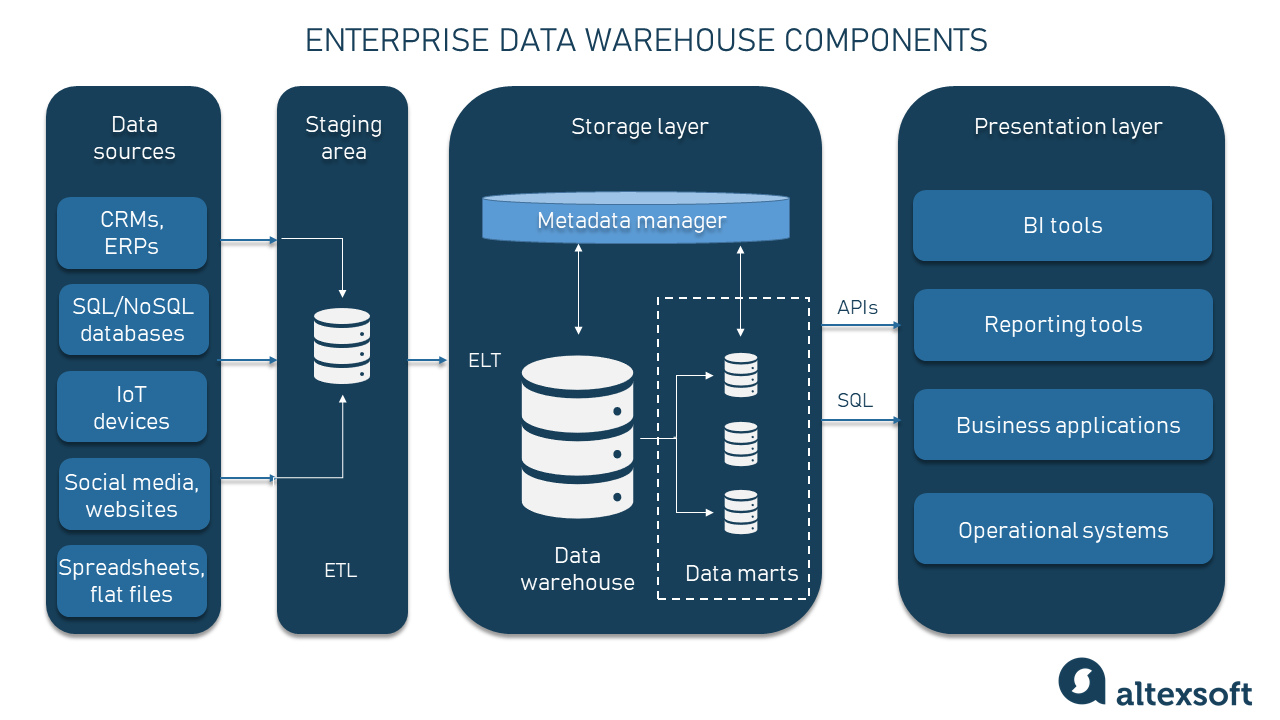

The truth is, most organizations don’t actually know what the real switching cost is. They assume it’s enormous because the system feels critical. But if you peel it apart, you often find that only a fraction of what’s inside is still valuable. The rest is legacy cruft: old fields, forgotten workflows, expired integrations. The first step isn’t replacing anything. It’s auditing what you really use. Start with an inventory: what data is active, who touches it, and what dependencies actually matter? You’ll be surprised how much can be left behind without consequence.
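To make the inventory concrete, here is a minimal sketch of how that audit could be scored. Everything in it is an assumption for illustration: the `FieldUsage` record, the one-year staleness threshold, and the three buckets are hypothetical, not a feature of any particular CRM or HR system.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class FieldUsage:
    """One row of the audit inventory (hypothetical structure)."""
    name: str
    last_used: date               # what data is active
    active_users: int             # who touches it
    downstream_dependencies: int  # what depends on it


def classify(item: FieldUsage, today: date, stale_after_days: int = 365) -> str:
    """Sort an item into 'keep', 'leave behind', or 'review'.

    The one-year threshold is an assumed default, not a rule.
    """
    is_stale = (today - item.last_used) > timedelta(days=stale_after_days)
    in_use = item.active_users > 0 or item.downstream_dependencies > 0

    if not is_stale and in_use:
        return "keep"
    if is_stale and not in_use:
        return "leave behind"
    # Mixed signals, e.g. stale data that something still depends on.
    return "review"


if __name__ == "__main__":
    today = date(2025, 11, 9)
    inventory = [
        FieldUsage("opportunity_stage", date(2025, 10, 30), 40, 3),
        FieldUsage("fax_number", date(2019, 1, 15), 0, 0),
        FieldUsage("legacy_region_code", date(2021, 6, 1), 0, 2),
    ]
    for item in inventory:
        print(f"{item.name}: {classify(item, today)}")
```

The "review" bucket is the interesting one. Those are the items where a person has to decide whether the dependency still matters.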

Once the audit is done, shift to design thinking. If you were building from scratch today, what would your ideal workflow look like? Which tools integrate naturally with your data strategy? This step reframes the problem from “how do we leave the old system?” to “how do we build the next one?” From there, you can map data migrations, create a cutover plan, and simulate what life looks like post-switch. The goal isn’t perfection. It’s clarity about tradeoffs and confidence in sequencing.

The decision to switch should be rational, not emotional. Compare the true operational costs (time wasted, data trapped, complexity tolerated) to the projected cost of migration. Factor in the opportunity cost of staying still. In many cases, the switch pays for itself within a year simply by removing friction that’s become invisible. But sometimes, you’ll find the existing system still fits. The process of evaluating it is what restores control, regardless of the outcome. One more thing: these legacy systems generally come with administrators and specialists, either in-house or consultants. Fire the consultants when you can, but see if you can re-skill the in-house talent to help with your planning, then transition them into AI-powered workflow builders for the organization.
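The comparison above is simple arithmetic, and writing it down helps keep the decision rational. Here is a rough sketch of a payback calculation. The function names and every dollar figure are hypothetical placeholders, so plug in your own numbers.

```python
from typing import Optional


def annual_cost_of_staying(license_cost: float,
                           hours_lost_per_week: float,
                           hourly_rate: float,
                           opportunity_cost: float = 0.0) -> float:
    """Yearly cost of the current system: license + wasted time + opportunity cost."""
    wasted_time_cost = hours_lost_per_week * 52 * hourly_rate
    return license_cost + wasted_time_cost + opportunity_cost


def payback_months(migration_cost: float,
                   annual_staying_cost: float,
                   annual_new_cost: float) -> Optional[float]:
    """Months until the one-time migration cost is recovered by yearly savings.

    Returns None when the new system would not save money,
    which is the 'existing system still fits' outcome.
    """
    annual_savings = annual_staying_cost - annual_new_cost
    if annual_savings <= 0:
        return None
    return 12 * migration_cost / annual_savings


if __name__ == "__main__":
    # All figures below are made up for illustration.
    staying = annual_cost_of_staying(
        license_cost=120_000,
        hours_lost_per_week=40,
        hourly_rate=75,
        opportunity_cost=50_000,
    )
    months = payback_months(
        migration_cost=160_000,
        annual_staying_cost=staying,
        annual_new_cost=150_000,
    )
    print(f"Annual cost of staying: ${staying:,.0f}")
    print("No payback" if months is None else f"Payback in {months:.1f} months")
```

With these made-up inputs the migration pays for itself in just under 11 months. The harder part is being honest about the hours lost each week, since that friction is exactly what has become invisible.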

In the new AI-driven world, flexibility matters more than brand names. The companies that win will have clean data architectures, modular systems, and the freedom to plug in new intelligence wherever it adds value. Legacy tools were not built for that. Staying on them out of fear is no longer just an IT decision, it’s a strategic risk. The real high cost isn’t switching. It’s staying stuck. I cannot reiterate this enough - in the new world of AI, data architecture and flexibility are 100x more important than ever before.

Apple made headlines this week after reports surfaced that it plans to use Google’s massive Gemini AI model to power a new version of Siri. According to multiple outlets, Apple will license a customized 1.2-trillion-parameter version of Gemini, hosted on its own Private Cloud Compute infrastructure. The deal is reportedly worth about 1 billion dollars a year and would allow Apple to rapidly improve Siri’s conversational and generative capabilities without waiting for its in-house models to mature. Apple is said to be preparing a public launch for the new Siri early next year alongside updates to Apple Intelligence.

The move marks a significant shift for Apple, a company known for keeping its core technology in-house until recent years, when it brought OpenAI’s ChatGPT into Siri. Siri has struggled for years to keep pace with voice assistants from Google, Amazon, and OpenAI. Leaning on Google’s Gemini could help close that gap, but it also signals that Apple may be less rigid about outsourcing AI than many assumed. The company seems to be betting that control of the user experience is more valuable than owning every part of the AI stack.

The first question is whether this setup can actually be secure if it is not fully on-device. From a technical perspective, the answer depends on where and how data flows. Apple says the new Siri will use a secure cloud, but that phrase hides the reality that every cloud-based interaction introduces at least two additional points of potential failure. The first is the network itself. Even with end-to-end encryption, data in motion exposes metadata such as timing, size, and routing, which can sometimes be correlated to infer what a user is doing. The second is the cloud layer where inference happens. That environment must be isolated per user, protected from model inversion or side-channel attacks, and subject to strict deletion policies. Apple’s Private Cloud Compute design attempts to minimize exposure by using dedicated Apple hardware and verified runtime code, but this still relies on an external model that was trained and maintained by Google. The company must therefore trust not only its own cloud security but also that the model itself contains no embedded vulnerabilities or unintended data retention. This layered trust model is harder to verify and increases systemic risk. Even if managed well, it is not equivalent to the simplicity of on-device processing. Which leads to the next question everyone is asking.

The optimistic case says Apple could look brilliant for not going all in on generative AI. By licensing Gemini instead of rushing its own unproven large model, Apple buys time and flexibility. It gains immediate access to best-in-class capabilities while it continues refining its own models, and it can selectively adopt cloud inference for complex queries while keeping lightweight tasks on-device. That hybrid approach could make Apple look pragmatic rather than behind. The company can focus on what it does best, tight integration between hardware, software, and privacy messaging, while letting Google’s infrastructure handle the heavy lifting for now. If users experience a noticeably smarter Siri that still feels private and reliable, Apple will have executed a familiar playbook: wait for the market to shake out, then enter with a version that feels safer and more polished. Apple has been sitting on a stockpile of cash. Will it look brilliant for not burning that cash on the frontier model race?

The bearish view is that this is less genius and more concession. Relying on Google for AI puts Apple in a position it has tried to avoid for decades: depending on a direct competitor for core technology. If the Gemini-powered Siri becomes Apple’s main differentiator and the company fails to build its own comparable model, it could find itself permanently renting innovation. The decision may also blur Apple’s privacy narrative, which has long been anchored in local processing. Even a secure cloud story might not reassure users who expect AI features to stay on their devices. And the economics are risky. A billion-dollar annual fee is sustainable for Apple now, but it scales poorly if usage grows faster than revenue. If Gemini ends up feeling like a stopgap rather than a strategy, history may remember this as the moment Apple ceded leadership in AI rather than reclaimed it.

In this article, Sean Goedecke looks at several theories for the LLM em dash habit and discards most of them. The token efficiency argument is weak, optionality during next-token prediction is not persuasive, and RLHF dialect effects do not line up with measured punctuation rates. What did change is the training mix between 2022 and 2024 as labs leaned on digitized print books, especially late 1800s and early 1900s texts. Those eras used more em dashes, with estimates around a third higher than modern prose, so models picked up the habit and kept it.
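If you want to check a punctuation rate on your own drafts, here is a quick sketch. It is not the method from the article, just one simple way to count em dashes per thousand words. The function name and the rough word-splitting rule are my own assumptions.

```python
import re

EM_DASH = "\u2014"  # the long dash, written as an escape so it stays unambiguous


def em_dashes_per_thousand_words(text: str) -> float:
    """Rough em dash rate: dashes per 1,000 words.

    Words are counted as runs of letters, digits, or underscores,
    so contractions count as two words. Good enough for comparing drafts.
    """
    words = re.findall(r"\w+", text)
    if not words:
        return 0.0
    return 1000 * text.count(EM_DASH) / len(words)


if __name__ == "__main__":
    draft = "The tool is fast" + EM_DASH + "really fast" + EM_DASH + "and cheap."
    print(f"{em_dashes_per_thousand_words(draft):.1f} em dashes per 1,000 words")
```

Run it on an older piece you wrote by hand and on a fresh LLM draft, and the difference tends to be obvious.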

I never liked the tight format and large bar “word—word.” I prefer “word - word,” the smaller dash with spaces on both sides. After I started using an LLM to draft articles for The Hillsberg Report, the dashes multiplied and I got so mad at ChatGPT. Entire drafts came back saturated with them, even when the rest of the tone felt right. Below are my custom GPT instructions for writing these articles, which are tuned to keep punctuation simple and the cadence steady:

You are a tool to help create articles for my newsletter, The Hillsberg Report.

You have 2 goals:

1) Help me create, edit, and finalize each article for my newsletter. Start in plain text.

2) Convert the finalized newsletter article into my specific HTML format. Always output the HTML as a code block when it is time.

Here is an example of my specific HTML format:

[I PUT THE HTML FORMAT EXAMPLE HERE IN FULL]

***Writing style: Clear, direct, and analytical. It explains complex systems in plain language without hype. Favor a mix of short, medium, and long sentences to create rhythm and flow. Tone is confident but conversational, often linking specific events to bigger patterns. Avoid em dashes, prefer clean transitions, and end paragraphs with crisp insights. Themes center on technology, incentives, and optimism grounded in pragmatism, written with a balance of intellect and humanity. Anyone can read and understand the content, from engineers to high schoolers.

***Don't use em dashes or any dashes in general unless necessary. There are no headers in my articles, just a title and paragraphs.

Trigger Warning: This challenges some common beliefs about fairness and wealth.

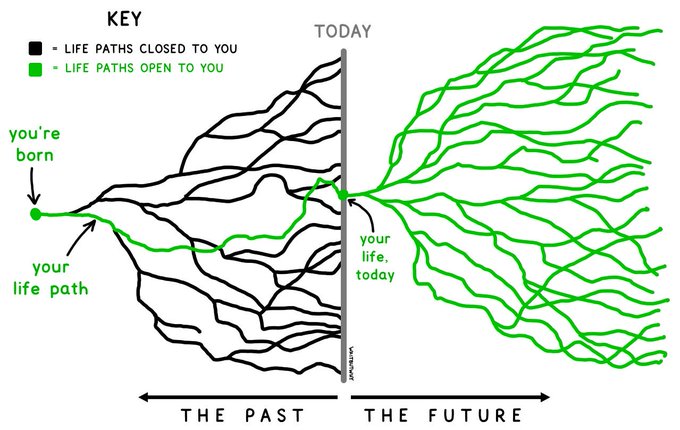

A zero-sum world is one where every gain comes at someone else’s expense, a closed system of winners and losers. It’s the logic of poker and politics, not of progress. Yet this mindset persists because it flatters resentment - it gives people someone to blame for their frustration. The truth is that the economy isn’t a fixed pie being sliced thinner with every new success story, millionaire, or billionaire. It is a growing network of creation, where collaboration and ingenuity expand the total value available to everyone. Innovation, trade, and specialization all operate on this principle of positive-sum growth. When people build new tools, services, or technologies, they unlock value that didn’t exist before. Civilization itself is proof that human progress compounds when we move beyond zero-sum thinking. It's important to understand that even in a world with a fixed supply of dollars, the productive capacity behind each dollar can grow. The value (or purchasing power) we get from each dollar is not set in stone. I fear many people either don't understand this or forget it all too often. In the early days of Macintosh, some of the machines were priced over $1,000. Today our phones are at least 50,000x more powerful, for the same price.

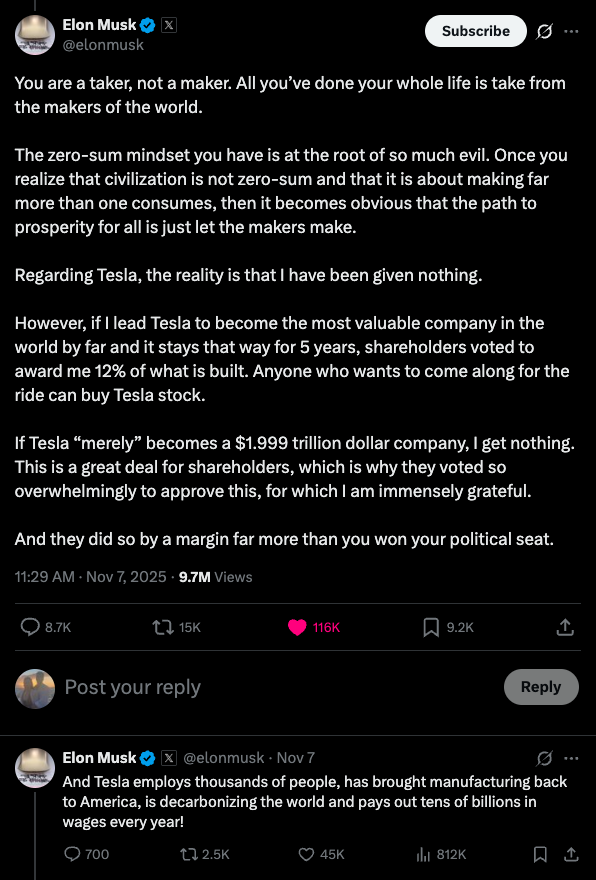

Elon Musk’s recent post on X captured the tension around this issue in the current political climate with unusual clarity. Responding to a senator who accused him of “becoming a trillionaire off the backs of taxpayers,” Musk replied, “You are a taker, not a maker. The zero-sum mindset you have is at the root of so much evil.” You can read the full post here. Musk’s argument was not just about defending his compensation but about exposing a deeper economic truth: value creation is not theft. His Tesla pay structure - zero reward unless the company reaches extraordinary milestones, and even then paid entirely in stock (not cash) - embodies the principle that wealth should follow performance, not precede it. He earns only if everyone else wins first: shareholders, employees, customers, and the broader energy ecosystem. It’s a model of incentives that rewards long-term creation rather than short-term extraction, and it demonstrates how growth aligned with contribution can be both fair and transformative.

The danger of the zero-sum mindset is that it breeds stagnation under the banner of equality. This generally emerges through socialist or communist pursuits. When systems cap upside potential in the name of fairness, they erode the motivation to innovate, invest, and take risks. People optimize for safety instead of exploration. The result is not equality of prosperity but equality of limitation. This is the quiet failure of many redistributive models that prize uniform outcomes over productive incentives. The irony is that when you remove the rewards for creation, you eventually shrink the pool of resources available to distribute. True fairness comes not from suppressing success but from building environments where success is possible for more people. The world’s wealthiest societies are not those that capped ambition; they are the ones that unleashed it. Civilization flourishes when people are encouraged to make more than they take, and when the system rewards creation instead of punishing it.

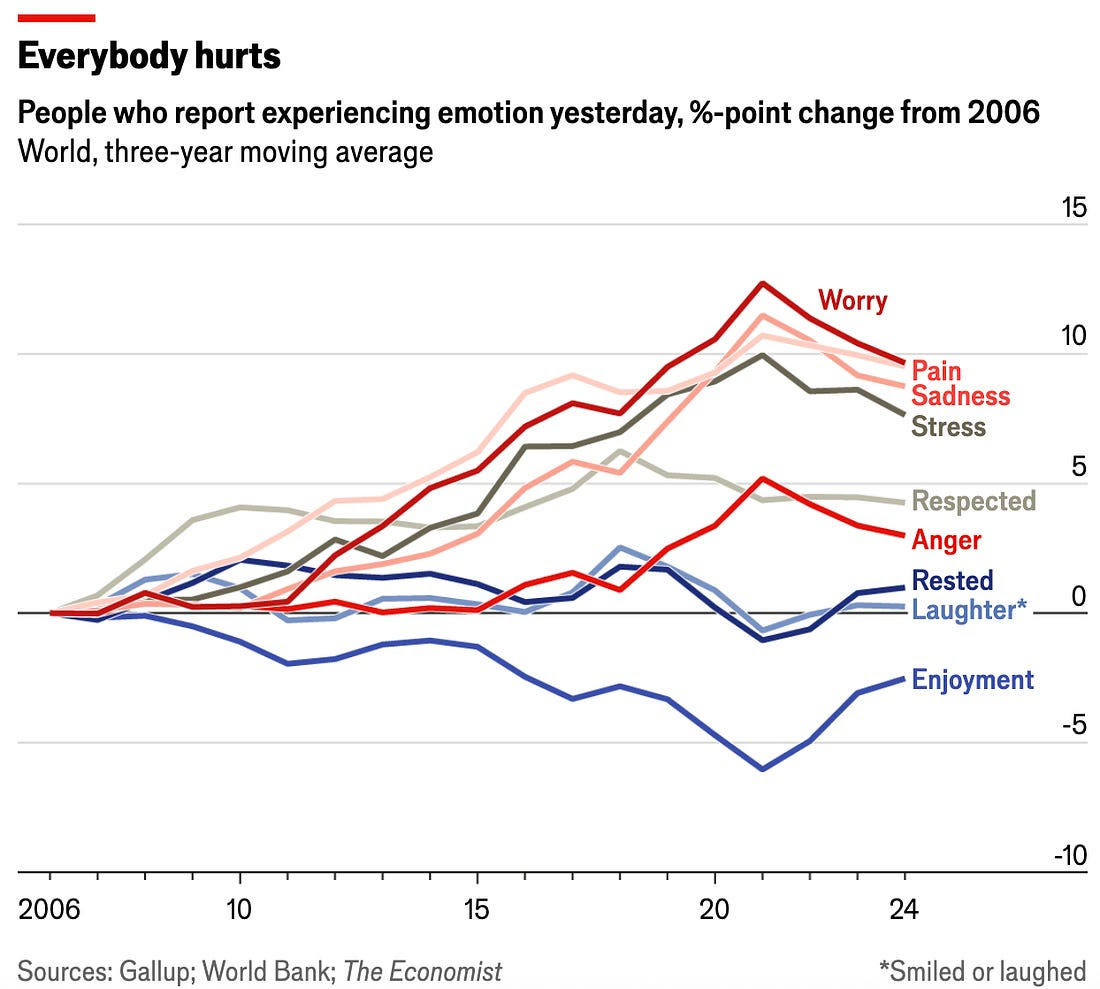

Gallup’s latest global emotional health survey shows the world quietly shaking off its grumpiness, with worry and stress dropping to their lowest levels since 2019 as just 39% of people felt worried and 37% felt stressed the previous day. Nearly nine in ten said they felt respected, and more than 70% smiled or laughed. Denmark leads the happiness pack, while countries facing conflict and instability like Chad, Sierra Leone and Iraq remain the least content.

Scientists have tapped AI to design an enzyme that can gobble up one of the toughest plastics around: the polyurethane found in foam mattresses and sneakers. In just 12 hours at 50°C, it breaks the material down into reusable chemicals, turning old foam back into its raw ingredients and making true circular recycling feel suddenly real. And yes, the wild part is still the same: the enzyme itself was created by artificial intelligence.

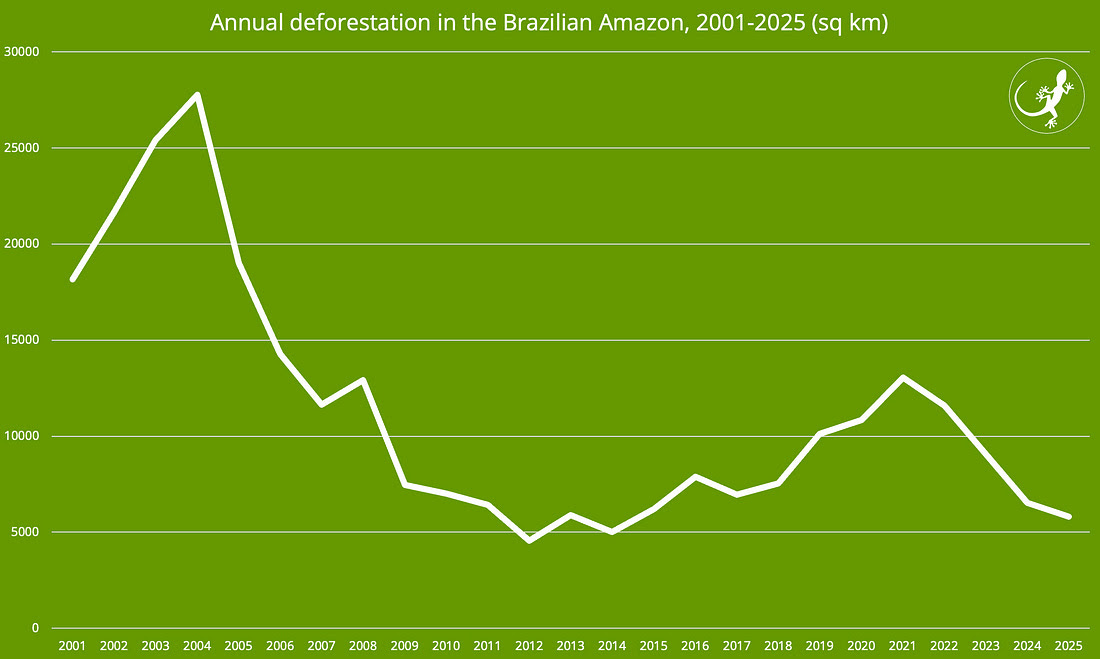

Amazon deforestation has dropped to its lowest point in almost a decade, and fire damage is down 45% after last year’s brutal drought. Brazil’s Cerrado, the sprawling savanna next door, has also seen deforestation fall to a six-year low. Put together, those sharp declines slashed Brazil’s greenhouse gas emissions by 16.7% in 2024, the biggest annual drop the country has logged in more than ten years.

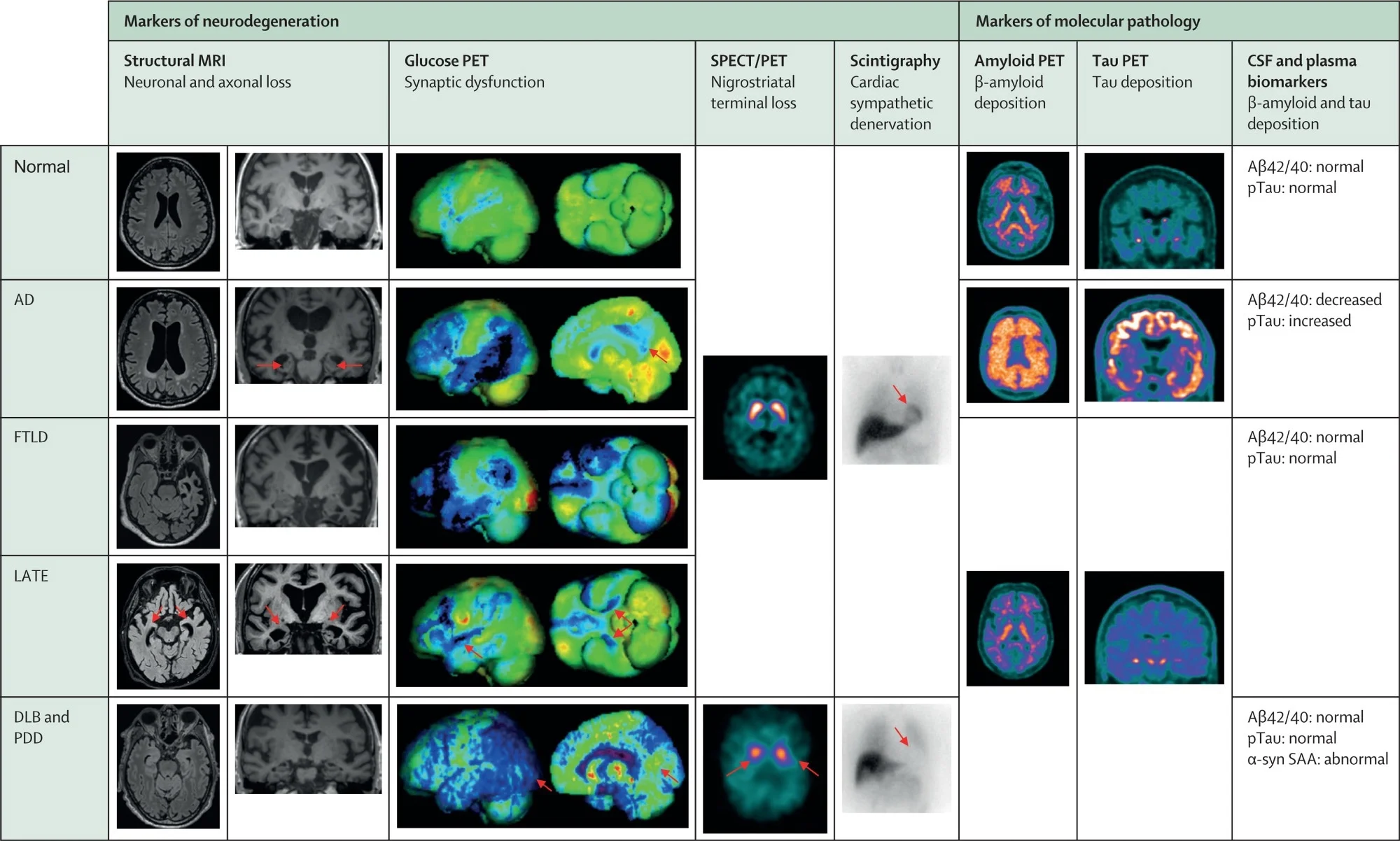

New biomarker-based therapeutics are reshaping dementia care, giving doctors the ability to detect Alzheimer’s and related diseases years before symptoms surface and opening the door to targeted treatments. With blood and spinal biomarkers powering earlier diagnoses and new drug pathways that may slow or even stop progression, researchers are calling this the most hopeful decade yet for dementia treatment.

Doctors in China have treated a woman with type 1 diabetes using lab-grown insulin-producing cells made entirely from her own tissue. After reprogramming her cells into stem cells and growing them into tiny insulin-releasing clusters, they transplanted them back into her body. One year later, her blood sugar remains normal without any medication. It’s the first time someone with type 1 diabetes has been freed from insulin injections using cells derived from themselves rather than a donor or embryo, hinting at a future of personalized therapy for millions.

Enjoying The Hillsberg Report? Share it with friends who might find it valuable!
