Quote of the week

“Do not wait to strike till the iron is hot, but make it hot by striking.”

- William Butler Yeats

Edition 40 - October 5, 2025

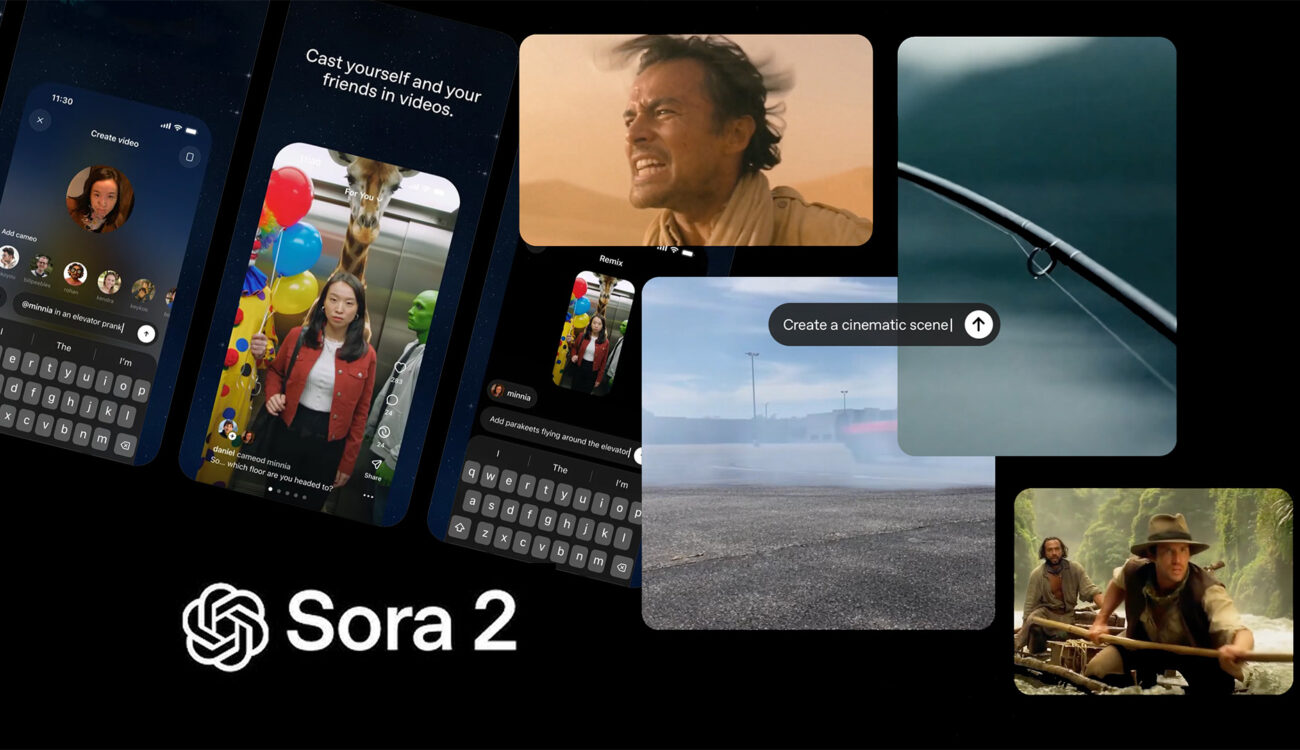

OpenAI’s new video model, Sora 2, is a strange mix of Hollywood magic and Silicon Valley math. It can generate photorealistic video, complete with audio, and even lets you appear in the scene. The company’s companion app turns this tech into a social feed, part TikTok and part simulation lab. It is currently invite-only, which makes it feel more like a closed beta than a public launch.

The big new feature is “cameos,” where you can record a short clip of yourself once and then drop your likeness into AI-generated worlds. Here's a solid YouTube video walking through the app. I was lucky enough to get access from my good friend Ethan Steinberg. Take a look at the video I created below.

The Sora app feels familiar (think TikTok, YouTube Shorts, Meta Reels), with its endless scroll of remixable clips and algorithmic recommendations, but it is also unsettling. Every video carries a watermark and metadata tag to signal its AI origin, yet it is easy to imagine how this could blur the line between creator and creation. OpenAI says it has layered in moderation, parental controls, and consent rules for cameos, but safety systems are only as good as their enforcement.

What impressed me most was not the realism but the accessibility. It took minutes to generate something that once would have required a studio and a team. That is the real story here: video creation is now as easy as typing a sentence. Whether that is exciting or terrifying may depend on how comfortable you are seeing yourself in worlds that never existed. One final key idea: right now is the worst the app will ever be.

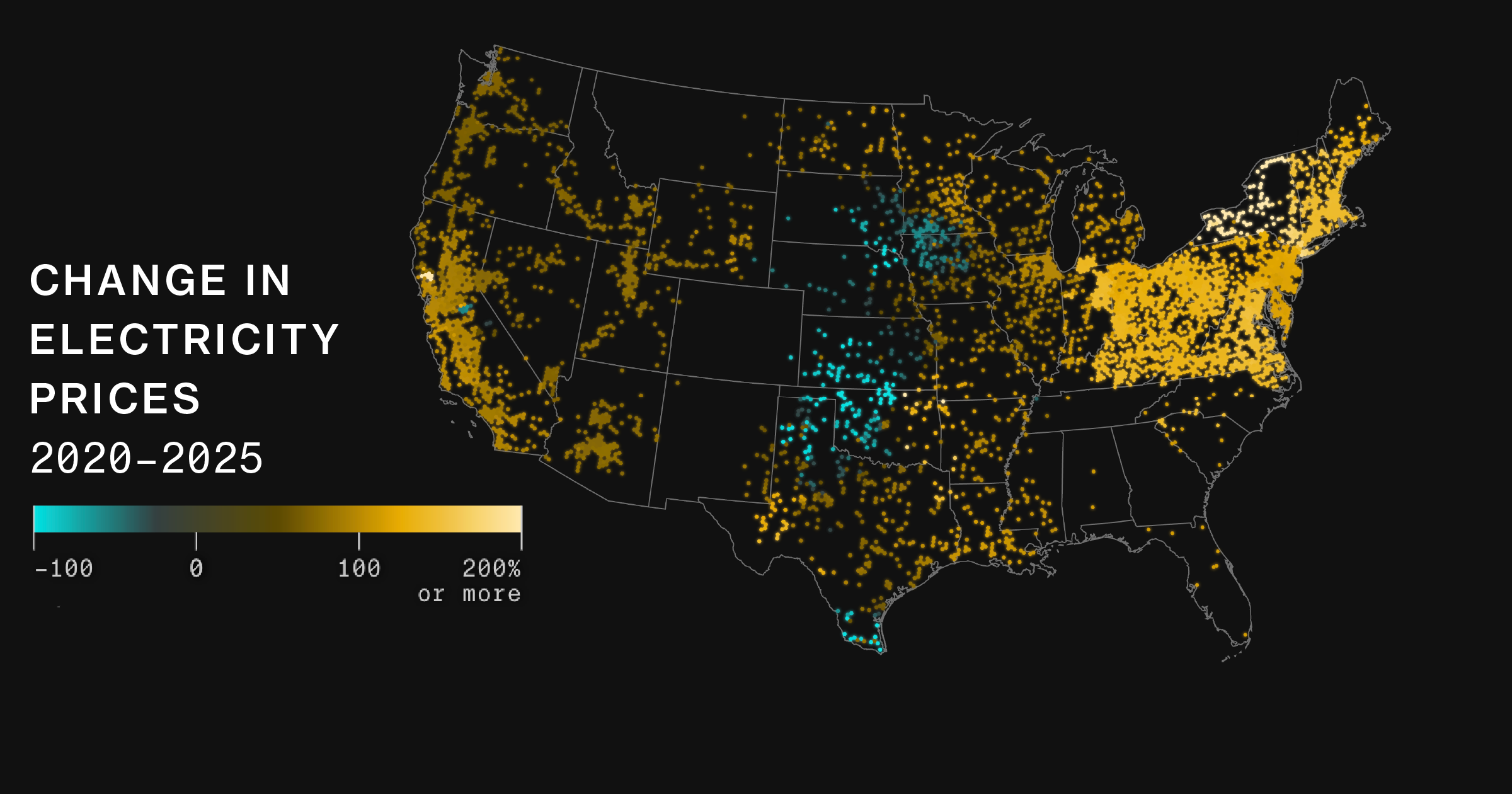

Consumer electricity prices could double within the next five years. This is simple economics: demand is rising much faster than supply. The surge in demand is not coming mainly from households or EVs; it is coming from AI. Data centers that power large language models, image generation, and cloud inference are pulling round-the-clock megawatts that many utilities never planned for. Yet supply remains constrained, limited by slow regulatory approvals, grid congestion, and uneven investment in new generation capacity. Hydrocarbon plants that could stabilize the grid face permitting delays and political hesitation, which leaves fewer short-term options to meet the spike.

The mechanics are straightforward. Interconnection queues are crowded, transmission lines are oversubscribed, and financing costs are higher than they were just a few years ago. Wind, solar, and storage are scaling, but their build-out and tie-in timelines trail the pace of AI demand. New nuclear moves slowly, hydrocarbon capacity additions are contentious, and grid hardening competes for the same labor and materials. The result is a widening gap between how much power the system can deliver at any hour and how much data center load arrives with little flexibility.

Consumers feel the gap through rate cases, fuel adjustments, and new peak-pricing structures. Utilities recover rising generation and grid costs through regulated frameworks, and that pass-through lands on households and small businesses first. Time-of-use rates, demand charges, and reliability surcharges add up, especially in regions where AI buildouts cluster. In the aggressive scenarios, those layers compound into an outcome that matters at the kitchen table: power prices that double within five years, even before any new carbon costs show up.
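To make the doubling claim concrete, here is a small sketch of the arithmetic. Doubling in five years implies a compound annual increase of 2^(1/5) - 1, about 14.9 percent. The layer percentages below are hypothetical placeholders, not figures from any utility or rate case; they only show how several modest increases stack into a large one.

```python
def implied_annual_growth(multiple: float, years: int) -> float:
    """Annual rate r such that (1 + r) ** years == multiple."""
    return multiple ** (1 / years) - 1


def compound_layers(layer_rates: list[float], years: int) -> float:
    """Price multiple after several annual increases stack for `years` years."""
    yearly_multiple = 1.0
    for rate in layer_rates:
        yearly_multiple *= 1 + rate  # each layer scales the bill left by the others
    return yearly_multiple ** years


required = implied_annual_growth(2.0, 5)
print(f"Doubling in five years needs about {required:.1%} per year")

# Hypothetical annual layers: base rate case 8%, fuel adjustment 4%, reliability surcharge 2.5%
layers = [0.08, 0.04, 0.025]
print(f"Five-year multiple: {compound_layers(layers, 5):.2f}x")
```

With these made-up layers, roughly 15 percent a year compounds to about 2.02 times the starting bill after five years.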

The fix is not mysterious: it is execution and targeted deregulation. In the short term, accelerate permitting for generation and transmission, align incentives for storage and fast-ramping resources, and reward flexible loads that can shift compute or cooling out of peak hours. Require AI campuses to be sited near firm or stranded generation, expand demand response for commercial buildings, and push efficiency upgrades that cut baseline consumption. Longer term (5+ years), we need to be ready with nuclear. Without those moves, the era of stable electricity prices ends quickly. With them, the grid can absorb AI’s appetite without turning every monthly bill into a shock. This was a tough realization for me, as I've written in the past that I see the price of compute and electricity going to near zero over time. I still believe that will happen; I've just reset my expectations for how long it will take.
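The idea of rewarding flexible loads can be illustrated with a toy scheduler: given hourly prices, a deferrable job runs in the cheapest hours instead of the evening peak. The tariff, the 20 MW load, and the function names are hypothetical, and the sketch ignores deadlines, ramp limits, and grid constraints that a real demand-response program would have to respect.

```python
def schedule_flexible_job(hourly_prices: list[float], hours_needed: int) -> list[int]:
    """Pick the cheapest hours (by index) for a job that can run in any hours of the day."""
    ranked = sorted(range(len(hourly_prices)), key=lambda h: hourly_prices[h])
    return sorted(ranked[:hours_needed])


def job_cost(hourly_prices: list[float], hours: list[int], load_mw: float) -> float:
    """Energy cost in dollars of running `load_mw` for one hour in each listed hour."""
    return sum(hourly_prices[h] * load_mw for h in hours)


# Hypothetical time-of-use tariff in $/MWh: $40 off-peak, $150 from 16:00 to 21:00
prices = [40.0] * 24
for hour in range(16, 21):
    prices[hour] = 150.0

load_mw = 20.0                               # hypothetical deferrable compute load
peak_hours = list(range(16, 20))             # naive: run four hours in the evening peak
shifted = schedule_flexible_job(prices, 4)   # flexible: run in the four cheapest hours
print(job_cost(prices, peak_hours, load_mw))  # 12000.0
print(job_cost(prices, shifted, load_mw))     # 3200.0
```

Under these assumed prices, shifting the job out of the peak cuts its energy cost from $12,000 to $3,200, and the same megawatts no longer land on the hours when capacity is tightest.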

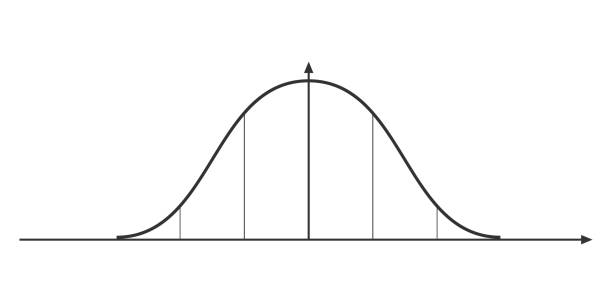

The secret sauce behind ChatGPT’s early virality was not mystical insight. It was randomization. Think about the first time you ever used ChatGPT. It was incredible not only because it could produce semi-intelligent text, but because it felt like a spark of consciousness. Ask it the same thing twice and it would give two completely different responses, much like a human might. I wonder whether sampling with temperature and other randomization was a deliberate choice from the beginning to optimize for virality.

Here's how it actually works: Instead of always choosing the single most likely next word, the model samples among several strong candidates using settings like temperature and nucleus sampling. That small dose of chance adds variety, makes replies feel conversational, and turns statistics into something that looks a lot like creativity.
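Here is a minimal sketch of that sampling step in plain Python, assuming a toy four-word vocabulary with made-up logits (real models score tens of thousands of tokens, and each provider's implementation differs). Temperature divides the logits before the softmax, so values below 1 sharpen the distribution and values above 1 flatten it; nucleus (top-p) sampling then keeps only the smallest set of top candidates whose combined probability reaches `top_p` and draws from those.

```python
import math
import random


def apply_temperature(logits: dict[str, float], temperature: float) -> dict[str, float]:
    """Softmax over logits / temperature; lower temperature sharpens the distribution."""
    scaled = {tok: value / temperature for tok, value in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(v - peak) for tok, v in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def top_p_filter(probs: dict[str, float], top_p: float) -> dict[str, float]:
    """Nucleus sampling: keep the smallest set of top tokens whose mass reaches top_p."""
    kept, mass = {}, 0.0
    for tok, p in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
        kept[tok] = p
        mass += p
        if mass >= top_p:
            break
    norm = sum(kept.values())
    return {tok: p / norm for tok, p in kept.items()}  # renormalize the survivors


def sample_next_token(logits, temperature=0.8, top_p=0.9, rng=random):
    if temperature == 0:
        return max(logits, key=logits.get)  # temperature 0 treated as greedy decoding
    probs = top_p_filter(apply_temperature(logits, temperature), top_p)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


# Hypothetical logits for the word after "The weather today is"
logits = {"sunny": 2.0, "cloudy": 1.6, "rainy": 1.1, "purple": -3.0}
rng = random.Random(0)
print([sample_next_token(logits, rng=rng) for _ in range(5)])
```

Repeated calls return a mix of "sunny", "cloudy", and "rainy", while the implausible "purple" is cut by the top-p filter. Setting `temperature=0` always returns the top-scoring word, which is why low temperatures feel repetitive and higher ones feel creative.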

This randomness also hides limits. When outputs change a little each time, it is easy to mistake fluency for understanding. You are seeing plausible continuations, not a model that truly knows. The charm comes from controlled unpredictability, not from reasoning about the world.

The path forward is balance. Keep randomness where it helps with tone and brainstorming, but rein it in when accuracy matters. That means better rewards for saying "I don't know," tighter controls on sampling, and stronger guardrails like retrieval and tools. Preserve the spark, curb the fiction. Parameters such as temperature let you control this randomness, but only to an extent. Even at a temperature of zero, outputs are not always identical, partly because of floating-point limits: computers round the result of every addition, so summing the same numbers in a different order, as parallel GPU hardware can, may produce slightly different values.
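The floating-point effect can be shown in a few lines. Float addition rounds at every step, so it is not associative, and parallel hardware does not always add numbers in the same order. The sketch below uses a hypothetical near-tie between two candidate tokens to show how a last-digit difference can flip a greedy, temperature-0 choice; real inference stacks have other sources of nondeterminism as well, such as batching, so treat this only as an illustration of the mechanism.

```python
def greedy_pick(scores: dict[str, float]) -> str:
    """Temperature-0 style decoding: take the highest score; ties go to the first key."""
    return max(scores, key=scores.get)


# The same three contributions summed in two different orders
parts = [0.1, 0.2, 0.3]
left_first = (parts[0] + parts[1]) + parts[2]   # 0.6000000000000001
right_first = parts[0] + (parts[1] + parts[2])  # 0.6
print(left_first == right_first)  # False: float addition is not associative

# Hypothetical near-tie between two candidate tokens
print(greedy_pick({"no": 0.6, "yes": left_first}))   # yes
print(greedy_pick({"no": 0.6, "yes": right_first}))  # no
```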

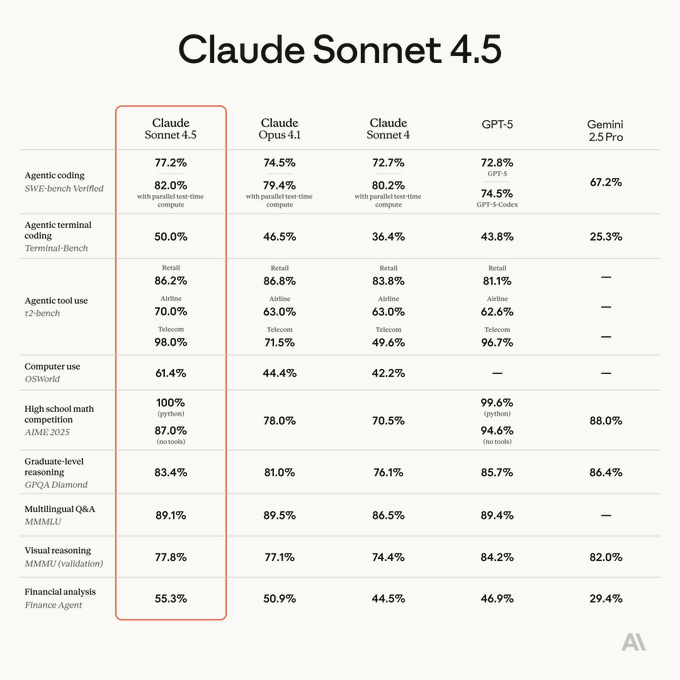

Anthropic has released Claude Sonnet 4.5, positioning it as its strongest coding model yet and the new backbone for complex AI agents. In public demos and reports, the model ran for roughly 30 hours on its own to build a Slack-style chat app, generating around 11,000 lines of code, a huge leap from the seven-hour runs seen earlier this year. Alongside the model came new tools: an Agent SDK for building multi-step workflows, memory and context-editing features for long-running tasks, code execution and file creation directly inside the Claude app, checkpoints in Claude Code, and a new VS Code extension. Pricing lands at $3 per million input tokens and $15 per million output tokens, and the model is already available through the API and Anthropic’s partners.
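At the listed prices, estimating the cost of a request is simple arithmetic. The helper below is a rough sketch that assumes flat list pricing; it ignores prompt caching, batch discounts, and any long-context pricing tiers, and the token counts in the example are hypothetical.

```python
INPUT_PRICE_PER_MTOK = 3.00    # USD per million input tokens (launch list price)
OUTPUT_PRICE_PER_MTOK = 15.00  # USD per million output tokens (launch list price)


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Rough USD cost of one request at flat list prices."""
    return (input_tokens * INPUT_PRICE_PER_MTOK
            + output_tokens * OUTPUT_PRICE_PER_MTOK) / 1_000_000


# Hypothetical agent step: a large slice of a repo in, a medium-sized patch out
print(f"${estimate_cost(200_000, 50_000):.2f}")  # $1.35
```

Because output tokens cost five times as much as input tokens, long agent runs that write a lot of code are driven more by what the model generates than by what it reads.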

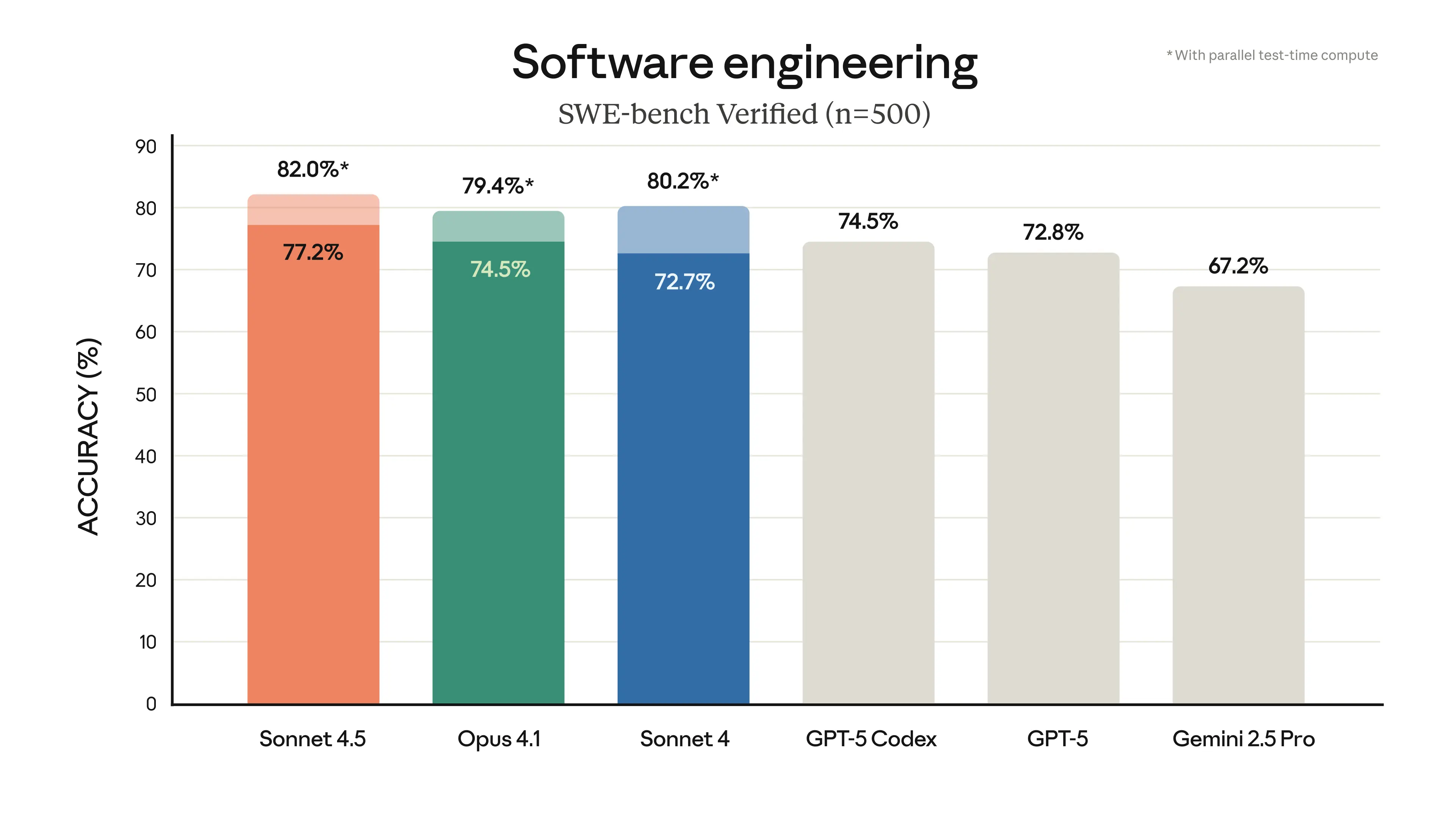

On benchmarks, Claude Sonnet 4.5 sets new highs. It scored 77.2 percent on SWE-bench Verified using a simple scaffold of bash and file-edit tools, and up to 82 percent with additional parallel compute. It also leads the OSWorld computer-use benchmark at 61.4 percent, up from 42.2 percent for Sonnet 4 earlier this year. Anthropic claims the model’s computer interaction abilities have roughly tripled over the past twelve months, which shows up in practical tasks like spreadsheet automation, browsing, and app management [read Anthropic's official post].

Early feedback from developers has been unusually positive. Teams testing the model in the new Claude Code environment say it now plans its work in phases, writes progress files, and resumes coding sessions without losing context. Cline’s engineers noted that Sonnet 4.5 writes tests first, ships cleaner code, and avoids repetitive commentary, which makes it feel more like a focused collaborator than a chatty assistant. Partners at GitHub and Canva reported stronger multi-step reasoning and better comprehension across large repositories.

Compared with OpenAI’s Codex and GPT-5, the gap is narrowing. Builder.io’s testing found Codex slightly faster at token generation and offering finer control over reasoning effort, while Claude’s new environment offers a more feature-rich setup and stricter permissions. Codex still leads in GitHub integration and open tooling [Builder.io], but several reviewers, including Zvi Mowshowitz, now consider Sonnet 4.5 the top coding model overall, though GPT-5 still wins for deep reasoning and tough debugging.

The real story is the infrastructure Anthropic built around the model. Between the Agent SDK, memory and context tools, and integration into Amazon Bedrock for enterprise routing and monitoring [AWS], Sonnet 4.5 is designed less as a chatbot and more as a coding cofounder. If Codex and GPT-5 are refining what great assistants can do, Claude seems to be quietly defining what autonomous software development might look like.

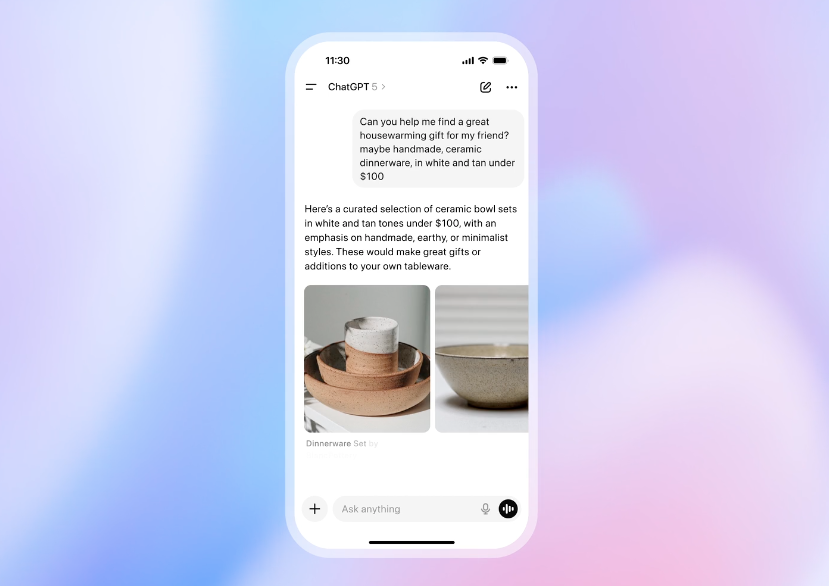

OpenAI turned ChatGPT into a point of sale with Instant Checkout, powered by the Agentic Commerce Protocol (ACP). As of September 29, 2025, logged-in users in the United States can buy from U.S. Etsy sellers directly in chat, with more than one million Shopify merchants coming soon. Results remain organic and unsponsored, merchants pay a small fee on each completed purchase, and ranking considers factors like availability, price, quality, primary-seller status, and whether Instant Checkout is enabled. Multi-item carts and additional regions are on the roadmap. Official OpenAI post.

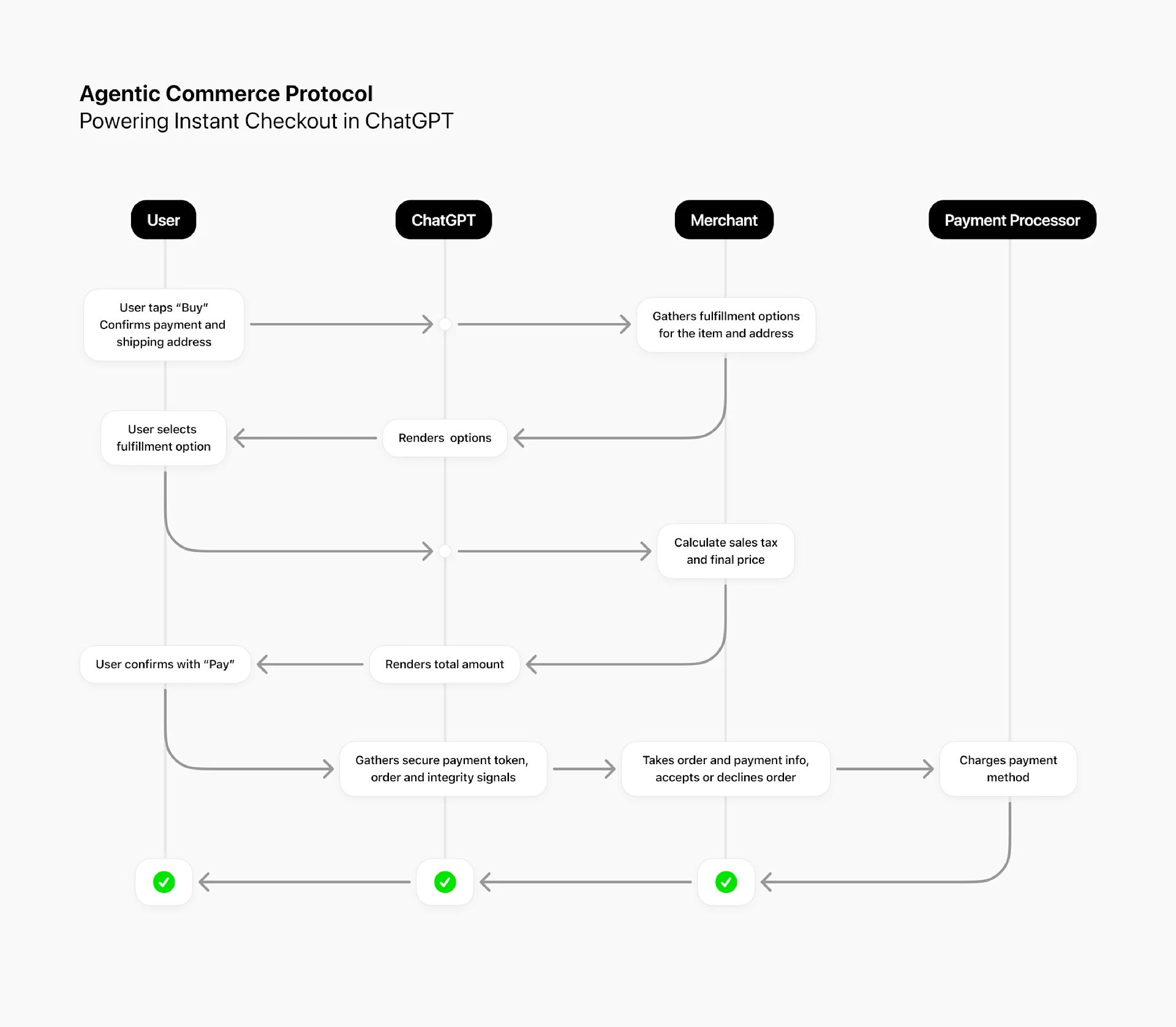

Under the hood, ACP is an open standard co-developed with Stripe that lets ChatGPT act as the user’s agent while you, the merchant, remain the merchant of record and keep your existing stack. To participate, you share a structured Product Feed so ChatGPT can surface accurate prices and availability, then expose a small REST surface for checkout. Payments run through your payment service provider (PSP). With Delegated Payments, ChatGPT passes a single-use, amount-capped token from a compatible PSP, such as Stripe’s Shared Payment Token, so you can authorize and settle as usual. Overview, Checkout spec, Delegated Payment, Product Feed.
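To make "single-use, amount-capped" concrete, here is a toy model of the rule such a token enforces. The class, its fields, and the amounts are hypothetical and do not mirror Stripe's Shared Payment Token API; in practice the PSP enforces these limits, not merchant code. Amounts are kept in integer cents to avoid floating-point rounding on money.

```python
from dataclasses import dataclass


@dataclass
class DelegatedToken:
    """Hypothetical model of a delegated payment token: one use, capped amount."""
    token_id: str
    max_amount_cents: int
    currency: str
    used: bool = False

    def authorize(self, amount_cents: int, currency: str) -> bool:
        if self.used:
            return False  # single use: any second charge is refused
        if currency != self.currency or amount_cents > self.max_amount_cents:
            return False  # must stay within the cap and currency the user approved
        self.used = True  # consume the token on the first successful authorization
        return True


token = DelegatedToken("tok_demo", max_amount_cents=4_500, currency="usd")
print(token.authorize(5_000, "usd"))  # False: over the cap
print(token.authorize(4_200, "usd"))  # True
print(token.authorize(4_200, "usd"))  # False: already used
```

The point of the design is that even if the token leaks, it can be spent only once and only up to the amount the user approved in chat.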

The build path is straightforward. Apply for Instant Checkout access, ship your Product Feed, then stand up the endpoints using HTTPS and JSON with required headers for Authorization, API Version, Idempotency Key, Signature, Timestamp, and Request Id. Emit order lifecycle webhooks, handle the delegated payment payload from your PSP, and keep payments on your rails. Before launch, complete certification in a sandbox that covers session creation with and without addresses, shipping option changes, delegated tokenization, order completion, recoverable errors like out of stock or payment declined, and idempotency behavior. Enforce TLS 1.2 or newer, allowlist OpenAI egress, and verify webhook signatures. Get started, Production readiness, Endpoint details.
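The signature, timestamp, and idempotency requirements above can be sketched as a merchant-side handler using only the Python standard library. This is a minimal sketch under stated assumptions: the header names follow the list in the text, but the signing scheme (HMAC-SHA256 over timestamp and body), the secret, the five-minute freshness window, the request body shape, and the session ID format are hypothetical stand-ins, so check the ACP checkout spec for the real details. A production version would also persist the idempotency cache and handle API-Version and Request-Id.

```python
import hashlib
import hmac
import json
import time
from typing import Dict, Optional, Tuple

SHARED_SECRET = b"demo-secret"  # hypothetical key; real key setup is defined with OpenAI
MAX_SKEW_SECONDS = 300          # hypothetical freshness window for the Timestamp header

# Idempotency-Key -> (hash of the request body, response returned the first time)
_idempotency_cache: Dict[str, Tuple[str, dict]] = {}


def sign(body: bytes, timestamp: str, secret: bytes = SHARED_SECRET) -> str:
    """Assumed scheme: hex HMAC-SHA256 over '<timestamp>.<body>' (not taken from the spec)."""
    message = timestamp.encode() + b"." + body
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def handle_create_session(headers: Dict[str, str], body: bytes,
                          now: Optional[float] = None) -> dict:
    """Verify freshness and signature, then create a session at most once per key."""
    now = time.time() if now is None else now
    timestamp = headers["Timestamp"]
    if abs(now - int(timestamp)) > MAX_SKEW_SECONDS:
        return {"status": 401, "error": "stale timestamp"}
    # constant-time comparison avoids leaking how many characters matched
    if not hmac.compare_digest(sign(body, timestamp), headers["Signature"]):
        return {"status": 401, "error": "bad signature"}

    key = headers["Idempotency-Key"]
    body_hash = hashlib.sha256(body).hexdigest()
    if key in _idempotency_cache:
        cached_hash, cached_response = _idempotency_cache[key]
        if cached_hash != body_hash:
            return {"status": 409, "error": "idempotency key reused with a different body"}
        return cached_response  # a retry gets the original answer, not a second session

    payload = json.loads(body)
    response = {
        "status": 201,
        "session_id": f"cs_{len(_idempotency_cache) + 1}",  # hypothetical ID format
        "items": payload["items"],                          # hypothetical body shape
    }
    _idempotency_cache[key] = (body_hash, response)
    return response


body = json.dumps({"items": [{"id": "sku_123", "quantity": 1}]}).encode()
ts = str(int(time.time()))
headers = {"Timestamp": ts, "Signature": sign(body, ts), "Idempotency-Key": "idem-1"}
first = handle_create_session(headers, body)
retry = handle_create_session(headers, body)
print(first == retry)  # True: the retry replays the original session
```

Rejecting a reused key that arrives with a different body (HTTP 409 here) is a common idempotency convention; whether ACP prescribes that exact status code is an assumption.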

When should you implement? If your catalog is well structured, your fulfillment is predictable, and you want a new acquisition channel inside ChatGPT, ACP is a strong bet. Start with top sellers and measure conversion uplift, return rates, and fraud. If you rely on configurators, quotes, complex bundles, or multi-shipment workflows, consider piloting a limited subset while carts and richer flows mature. Etsy and Shopify merchants may qualify with minimal lift; everyone else can still prototype, since ACP is open and designed to work across processors. The payoff is discovery that converts inside the conversation without giving up your payments, data, or customer relationship. Launch details, Key concepts.

Bone-02 is a breakthrough medical adhesive developed by Chinese scientists to repair broken bones without metal plates, screws, or major surgery. The glue is injected directly into the fracture, where it bonds bone fragments together within two to three minutes, even in areas with heavy bleeding, and accelerates the healing process. Its design was inspired by oysters, which use a protein-rich natural glue to cling to hard surfaces in wet, salty, and constantly moving conditions. That biological adhesive forms an exceptionally strong, erosion-resistant bond, and Bone-02 is designed to replicate the mechanism inside the human body.

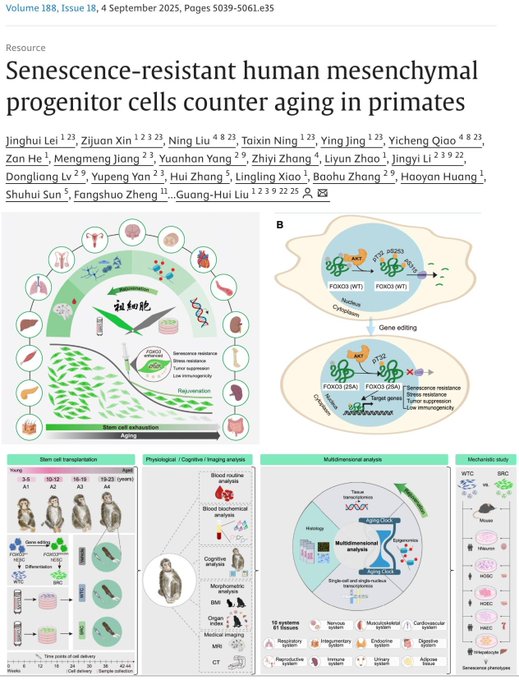

Senescence-resistant stem cells are a promising frontier in the fight against degenerative aging. Unlike regular stem cells, these specialized cells are more resilient to the cellular stress and damage that build up over time, which normally lead to senescence—a state where cells stop dividing and contribute to tissue decline. By maintaining their regenerative capacity for longer, senescence-resistant stem cells could help repair tissues more effectively, slow the progression of age-related diseases, and potentially extend healthy lifespan. Researchers are exploring ways to engineer or select for these cells to enhance therapies for conditions such as neurodegeneration, muscle wasting, and organ failure.

A major study by Imperial College London found that deaths from chronic diseases fell in four out of five countries between 2010 and 2019, driven by progress against heart disease, strokes, and several major cancers. Researchers called it a global “success story” but warned that progress has slowed due to rising deaths from dementia and harder-to-treat cancers, and that many people still lack access to effective treatments and screenings.

California has become the first U.S. state to pass a bill that defines ultra-processed foods and bans them from school meals. These industrially manufactured products, often high in saturated fat, sugar, and salt, are linked to negative health effects. Assembly Bill 1264, which phases them out, passed with bipartisan support; its Democratic author, Assemblymember Jesse Gabriel, said both parties are uniting to protect children’s health through a “bipartisan, science-based approach.”

Enjoying The Hillsberg Report? Share it with friends who might find it valuable!
