Quote of the week

“Ships are safe in harbor, but that’s not what ships are for.”

- John A. Shedd

Edition 31 - August 3, 2025

Coinbase just announced a major expansion of its trading platform — one that could reshape how Americans interact with financial markets. In the coming months, US users will gain access to tokenized versions of real-world assets, including stocks, derivatives, early-stage token sales, and even prediction markets. The offering will roll out internationally after jurisdictional approvals. This brings Coinbase into more direct competition with Robinhood, Kraken, and Gemini. Meanwhile, the SEC is beginning to adjust its stance, launching an initiative to modernize securities regulations to account for crypto-based trading platforms.

One of my first ever LinkedIn posts was about fractional shares. I’ve always believed in lowering the barrier to entry for investing. I watched it unfold during the Robinhood wave in college, then again during the crypto boom (for better and worse). But now we’re entering a third chapter — one that’s potentially more profound. Tokenized assets can be traded around the clock. You don’t need thousands of dollars. You just need internet access and a few spare cents. It’s a version of financial access that barely seemed possible just a decade ago.

What hasn’t been solved yet is the world of private investing. Access to early-stage companies and private equity is still locked behind the “accredited investor” classification — a standard built for a different era. Yes, protections matter. But so does freedom. People should be able to use their own money how they see fit, especially in America. I hope players like Coinbase and Robinhood keep pushing the envelope here. They’re not just building apps — they’re laying the groundwork for a generation that demands more from its financial system.

A startup named Taara is offering a clever alternative to fiber optic cables: free-space optical lasers. Instead of burying cables through cities, mountains, or jungles, Taara’s system beams internet through the air using lasers that can send data at 20 gigabits per second over distances up to 20 kilometers. A smaller version is in the works — one that could operate indoors at 60 meters and outdoors at 1 kilometer with similar speeds. The only major limitation? Weather. But as long as both ends can “see” each other, the system typically performs well.
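To put those numbers in everyday terms, here is a quick back-of-the-envelope sketch. It takes the 20 Gbps and 20 km figures from above at face value and assumes an ideal link: no protocol overhead, no weather losses, and decimal gigabytes. The 5 GB file size and the function names are hypothetical, chosen only for illustration.

```python
# Figures quoted in the text above.
LINK_RATE_GBPS = 20.0      # gigabits per second
LINK_DISTANCE_KM = 20.0    # kilometers

# Speed of light in a vacuum; light in air is only marginally slower.
SPEED_OF_LIGHT_KM_PER_S = 299_792.458


def transfer_seconds(file_gigabytes, rate_gbps=LINK_RATE_GBPS):
    """Ideal transfer time: gigabytes * 8 bits per byte / gigabits per second."""
    return file_gigabytes * 8 / rate_gbps


def one_way_delay_ms(distance_km=LINK_DISTANCE_KM):
    """Time for light to cross the link, in milliseconds."""
    return distance_km / SPEED_OF_LIGHT_KM_PER_S * 1000


file_seconds = transfer_seconds(5.0)  # hypothetical 5 GB file
delay_ms = one_way_delay_ms()
print(f"5 GB file: {file_seconds:.1f} s, one-way delay: {delay_ms:.3f} ms")
```

Under these assumptions a 5 GB file crosses the link in about two seconds, and the beam itself adds well under a tenth of a millisecond of delay. Real-world throughput would be lower once overhead and conditions are factored in.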

The story of the internet is a story of infrastructure. It started in universities with early mainframes and copper wiring, moved into public use with dial-up modems and telephone lines, and then exploded with the arrival of broadband. Wireless changed everything again, first with 3G, then 4G, and now 5G. Fiber optic networks brought faster and more stable connections, but they’re expensive to install and maintain. Meanwhile, satellite internet like Starlink opened new possibilities for remote areas. And now we may be entering a new chapter, where internet rides on beams of light through open air instead of buried cables or radio signals.

This kind of tech could change lives. Imagine beaming high-speed internet across a canyon in Nepal, between ships in the middle of the ocean, or from one side of a desert outpost to another. Even temporary setups like disaster relief zones or mobile events could benefit from instant internet, no cables required. As with all new infrastructure, we need to proceed carefully. Developers should rigorously test both short- and long-term effects on human health, especially as these beams become more common indoors and in dense environments. I have conspiratorial concerns about the safety testing of all technology, even things we’ve grown accustomed to. It’s expensive to test. It’s even more expensive to develop a new and useful technology, only to discover that it’s dangerous to human health. We live in a world of misaligned incentives... one day I may even write out a theory for it.

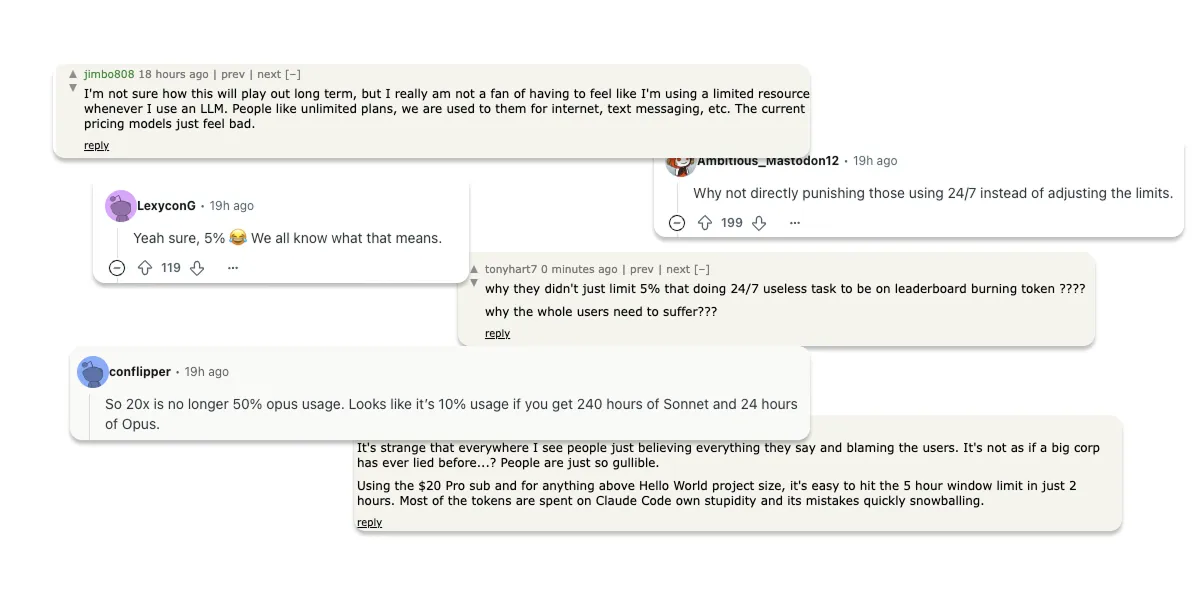

Claude Max, Anthropic’s top-tier subscription plan for its Claude AI assistant, recently hit a wall. Users pushed back when new usage limits were introduced, just like they did with coding tools Cursor and Windsurf. The backlash wasn’t about deception; it was about disruption. Developers need flow state, not capped tokens that shut off mid-debug. While SEO or copywriting tools can handle credit systems (since their work is broken into short tasks), building out a 5-hour feature or solving an elusive bug is a different beast. Hitting a usage wall inside your IDE is infuriating. These companies aren’t trying to nickel-and-dime people; they’re stuck. AI is simply too expensive to offer in the “unlimited” form developers want. Just like mobile data in the early 2000s, we’ll look back on this phase and laugh once the infrastructure catches up. This article covers it well.

Everyone assumes we’re heading toward unlimited AI usage — and it’s easy to see why. The narrative from government and Big Tech is about “winning the AI race.” But how do we actually get there? What breakthroughs will make AI cheap enough to remove usage caps altogether? What infrastructure has to change to support a world where intelligence is as available as electricity? We’re not just talking about cheaper models — we’re talking about a re-engineering of how AI is accessed and delivered.

To get there, we’ll need better chips, more localized inference, and a lot more electricity. We’ll need to build hardware that runs models more efficiently, likely with a new generation of accelerators that beat current GPUs on energy per token and latency. Data centers must scale sustainably, especially as demand grows. That means innovations in cooling, power usage, and edge deployment. We’ll also need breakthroughs in model compression and quantization: squeezing more performance out of smaller architectures without sacrificing quality. If we stopped developing smarter models entirely and just focused on making inference cheaper, we could still unlock tremendous gains by refining the supply chain from silicon to socket. I wonder how many individuals truly know where to allocate the capital most effectively.
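For readers who haven’t seen quantization up close, here is a minimal sketch of the basic idea. It assumes the simplest possible scheme (symmetric 8-bit quantization with one shared scale), and the weight values and function names are made up for illustration. Production systems use far more sophisticated variants, so treat this as a toy, not as how any particular model is compressed.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Map floats to integers in [-127, 127] using a single shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float values from the stored integers."""
    return [v * scale for v in quantized]


# Hypothetical weights, purely illustrative.
weights = [0.12, -0.5, 0.33, 1.27, -1.0]

quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

# Worst-case rounding error introduced by storing integers instead of floats.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(quantized, scale, max_error)
```

Each weight now fits in one byte instead of the four bytes a 32-bit float needs, and the rounding error stays within half of the scale. The trade-off is exactly the one described above: less memory and cheaper arithmetic in exchange for a small loss of precision.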

If you're relying on Google, Amazon, or Microsoft to store your files, run your apps, or host your services, you don't own anything. You're renting access. That’s the hard truth Drew Lytton realized when he tried to escape the corporate cloud by building his own home server. While empowering, self-hosting was isolating, brittle, and impractical for most people. So he proposed a different idea: a public internet infrastructure run by small communities — like a co-op cloud. Instead of everyone going it alone or handing everything to a trillion-dollar company, local groups could pool resources and fund reliable, community-hosted alternatives. Read the full article here.

It sounds idealistic, but is it even possible? How long would it take to build? Would it require thousands or millions of people? And if something like this gained momentum, would the big cloud providers squash it or try to co-opt it? The path isn’t clear yet, but it’s not impossible. History shows that small, motivated groups can spark real change if they pick the right moment. There may be a tipping point — like a major cloud outage, price spike, or privacy scandal — that drives enough people to try something new. Once adoption reaches a critical mass, the economics and network effects could shift in favor of community-hosted clouds.

The article outlines a few actionable first steps. Start with lightweight hosting cooperatives: small groups of 5 to 50 people who pool money to run a shared server with encrypted backups, fault tolerance, and uptime guarantees. Use tools like Nix, Fly.io, and Tailscale to make deployment, upgrades, and networking seamless. Build culture around contribution, not consumption. As more communities join, they can connect through federation, sharing services while retaining local autonomy. The real battle will be fighting the inertia of convenience. Cloud companies will offer “community-like” plans, discount pricing, or new privacy features to prevent people from leaving. But if a real alternative ecosystem forms, the next decade could mirror what happened with Linux. It started in basements, and now it runs the world.

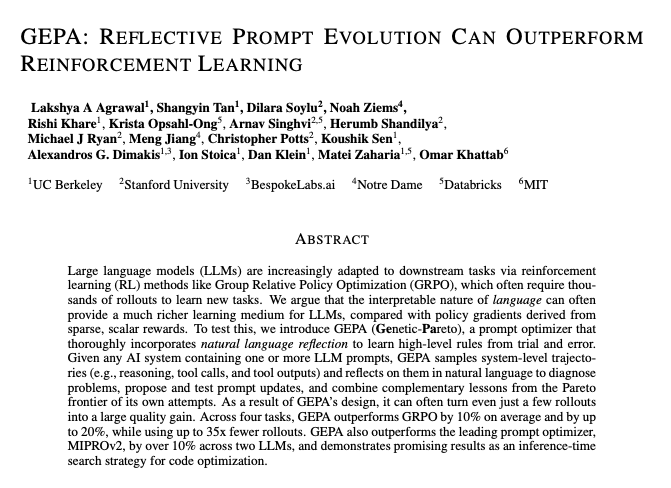

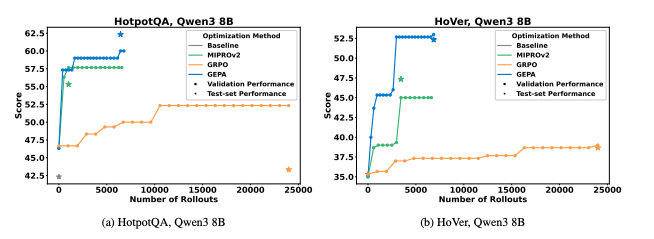

A new research paper proposes a powerful new approach for training language models to complete tasks using far fewer attempts. Traditionally, reinforcement learning systems require thousands of expensive trial-and-error runs, relying only on a basic success or failure signal. The new method, called GEPA (Genetic-Pareto), changes that by introducing natural language reflection. Instead of blindly retrying, the system asks itself what went wrong and uses those insights to evolve better prompts. It then applies a Pareto-based strategy to select the best ones. The result? GEPA outperforms both top-tier reinforcement learning techniques like GRPO and existing prompt optimization methods like MIPROv2, all while using up to 35 times fewer training runs. Full paper here.
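To make the “Pareto-based” part concrete, here is a minimal sketch of Pareto selection in general. This is not the paper’s implementation. It assumes each candidate prompt has a score per task, and it keeps every prompt that no other prompt beats across the board. The prompt names and scores are invented for illustration.

```python
from typing import Dict, List


def dominates(a: List[float], b: List[float]) -> bool:
    """True if a is at least as good as b on every task and strictly better on one."""
    at_least_as_good = all(x >= y for x, y in zip(a, b))
    strictly_better = any(x > y for x, y in zip(a, b))
    return at_least_as_good and strictly_better


def pareto_front(scores: Dict[str, List[float]]) -> List[str]:
    """Keep every candidate that no other candidate dominates."""
    return [
        name
        for name, own in scores.items()
        if not any(
            dominates(other, own)
            for other_name, other in scores.items()
            if other_name != name
        )
    ]


# Hypothetical per-task scores for three candidate prompts (made-up numbers).
candidate_scores = {
    "prompt_a": [0.9, 0.2, 0.5],
    "prompt_b": [0.4, 0.8, 0.5],
    "prompt_c": [0.3, 0.7, 0.4],
}

front = pareto_front(candidate_scores)
print(front)
```

Here `prompt_c` is dropped because `prompt_b` matches or beats it on every task, while `prompt_a` and `prompt_b` both survive because each wins somewhere the other loses. Keeping several specialists alive, rather than a single overall winner, is what lets a search like this preserve different strategies for later rounds.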

This isn’t just an academic win — it’s a big deal for the future of AI efficiency and capability. If large models can learn new tasks faster, cheaper, and more intelligently by reflecting in language rather than brute-forcing solutions, it could radically change how we build, train, and deploy them. Instead of requiring huge compute budgets, we might start seeing compact, self-improving agents that optimize themselves on the fly with much less trial-and-error. The entire cost curve of training and tuning AI could shift.

Imagine a personal coding assistant that not only writes code but explains its mistakes to itself and then rewrites its approach without needing you to re-prompt it 10 times. Or a tutoring bot that learns to teach better each time a student gets confused, not after 10,000 examples, but after 10. Even hardware control agents in factories or labs could iterate on strategies faster with less wear on equipment. GEPA could help usher in a smarter, more introspective generation of AI systems: ones that don’t just learn, but learn how to learn better.

A pair of researchers—a data scientist and an immunologist—used AI to design entirely new cancer-fighting proteins. In just a few weeks, they created custom T cell receptor-like molecules that successfully killed cancer cells in lab tests. This is a huge leap forward in speed, considering the same process usually takes over a year.

A new pill could soon offer relief to millions suffering from sleep apnea. In Phase 3 clinical trials, Apnimed’s nightly oral medication helped keep airways open, offering an easier alternative to CPAP machines. These devices are effective but often noisy and uncomfortable—leading many to stop using them. This pill could be a quieter, simpler solution to improve rest and quality of life.

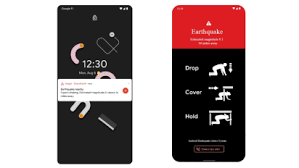

Your smartphone might already be saving lives. Google’s Android Earthquake Alerts System has turned millions of devices into a global early warning network. By detecting vibrations through built-in sensors and triangulating them in real time, the system can issue alerts seconds before a quake hits. It’s now active in 98 countries—bringing life-saving tech to places without sirens or expensive infrastructure.

India has achieved major progress against leprosy, cutting new cases by 41% over the past decade—from 130,000 in 2012 to just 76,000 in 2022. The drop is even sharper in children, with a 59% decrease. This success stems from earlier diagnoses, accessible multi-drug treatments, and public awareness campaigns. The next challenge: improving post-treatment care and fighting stigma.

A drug that could regrow human teeth is now being tested in clinical trials in Japan. The science is based on the discovery that humans still have dormant tooth buds capable of forming a third set. The drug works by blocking a protein that prevents these teeth from developing. If trials go well, this treatment could be available to the public by 2030.

Enjoying The Hillsberg Report? Share it with friends who might find it valuable!
