Quote of the week

“We must all either wear out or rust out - every one of us. My choice is to wear out.”

- Theodore Roosevelt

Edition 30 - July 27, 2025

A stablecoin is a type of digital money designed to hold a steady value. Most stablecoins are linked (or "pegged") to a real-world currency like the U.S. dollar, so one stablecoin is meant to always be worth one dollar. You can send, receive, or store them online, much like PayPal or Venmo, but they live on blockchains the way other cryptocurrencies do. The big difference from cryptocurrencies like Bitcoin? Stablecoins don’t swing wildly in price every day. They are built to hold their peg, so their value stays close to $1.

People like stablecoins because they’re fast, easy to send across borders, and don’t require a bank. Say you want to send money to a friend in another country. Through traditional banks, that can take days and cost a chunk in fees. With stablecoins, the transfer can settle in minutes for a fraction of the cost. Apps, online shops, and even charities are starting to use them because they combine the speed and flexibility of crypto with the price stability of regular money.

Here’s where it gets interesting for the U.S. dollar. Most stablecoins are pegged to the dollar, so they help spread its use around the world. If people in other countries start using dollar-based stablecoins for everyday payments, savings, and business, that strengthens the dollar’s role as the world’s top currency. That’s a huge deal. It gives the U.S. more influence in global finance and makes it easier to export American financial products straight to users anywhere, without banks as middlemen and regardless of borders. That’s why the recent stablecoin legislation passed with bipartisan support and matters so much.

But not everyone is cheering. Traditional banks are nervous, because stablecoin companies are becoming competitors, especially for payments and savings. Stablecoin issuers must keep every dollar of deposits fully backed by safe assets like cash or Treasury bonds. If you give them $100, they can’t lend it out the way a bank would. Banks, in contrast, keep only a small fraction of deposits on hand (the fractional reserve model) and lend out the rest. That model works until there’s a panic. By comparison, stablecoins could start to look safer, and that could seriously threaten the way traditional banking has always worked. On the other hand, the 1:1 reserve requirement makes it very difficult for these companies to scale. Still, over time, the traditional bank model for currency transactions just doesn’t make a ton of sense.
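To make the reserve difference concrete, here is a toy Python sketch. It is a simplification, not a model of actual banking rules. The function names are hypothetical, and the 10% bank reserve ratio is an illustrative figure, not a real regulatory requirement. It shows how much of $100 in deposits each kind of institution could lend, and whether its reserves would cover a sudden wave of withdrawals.

```python
# Toy model only: shows how the reserve ratio limits lending and run resilience.
# The 10% bank reserve ratio used below is illustrative, not a real regulation.


def lendable_amount(deposits: float, reserve_ratio: float) -> float:
    """Portion of deposits an institution could lend after holding reserves."""
    if not 0.0 <= reserve_ratio <= 1.0:
        raise ValueError("reserve_ratio must be between 0 and 1")
    return deposits * (1.0 - reserve_ratio)


def can_meet_withdrawals(deposits: float, reserve_ratio: float, withdrawals: float) -> bool:
    """True if the reserves on hand cover a wave of withdrawals."""
    return withdrawals <= deposits * reserve_ratio


if __name__ == "__main__":
    deposits = 100.0
    # Stablecoin issuer: 1:1 backing, so nothing is left over to lend.
    print("Issuer can lend:", lendable_amount(deposits, 1.0))
    # Hypothetical bank holding 10% in reserve.
    print("Bank can lend:", lendable_amount(deposits, 0.10))
    # A panic where depositors ask for 30% of their money back at once.
    print("Issuer covers the run:", can_meet_withdrawals(deposits, 1.0, 30.0))
    print("Bank covers the run:", can_meet_withdrawals(deposits, 0.10, 30.0))
```

With 1:1 backing, the issuer has nothing left to lend but can cover any redemption. The bank can lend $90 and earn on it, but it cannot cover a withdrawal wave larger than its reserves. That trade-off is both the scaling problem and the safety argument.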

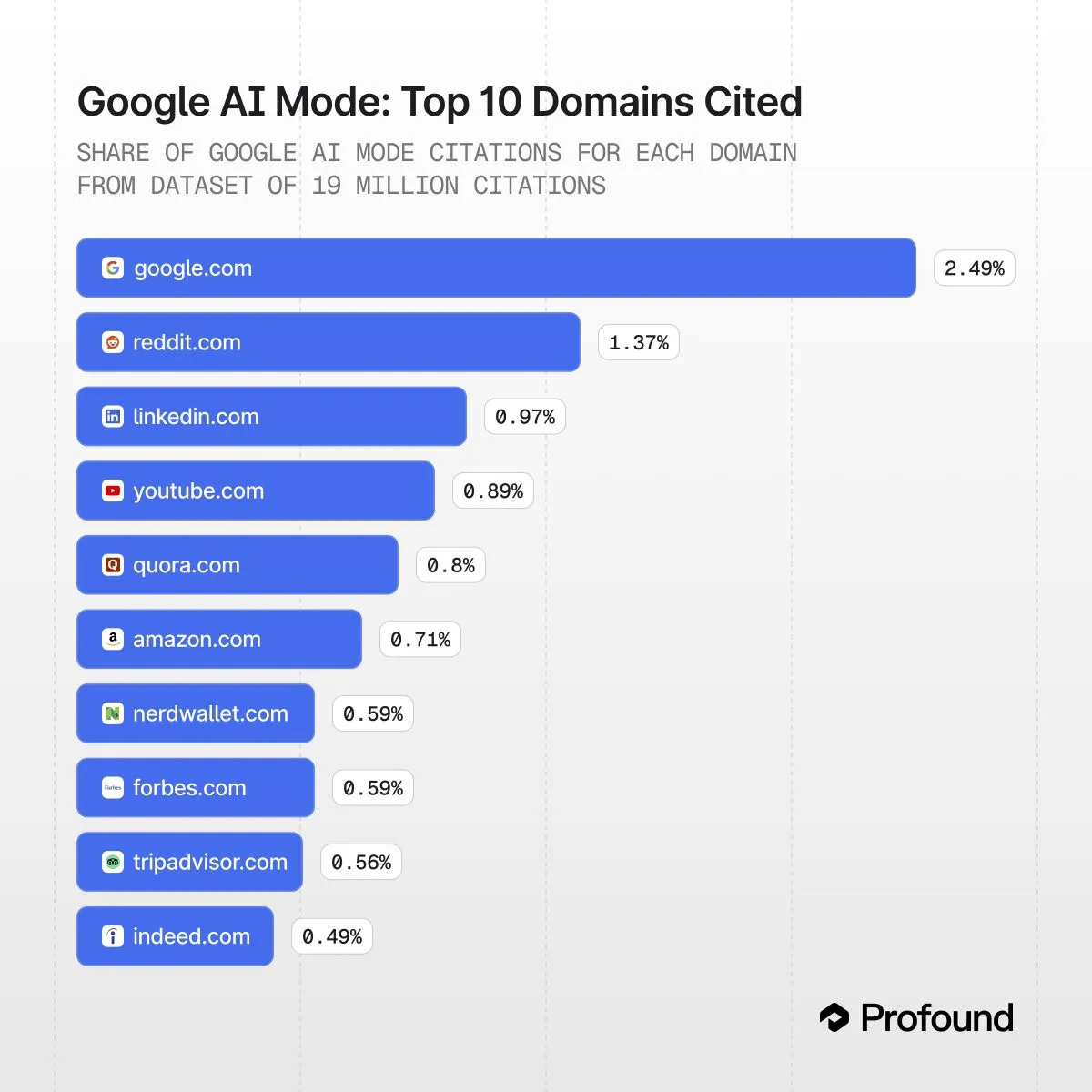

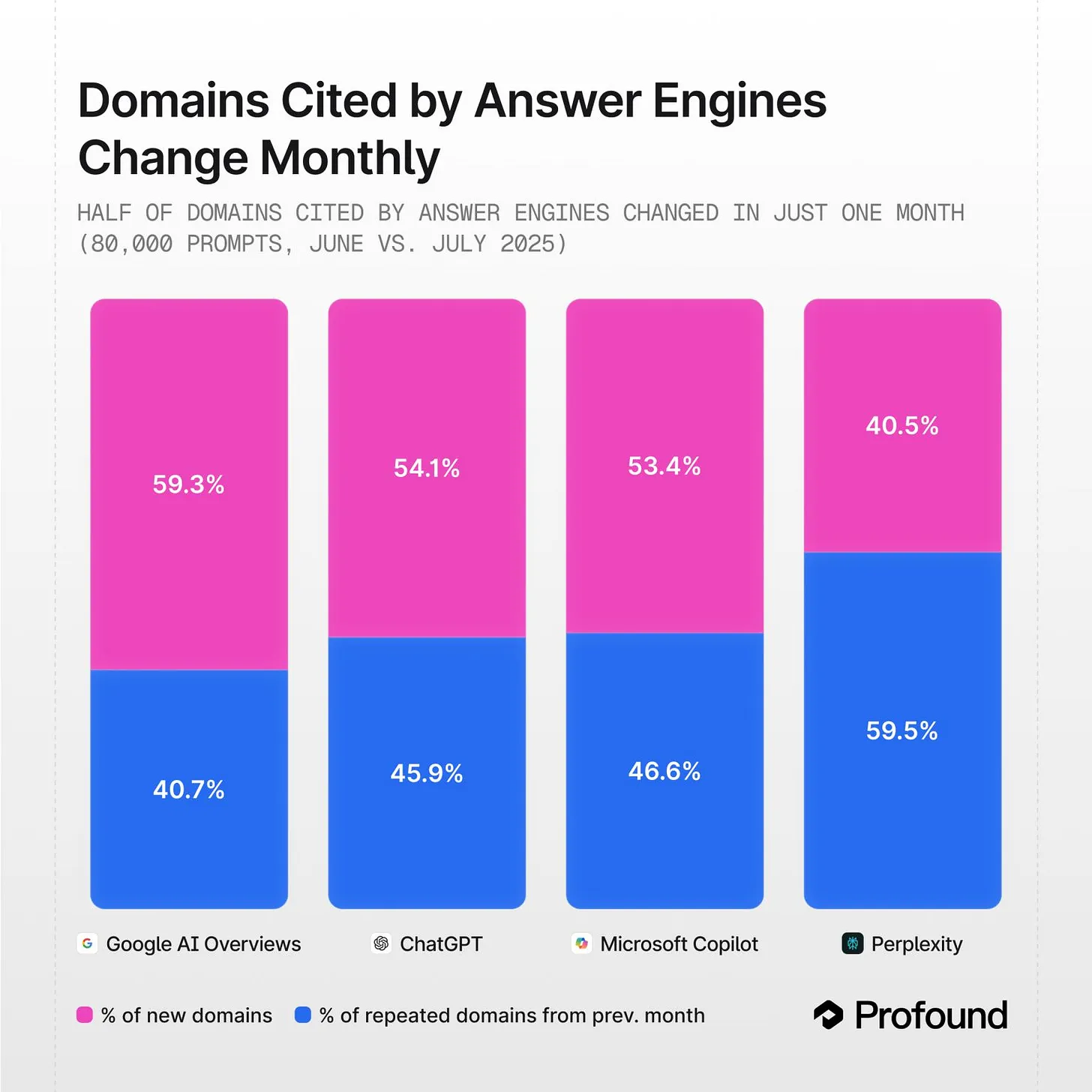

Answer Engine Optimization (AEO) — also known as LLM Engine Optimization or GenAI Engine Optimization or a million other things — is the new frontier of digital visibility. As tools like ChatGPT, Claude, and Perplexity replace Google (and as Google replaces itself) as the first stop for many information seekers, companies need to shift how they structure and distribute content. Rather than optimizing for search engines, you're now optimizing for language models. This tactical guide by Dear Stage 2 and this companion piece by Growth Unhinged explain how marketers are adapting to make their brands show up in AI responses.

Why does it matter? Because LLMs are becoming distribution channels. The Growth Unhinged article highlights that being mentioned by ChatGPT is now a legitimate customer acquisition strategy, and that companies like Loom and Notion have already seen measurable impact from being frequently recommended by LLMs. Meanwhile, buyers are skipping Google and going straight to AI for recommendations, especially in B2B SaaS. According to these pieces, leads sourced this way convert faster and more reliably than those from traditional SEO!

Jonathan Kvarfordt, Head of GTM at Momentum, starts his AEO process by mining sales call transcripts from closed-won and lost deals. The goal is to uncover authentic buyer pain points, which are then fed into prompts like: “If I had this problem, what would I ask ChatGPT?” The team then uses Claude to analyze competitor websites, forums like Reddit, and third-party research to compile a “deep research artifact.” This single document informs blog posts, landing pages, demo scripts, and more. It’s not just about content — it’s about buyer empathy and AI discoverability.

Content built for LLMs is structured more like a smart conversation than a keyword dump.

For SMBs, the easiest way to get started is by mining your inbox, support tickets, and sales call notes. Use free tools like ChatGPT or Claude to brainstorm the kinds of questions your prospects might be asking an AI. Then structure your homepage, feature pages, and blog posts to clearly answer those questions. Don’t forget About and Pricing pages — ChatGPT often pulls recommendations from these, not just your blog. Make your value prop brutally obvious and avoid jargon. You want clarity, not cleverness.

For enterprises, AEO needs to be operationalized across marketing, sales, and product. Centralize transcripts, interviews, reviews, and market research in a shared location. Build internal “deep research artifacts” that multiple teams can pull from. Assign a team to monitor where and how often your brand appears in LLM outputs. You can even experiment with lightly prompting AI models during key decision stages (e.g., “Ask ChatGPT why customers choose us over X”). Use tagging to track which content gets picked up, and iterate fast.
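For the monitoring step, a minimal Python sketch might look like the code below. It assumes you have already collected AI answers as plain text, for example by asking ChatGPT or Claude the same set of buyer questions each week and saving the replies. It does not call any model API. The brand names and responses are hypothetical. It reports the share of responses that mention each brand at least once.

```python
import re
from collections import Counter


def mention_counts(responses: list[str], brands: list[str]) -> Counter:
    """Count how many responses mention each brand at least once (case-insensitive)."""
    counts = Counter({brand: 0 for brand in brands})
    for text in responses:
        for brand in brands:
            # \b word boundaries stop "Acme" from matching inside "Acmeware".
            pattern = rf"\b{re.escape(brand)}\b"
            if re.search(pattern, text, flags=re.IGNORECASE):
                counts[brand] += 1
    return counts


def mention_share(responses: list[str], brands: list[str]) -> dict[str, float]:
    """Fraction of responses that mention each brand."""
    counts = mention_counts(responses, brands)
    total = len(responses)
    return {brand: counts[brand] / total if total else 0.0 for brand in brands}


if __name__ == "__main__":
    # Hypothetical saved answers to "What's the best call-recording tool for a small sales team?"
    responses = [
        "Many small teams use Acme or Globex for call recording.",
        "Acme is a popular choice because it is easy to set up.",
        "Consider Initech if you need on-prem hosting.",
    ]
    print(mention_share(responses, ["Acme", "Globex", "Initech"]))
```

Track these shares over time, per question and per model. The result is a rough trend line showing whether your content changes are being picked up.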

AEO isn’t just a new SEO tactic. It’s a full-stack transformation in how digital presence is earned. The best marketers will meet LLMs halfway: structure content in ways that are helpful, precise, and instantly parsable. As chat interfaces become the dominant way we navigate information, the winners won’t just show up on Google — they’ll be name-dropped by AI.

A few years ago, I heard a story that stuck with me. Tamara Auer gave a talk at a Microsoft education summit during the Steve Ballmer era. Executives spent most of their time fixated on one thing: “how to beat Apple.” A few months later, she spoke at an Apple education summit. The difference? Apple didn’t mention competitors once. Every session focused on helping teachers teach and students learn. After the event, she got in a taxi with an Apple exec and decided to poke fun, telling him Microsoft gave her a Zune and joking it was better than her iPod Touch. The Apple exec shrugged and said, “I have no doubt.” He didn’t care what Microsoft was doing. Apple, back then, didn’t chase competitors — it chased innovation.

Apple has been a generational inspiration. It made design beautiful, computing simple, and technology feel human. But that sense of mission seems to have disappeared from its releases over the last few years. Today, the conversation is about Apple falling behind. They missed the foundational AI wave, despite having one of the largest cash stockpiles on the planet. They still haven’t figured out how to run a top-tier LLM natively on-device. Siri still sucks. Apple Music still doesn't have AI recommendations. Instead of creating new product categories or solving real problems, they’re perfecting margins and tweaking specs.

And now, they’re working on a foldable iPhone. Who the hell wants their iPhone to fold? If I wanted a bigger screen, I’d buy an iPad. It’s not innovation — it’s feature parity. Meanwhile, Apple has ignored obvious opportunities. What about a high-end digital camera? A true gaming Mac? These are real product gaps, but instead we’re getting curved hinges and titanium finishes. It feels like a company with world-class engineering, but no compass.

Apple isn’t dead. But something inside is rotting. And if they don’t cut it out, it will spread. I hope they turn things around — not because I want to criticize them, but because I love the company. I want them to succeed. I want the next generation to feel about Apple the way I did. But right now, it’s hard to shake the feeling that they’ve bitten the poison apple — and they don’t even realize it.

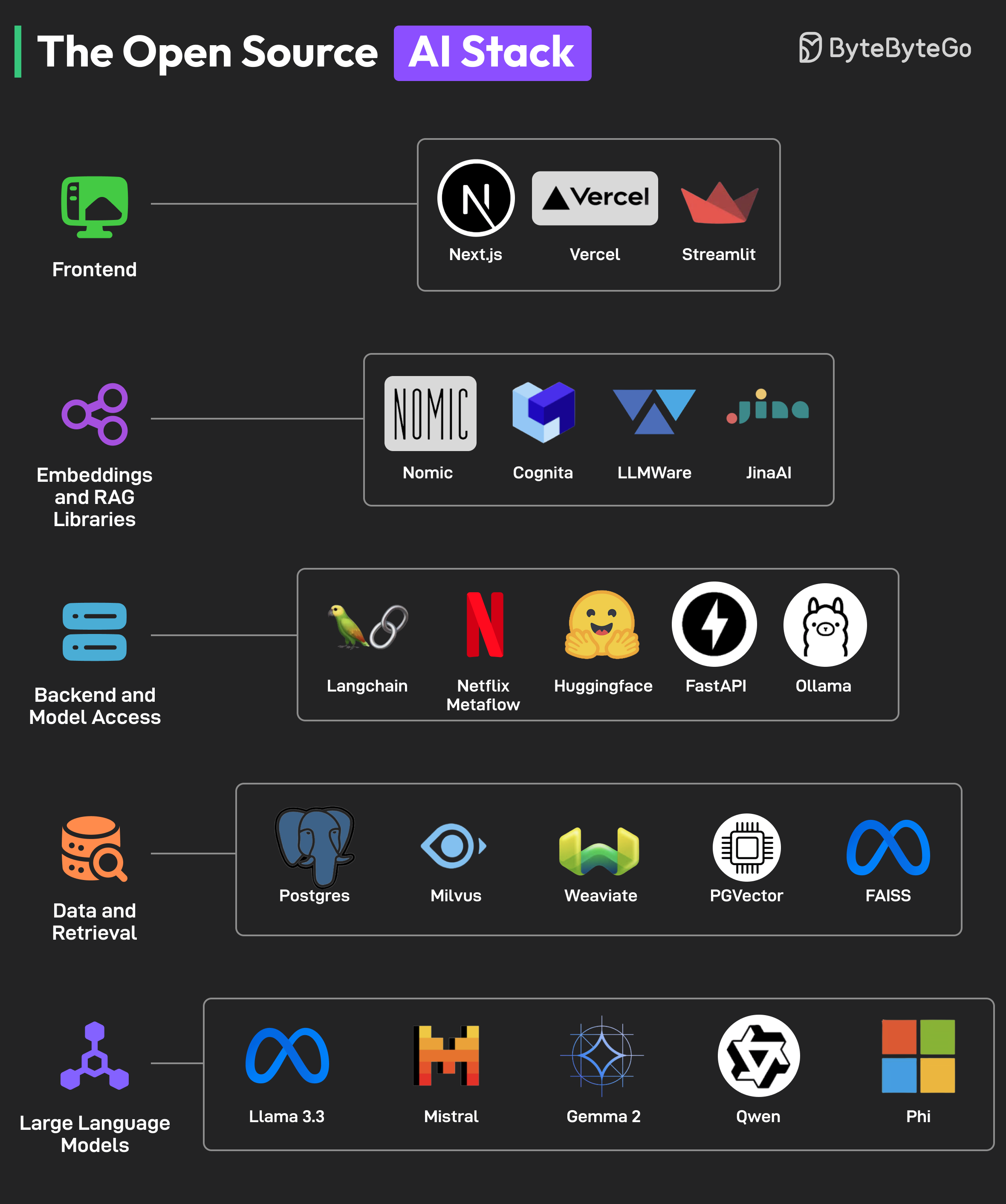

A large language model (LLM) is a type of AI that can understand and generate human language. When it’s open source, that means anyone can see how it works, download it, and use it for free. Think of it like the difference between a secret recipe (closed) and one that’s shared with everyone (open). People might want to use open source LLMs so they can customize them, run them privately, or avoid paying for expensive tools like ChatGPT. It also gives you full control — perfect for developers, hobbyists, or students who want to learn and build.

Open source LLMs come in all shapes and sizes. Some are small enough to run on a laptop, while others need a powerful graphics card or cloud server. You can find them on sites like Hugging Face, GitHub, or platforms like Google’s Vertex AI Model Garden. To use them, you usually need some basic knowledge of Python, access to a computer with a decent GPU (or cloud tools like Google Colab or Vertex AI), and a way to load and interact with the model — usually through a simple script or API.
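You can get a quick sense of whether a model fits on a laptop by estimating the memory its weights need: parameter count times bytes per parameter. The sketch below uses that rule of thumb. The 20% overhead for activations and working memory is an assumption, and real usage varies with context length and software. The parameter counts (about 1.1 billion for TinyLlama and about 7.3 billion for Mistral 7B) are approximate.

```python
# Rule of thumb: weight memory = parameters x bytes per parameter.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}
OVERHEAD = 1.2  # assumed ~20% extra for activations and working memory


def estimate_memory_gb(params_billion: float, precision: str = "fp16") -> float:
    """Approximate memory (decimal GB) needed to load and run a model."""
    bytes_total = params_billion * 1e9 * BYTES_PER_PARAM[precision] * OVERHEAD
    return bytes_total / 1e9


if __name__ == "__main__":
    # Approximate parameter counts, in billions.
    models = {"TinyLlama 1.1B": 1.1, "Mistral 7B": 7.3}
    for name, size in models.items():
        for precision in ("fp16", "int4"):
            print(f"{name:<15} {precision}: ~{estimate_memory_gb(size, precision):.1f} GB")
```

By this estimate, Mistral 7B at 16-bit precision needs more memory than a 16GB machine can spare. A 4-bit quantized copy fits comfortably, and TinyLlama fits at either precision.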

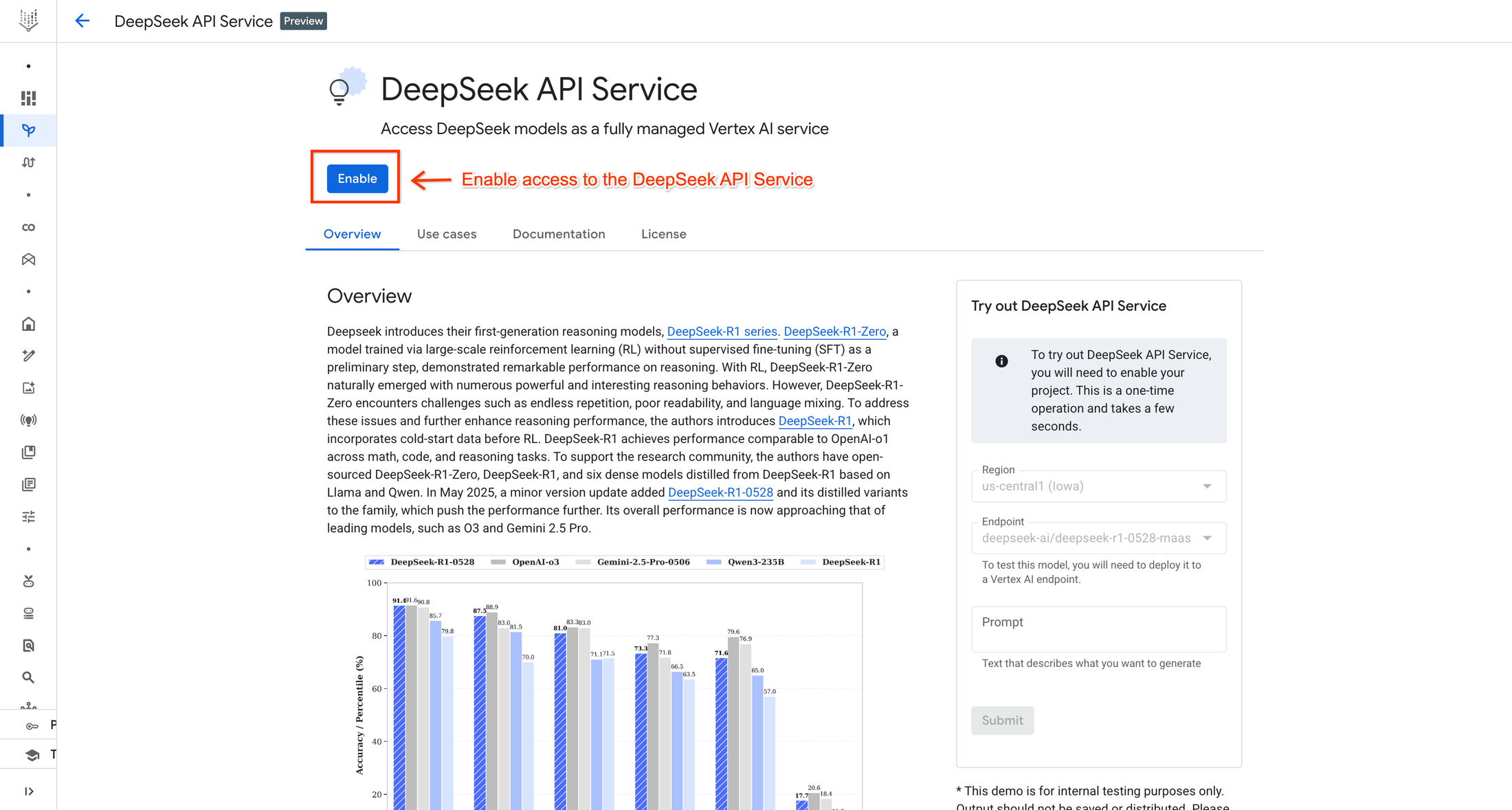

The easiest way to get started with an open source LLM is to use one that’s already hosted in the cloud, like DeepSeek R1, which is now available in Google’s Vertex AI Model Garden. Here’s how to try it out for free or cheap:

Step 1: Create a free Google Cloud account at cloud.google.com. You may get some free credits when you sign up.

Step 2: Go to the Vertex AI Model Garden and search for “DeepSeek R1.” Click on it, then choose the “Try in Notebook” or “Deploy” option. This opens a ready-to-go coding environment where everything is pre-installed.

Step 3: Use the example notebook provided to test the model. You’ll see a few lines of code that let you type in a question and get an answer. You don’t need to write much yourself — the notebook walks you through it. If you don't understand code, ask ChatGPT or Gemini what each line does.

Step 4: If you want to build something with it (like a chatbot or a summarizer), copy the code into your own Colab notebook or Google Cloud project. You can tweak the prompts or plug it into a basic website.
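Suppose you deployed the model from Model Garden to an endpoint using Step 2’s “Deploy” option. A Python call through the Vertex AI SDK (`google-cloud-aiplatform`) might then look like the sketch below. The project, region, and endpoint ID are placeholders you must replace. The request format is an assumption. Many vLLM-based Model Garden deployments accept `prompt` and `max_tokens` fields, but the example notebook for your deployment is the authority, so match its instance format.

```python
# Hypothetical placeholders: replace with your own values.
PROJECT_ID = "your-project-id"
REGION = "us-central1"
ENDPOINT_ID = "your-endpoint-id"


def build_instance(prompt: str, max_tokens: int = 512) -> dict:
    """Package one prompt in the assumed request shape (check your notebook)."""
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    return {"prompt": prompt, "max_tokens": max_tokens}


def ask(prompt: str, max_tokens: int = 512) -> str:
    """Send one prompt to the deployed endpoint and return the first prediction as text."""
    # Imported lazily so build_instance works without the SDK installed.
    # Requires: pip install google-cloud-aiplatform, plus Google Cloud authentication.
    from google.cloud import aiplatform

    aiplatform.init(project=PROJECT_ID, location=REGION)
    endpoint = aiplatform.Endpoint(ENDPOINT_ID)
    response = endpoint.predict(instances=[build_instance(prompt, max_tokens)])
    return str(response.predictions[0])
```

Once the placeholders are filled in, `print(ask("Explain what a stablecoin is in two sentences."))` sends one question and prints the raw prediction. Keeping `build_instance` separate makes it easy to adjust the request shape without touching the network code. Undeploy the endpoint when you’re done, because deployed endpoints are billed while they run.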

If you prefer to run an open source LLM on your own computer instead of the cloud, you’ll need a machine with at least 16GB of RAM and preferably an NVIDIA GPU. Download a smaller model from Hugging Face (like Mistral 7B or TinyLlama), install Python and PyTorch, and follow the setup instructions. But for most people, using Vertex AI or Google Colab is cheaper, easier, and requires less setup.
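For the local route, a minimal sketch using the Hugging Face `transformers` library and TinyLlama’s chat model might look like this. It assumes `torch` and a recent version of `transformers` are installed, one whose text-generation pipeline accepts a list of chat messages. The first run downloads the model weights, which take up a couple of gigabytes. The function names are illustrative.

```python
# Assumes: pip install torch transformers   (a recent transformers release)
MODEL_ID = "TinyLlama/TinyLlama-1.1B-Chat-v1.0"


def build_messages(question: str) -> list[dict]:
    """Wrap a question in the chat format the model's chat template expects."""
    return [
        {"role": "system", "content": "You are a concise, helpful assistant."},
        {"role": "user", "content": question},
    ]


def generate_answer(question: str, max_new_tokens: int = 200, device: int = -1) -> str:
    """Download (first run only) and run the model locally; device=-1 means CPU."""
    # Imported lazily so build_messages works even before transformers is installed.
    from transformers import pipeline

    generator = pipeline("text-generation", model=MODEL_ID, device=device)
    output = generator(build_messages(question), max_new_tokens=max_new_tokens)
    # For chat input, "generated_text" holds the full conversation;
    # the last message is the model's reply.
    return output[0]["generated_text"][-1]["content"]
```

`generate_answer("What is a stablecoin?")` returns the model’s reply as a string. To run on the first NVIDIA GPU instead of the CPU, pass `device=0`.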

Open source LLMs are getting better every month. Models like DeepSeek R1 make it possible for anyone to explore the future of AI without needing to be an expert. If you’re excited about building cool things or just want to learn how this tech works, this is a great place to start.

Right now, artificial intelligence is powerful, but expensive. The cost to train and run large AI models is so high that most companies can’t afford to experiment too far outside of business-critical needs. Investors want ROI, not curiosity. But that won’t be the case forever. As energy costs fall and the price of intelligence approaches zero, we’ll enter a world where AI isn’t just a tool for productivity — it becomes a playground for creativity, exploration, and joy.

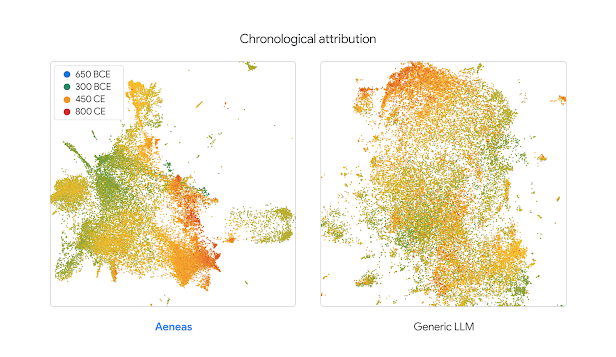

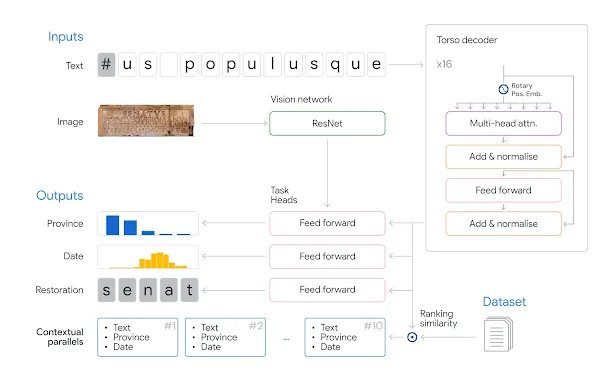

Every once in a while, though, a company finds a little room to explore. Google DeepMind recently did just that with the launch of Aeneas, a generative AI model designed to decode ancient Roman inscriptions. Trained on more than 176,000 artifacts and images, Aeneas doesn’t just read Latin. It uncovers patterns in language, geography, and historical context across the Roman world. It can even reconstruct missing portions of text, pinpoint where an inscription came from, and date it to within 13 years.

Aeneas was evaluated by 23 professional historians, and in 90% of cases, it sped up their work dramatically. Tasks that would normally take days were done in minutes. Even better, the tool doesn’t replace historians — it works with them, offering transparent insights like saliency maps and parallel matches across ancient texts. The tool is open and free to use at predictingthepast.com, with code and datasets available for further study and experimentation. It’s a small glimpse into what the future of human-AI collaboration could look like when we’re free to build for wonder instead of just efficiency.

Imagine a world where students build museum-grade tools as school projects. Where artists use ancient language models to create living, breathing histories. Where AI helps us rediscover our past, not just automate our present. We’re not there yet, but models like Aeneas show how beautiful the path can be once we start walking it with a little more freedom.

Scientists have discovered a way to transform olivine, one of Earth’s most abundant minerals, into valuable, zero-waste battery materials. Olivine is prized as the gemstone peridot but otherwise has little practical use. New Zealand engineers can now dissolve it to produce silica, magnesium, and nickel-manganese-cobalt hydroxide for lithium-ion battery cathodes, with brine as the only byproduct.

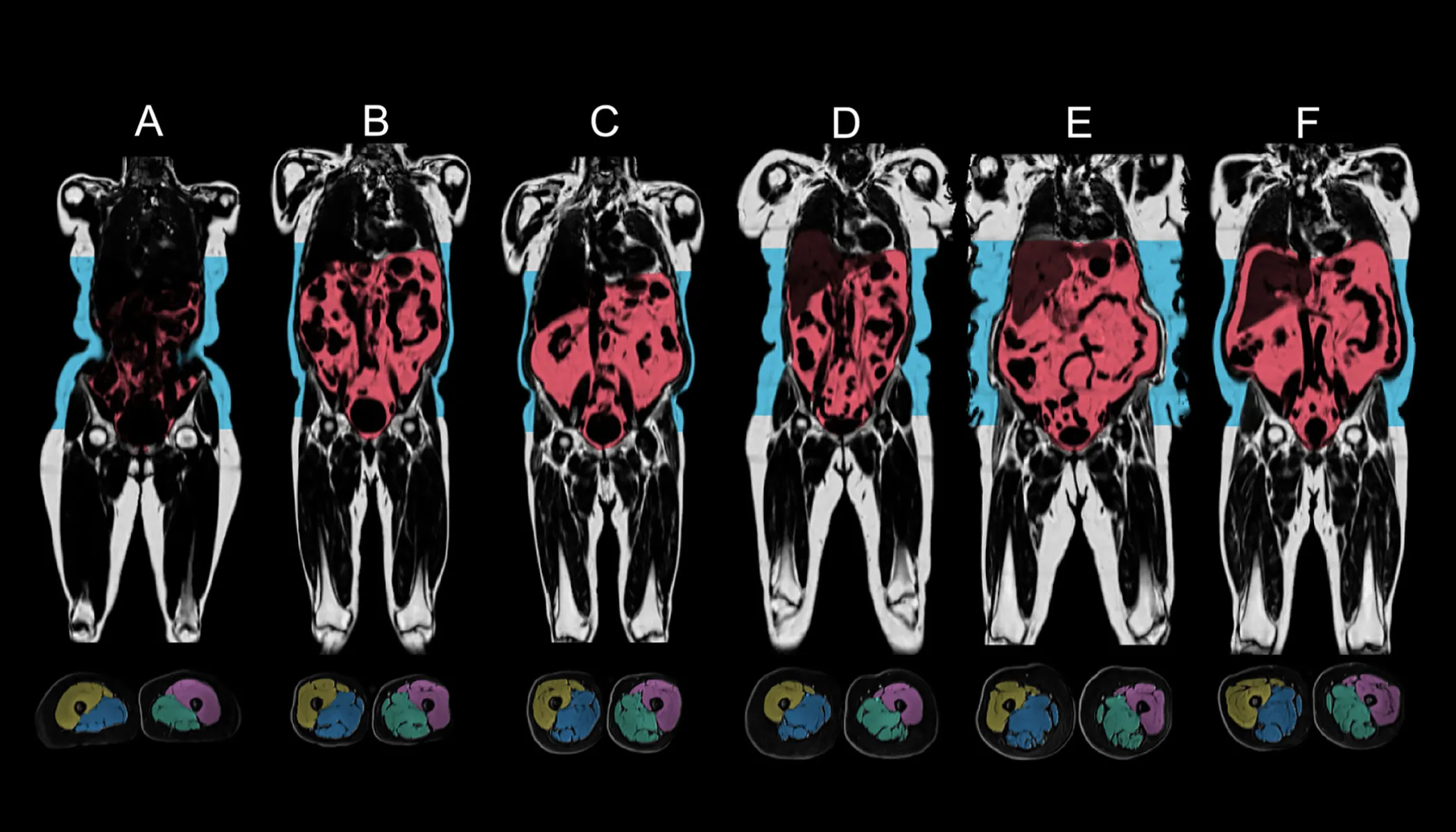

A massive collection of one billion MRI images is now available to researchers around the globe. The UK Biobank has completed a decade-long whole-body imaging initiative, gathering about 12,000 images from each of its 100,000 volunteers. Early findings suggest these scans can detect dozens of diseases years before symptoms appear, and scientists believe the dataset could revolutionize preventive medicine.

A single gene reset has enabled adult mice to regenerate damaged tissue. Chinese scientists reactivated a long-dormant vitamin A metabolism pathway, allowing the mice to fully repair ear tissue within just 30 days. The study suggests this may revive ancient regenerative programs preserved for over 300 million years.

Ozzy Osbourne has died just weeks after his farewell show set the record as the highest-grossing charity concert in history. The performance raised over $190 million for Birmingham Children’s Hospital, Acorns Children’s Hospice, and Cure Parkinson’s, and was streamed by 5.8 million viewers worldwide.

Japanese scientists have used CRISPR-Cas9 to eliminate the extra copy of chromosome 21 from affected cells in the lab—a breakthrough that could redefine future treatments for Down syndrome. This tool targets the additional chromosome while leaving the normal pair untouched, offering promise for regenerative medicine.

Doctors at the Cleveland Clinic have replaced heart valves through a tiny neck incision with robotic technology, eliminating the need for open-chest surgery. Four patients have undergone the new technique and were discharged within days, with one returning to the gym just a week later.

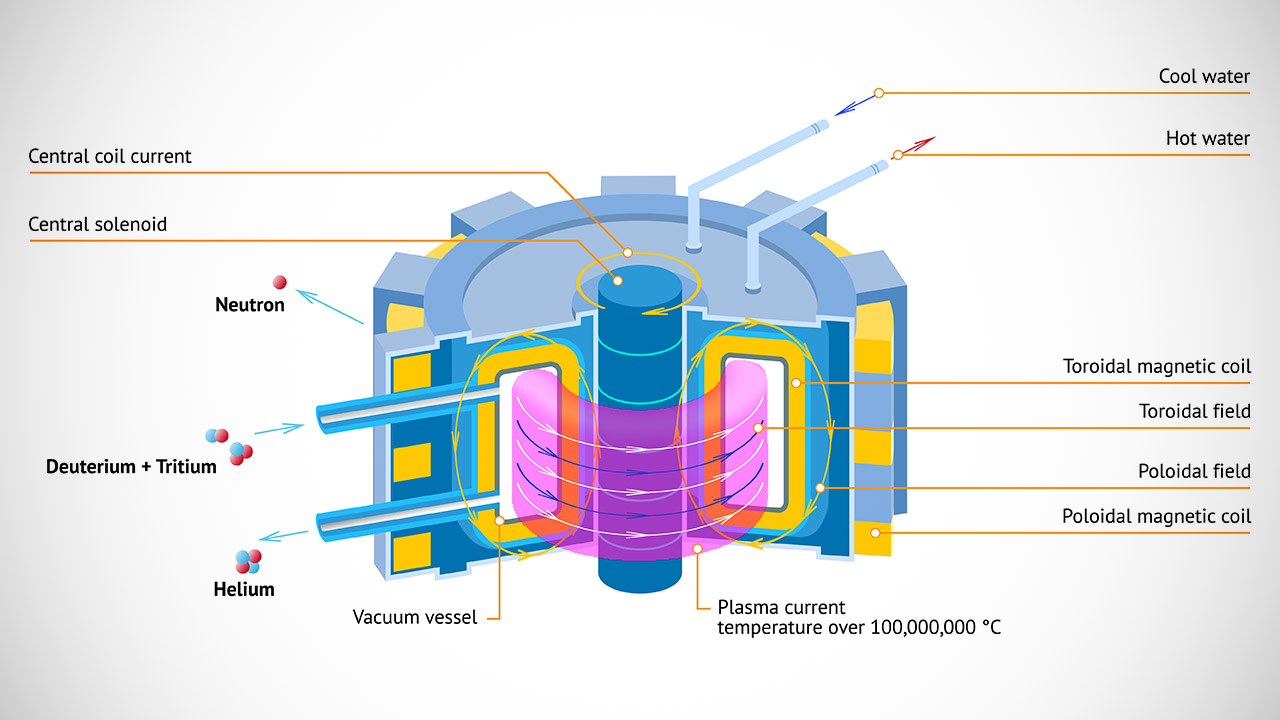

Marathon Fusion has proposed a way to use fast neutrons from fusion reactions to turn mercury into stable gold. By the company’s estimates, a single 1 GW fusion plant could yield thousands of kilograms of gold each year, potentially accelerating investment in clean energy by improving fusion’s economics.

Researchers have found that traces of tumor DNA can be detected in the blood over three years before diagnosis. This ultra-sensitive genome sequencing breakthrough could lead to blood tests that catch cancer early—giving patients a critical advantage and boosting survival rates.

Enjoying The Hillsberg Report? Share it with friends who might find it valuable!
