Quote of the week

“He who has a why to live can bear almost any how.”

- Friedrich Nietzsche

Edition 28 - July 13, 2025

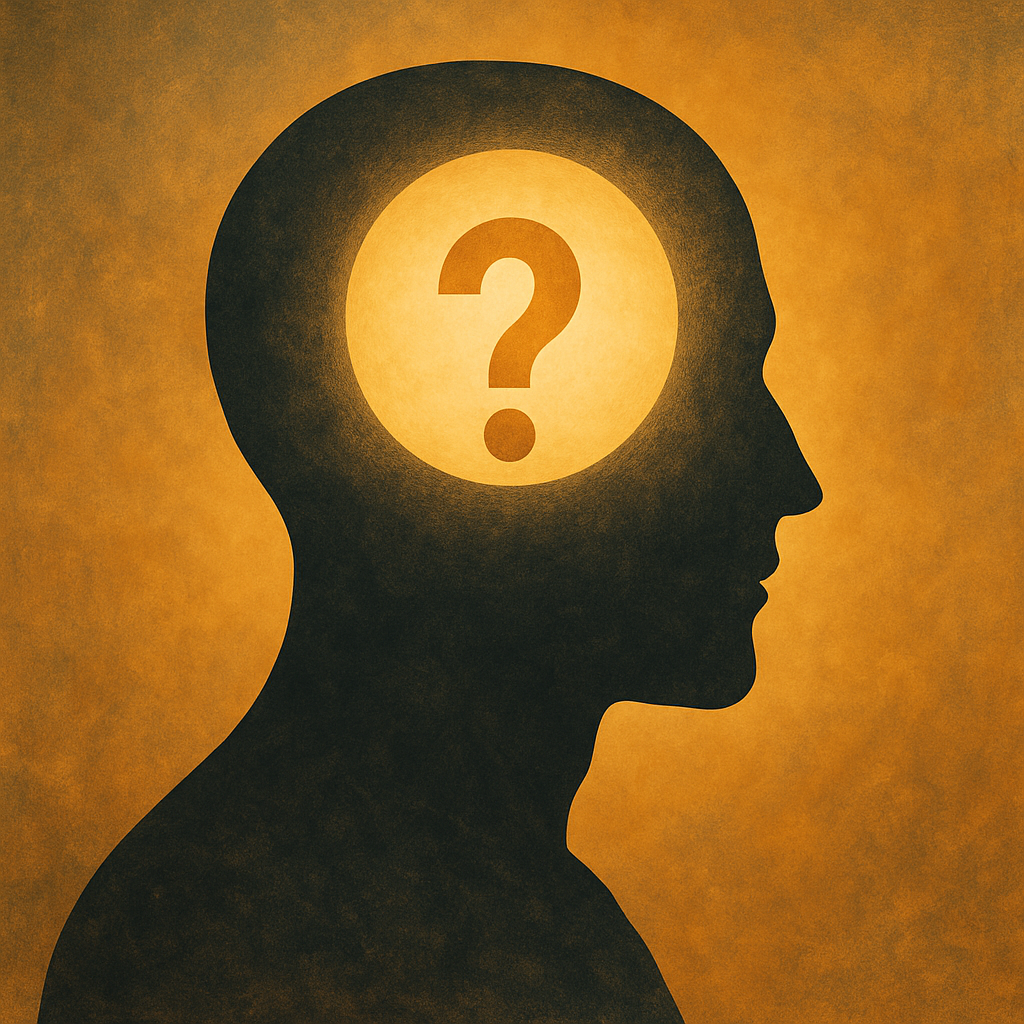

TLDR: Apple and Microsoft see AI as tools to help you work better. Meta and Anthropic want AI to do the work for you. OpenAI is straddling both. Google believes in the power of AI but is stuck between mission and monetization.

Every tech giant has its own philosophy when it comes to artificial intelligence. Some want to help you get more done. Others want to take the work off your plate entirely. And a few aren’t even sure which camp they’re in yet. Here’s a quick breakdown of where the major players stand — and where their bets might take them.

Apple is taking the slow and steady route. They believe technology should support humans, not replace them. That means AI will show up quietly as enhancements to hardware, not as a bold standalone product. Don’t expect Apple to lead in AI breakthroughs. They’re cautious, focused on tools, and apparently scaring off researchers with their lack of urgency.

Microsoft wants to make you more productive. With tools like Copilot, they’re betting on AI as a way to supercharge workflows across their massive enterprise customer base. But there’s a catch: people need to actually use the tools. Copilot has power, but it’s often underused. Plus, Copilot competes head-on with OpenAI’s ChatGPT, which is weird considering Microsoft is OpenAI’s biggest investor.

Speaking of OpenAI, they’re still primarily consumer-focused. ChatGPT is everywhere, making them the go-to name for general AI tools. But they’re clearly eyeing the agent space, which could put them in even more direct competition with Microsoft. For now, they’re the most accessible platform — but the strategy is evolving fast.

Anthropic doesn’t want to help you work better. They want to replace the work entirely. Their focus is on building software agents that automate full business functions — think call centers or repetitive office work. They don’t really care about being a household name. They care about business impact.

Meta is going all in on agents too. Their goal is to have computers act on your behalf. That means proactive, independent AI that handles tasks without waiting for instructions. They’re poaching top researchers and betting big on long-term AI dominance, even as they acknowledge the risks to attention and behavior.

And then there’s Google. They’ve been an AI-first company for years, with infrastructure and talent to back it up. But their business model is tied to search ads, and that creates a big problem — AI that gives you the right answer fast isn’t great for a model that makes money by offering options. It’s a classic case of mission versus margin, and it’s slowing them down.

This article explores how a new wave of startups is capitalizing on the rise of AI chatbots like ChatGPT and Perplexity, which are starting to replace traditional Google search as the primary way people seek information online. These startups — such as Athena, Profound, and Scrunch — are building tools to help companies understand how generative AI models source and present information about brands. Their goal is to optimize content for visibility within AI-generated search summaries, a next-gen version of SEO. This shift comes as consumers increasingly rely on chatbot answers rather than clicking on links, prompting marketers to rethink how they attract traffic and conversions.

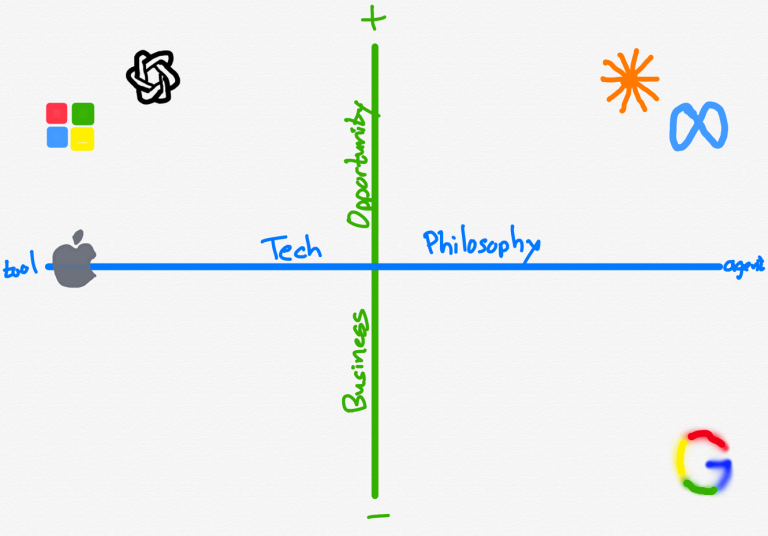

Companies like Google are also adapting, with features like AI Overviews and AI Mode summarizing results directly in the search interface. While Google maintains a dominant share of global search traffic, its core business is under pressure from AI-native experiences that reduce user reliance on link-based exploration. Startups in this space have collectively raised tens of millions of dollars and are already signing on major customers like Chime and Paperless Post. Though still early-stage, this growing ecosystem signals a broader transition to a “zero-click” internet, where bots — not humans — are the primary audience for most web content.

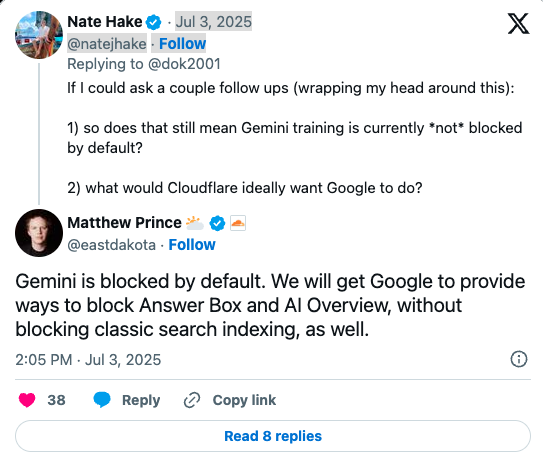

Cloudflare CEO Matthew Prince claims the company will convince Google to let website owners block AI Overviews and Answer Boxes — features that summarize content — without affecting their traditional search indexing. Currently, Google's controls like "nosnippet" hinder both AI features and standard rankings, leaving publishers with little control. Cloudflare recently began blocking AI bots by default and introduced a pay-per-crawl model, escalating pressure on AI companies. While Prince asserts confidence in reaching a solution with Google, it's unclear how or when this level of content control will materialize.

But all of this opens up bigger questions. What does the future of the internet look like when we no longer have to seek information ourselves? Is the endgame a single app that knows what we want before we do? And if so, who gets to build it? Who gets to distribute it? Businesses are already starting to rewire their content for AI readability. Will entire websites soon be written for crawlers, not humans? If AI tools are the new gatekeepers, do we still need browsers at all?

Perplexity has launched Comet, a new AI-powered web browser designed to replace traditional browsing with a more intelligent, conversational experience. Instead of juggling tabs and apps, users can interact naturally with Comet, asking it questions as they explore the web. Comet understands context, manages tasks, compares information, and even executes workflows like booking meetings or making purchases — all within a single, unified interface that adapts to the way users think. Built on Perplexity’s foundation of trustworthy information, it aims to be more than a search tool. It’s trying to become your second brain. Available now to Max subscribers via invite, Comet signals a big step toward AI-native internet navigation.

OpenAI is also preparing to launch a browser, built on Chromium and loaded with ChatGPT-like interfaces and AI agents that can handle user tasks automatically. It’s a direct shot at Google Chrome and an obvious attempt to pull users deeper into OpenAI’s ecosystem. With over 500 million weekly ChatGPT users already, they’ve got the distribution muscle. The move positions OpenAI to challenge Google not just on search, but on the entire experience of browsing itself.

But here’s the thing: do we even need a browser? Traditional browsers were built to help humans organize and navigate an ocean of links. But AI doesn’t need links. If these new interfaces are truly conversational and task-completing, then the whole concept of a browser might be outdated. What’s the point of tabbing between pages when an agent can just get you the answer or do the job?

All these companies building new browsers are just repainting the same old walls. If you really believe the future is AI-native, then stop trying to force the new world to live inside the old container. We don’t need a better browser. We need to move beyond the browser.

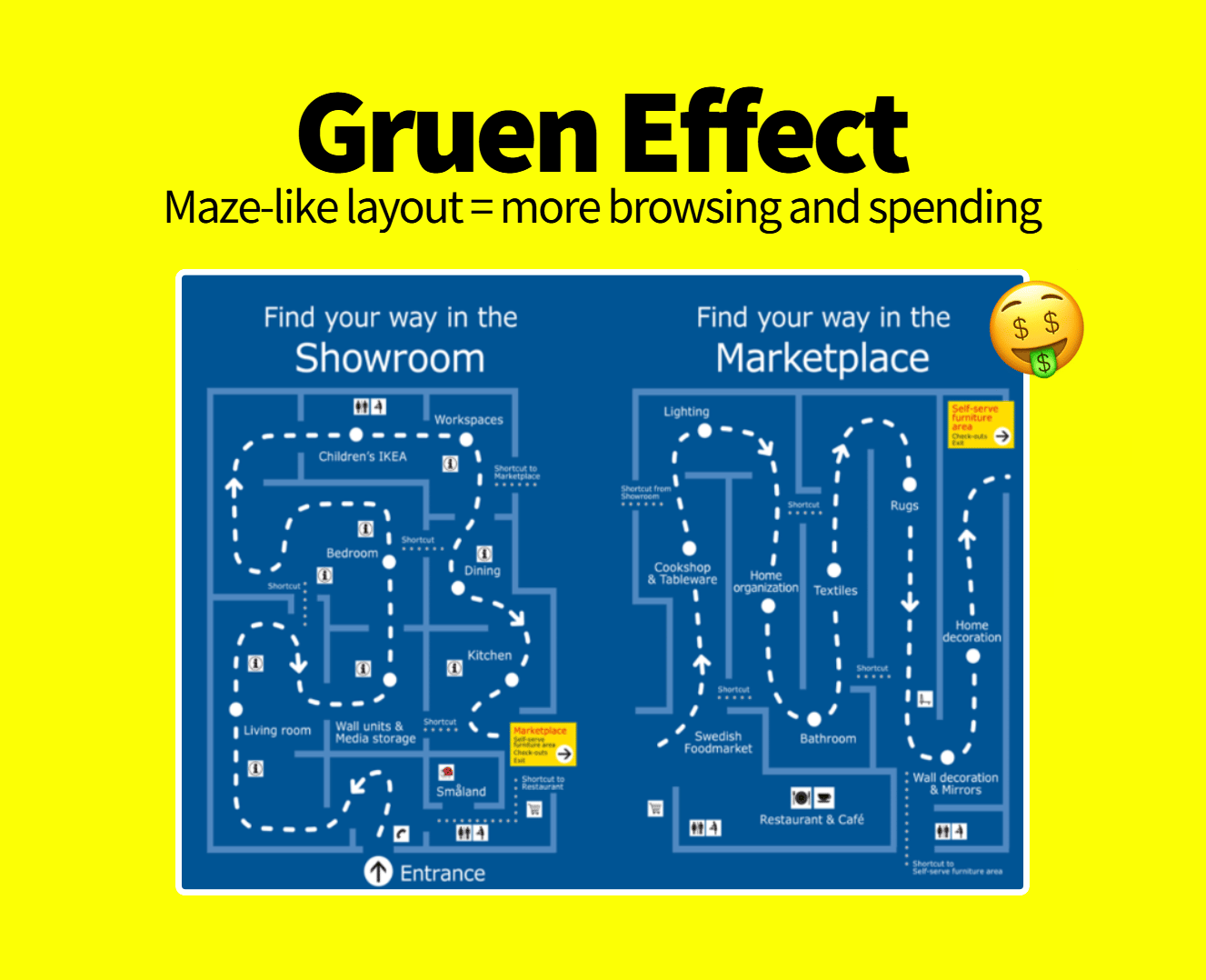

This article explains the Gruen Effect, a psychological phenomenon where shoppers make impulse purchases after being subtly disoriented by a store’s layout or sensory cues. First observed by architect Victor Gruen, the effect kicks in when environments like Target or your local grocery store disrupt your plan-following mindset. The result? You end up exploring aisles you never meant to visit and buying things you didn’t come for. Over 50% of purchases fall into this unplanned category — and yes, that includes the third candle you didn’t need.

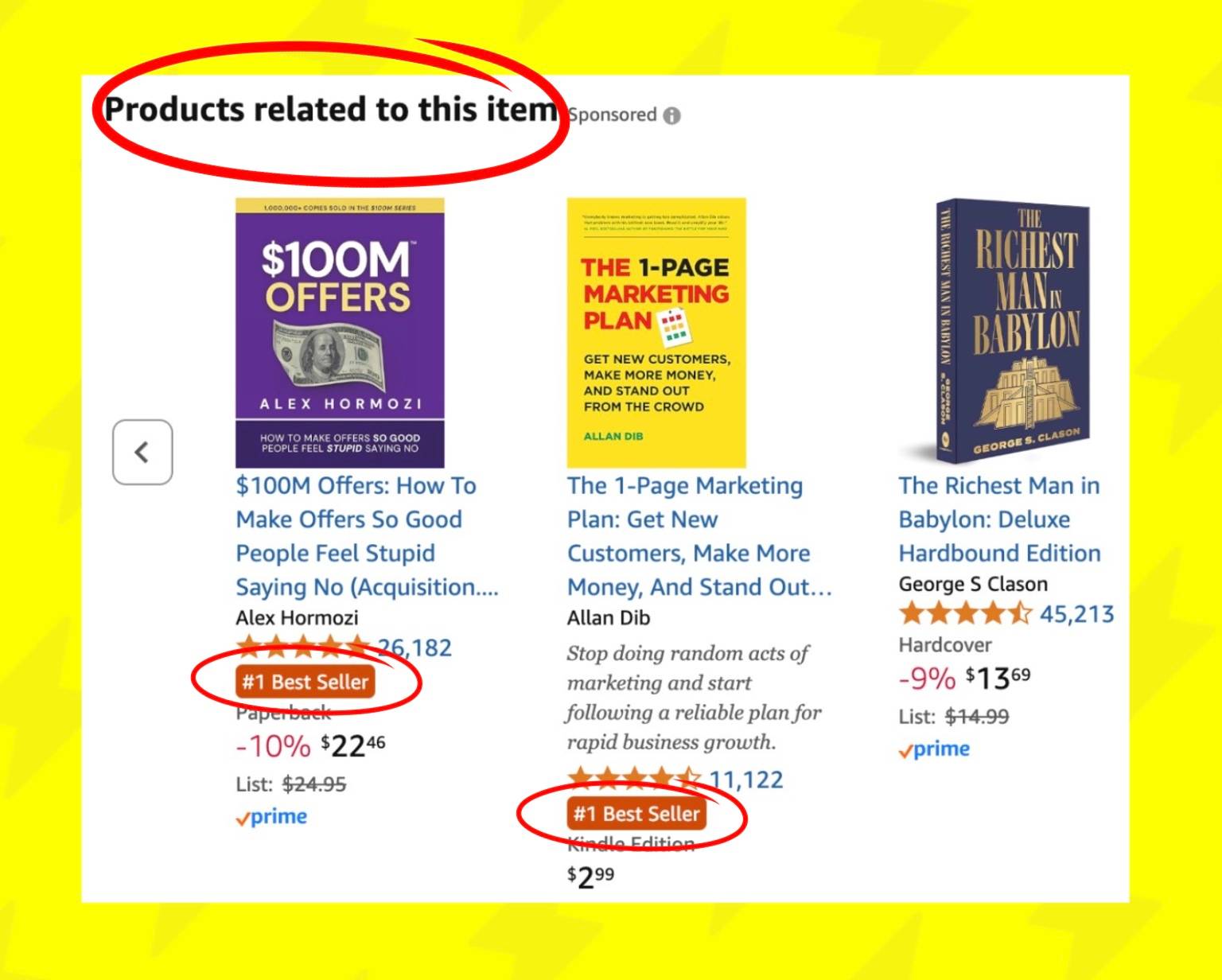

Businesses use the same playbook online. eCommerce brands add last-minute cross-sells (like Branch Basics). Amazon floods you with “related items” tagged with urgency. Even SaaS tools like Notion throw in surprise upgrades or features mid-use. Done well, this tactic introduces customers to things they actually want — just hadn’t thought about yet.

My latest run-in with the Gruen Effect was at Ikea. My wife and I went in for dressers and had to march halfway across Scandinavia to find them. On the way out, we tried to follow the shortcut signs like normal, logical adults. Somehow, we ended up back in office chairs...twice. At one point, I was positive we’d been cursed to wander the lighting section forever. So yes, the Gruen Effect is real. Just make sure it doesn’t turn into a maze that makes people regret stepping inside.

In a recent episode of the My First Million podcast, SaaStr founder Jason Lemkin laid out 10 insights on how AI is reshaping SaaS and B2B. He talked about the rise of AI clones — like the SaaStr AI trained on his own content — that often outperform their human originals thanks to perfect memory and broader context. People also confide more in AI than they do in real advisors or coaches, which opens up new business opportunities. Interfaces are shifting too: conversational, AI-first tools are taking over, and getting to $1M in ARR is now more doable than ever. The hard part is staying there.

Lemkin didn’t hold back. He said ghostwriters, BDRs, and designers are already being replaced. SaaStr, for example, brings in $25M with just five employees. AI talent is impossible to retain, young people want nothing to do with traditional jobs, and VCs are placing $100B bets. Content without AI? Dead. Investing in AI? Total fog of war. His takeaway: this isn’t a minor change. It’s the biggest shake-up in knowledge work since the internet. Listen or read the summary here.

10 Key Takeaways:

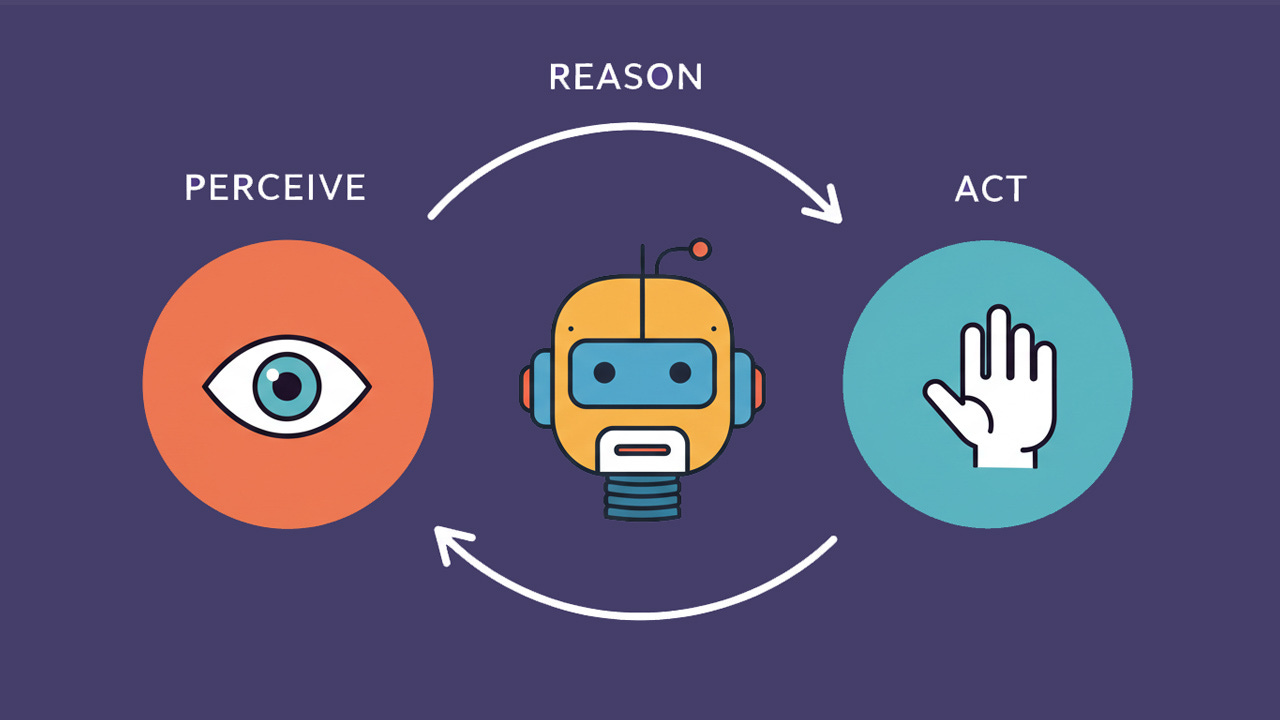

The article "What can agents actually do?" gives one of the best no-nonsense breakdowns of AI agents I’ve seen so far. Instead of hyping them up as magic solutions, the author focuses on how they really work: they evaluate context, call tools when needed, manage those tools with smart flow control, and operate like well-built software. In other words, agents are not silver bullets. They're extensions of product design. If your systems are sloppy, agents will just amplify the mess. But if your systems are tight, agents can scale them beautifully.

The article walks through clear examples like customer service and incident triage, showing that agents need more than just an LLM — they need logic, permissioning, monitoring, and human oversight. They’re not one-click automations. They’re software products that need to be built and maintained. The punchline? Don’t expect agents to replace good design. Expect them to reward it. If you treat them as shortcuts, they’ll make everything worse. If you treat them as systems, they’ll give you superpowers.
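To make that concrete, here is a minimal sketch of the loop described above: read the request, pick a tool, check permissions, log what happened, and hand off to a human when needed. Everything in it is hypothetical. The tool names, the `Agent` class, and the keyword-matching `decide` step (a stand-in for the LLM call) are my own illustration, not code from the article.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable, Optional


# Hypothetical tools: stand-ins for real integrations.
def lookup_order(order_id: str) -> str:
    return f"Order {order_id}: shipped"


def issue_refund(order_id: str) -> str:
    return f"Refund issued for order {order_id}"


@dataclass
class Agent:
    tools: dict[str, Callable[[str], str]]
    needs_approval: set[str]  # permissioning: tools gated behind a human
    log: list[str] = field(default_factory=list)  # minimal monitoring

    def decide(self, request: str) -> Optional[str]:
        """Stand-in for the LLM step: map the request to a tool name."""
        text = request.lower()
        if "refund" in text:
            return "issue_refund"
        if "where" in text or "status" in text:
            return "lookup_order"
        return None

    def handle(self, request: str, order_id: str, approved: bool = False) -> str:
        tool = self.decide(request)
        if tool is None:
            # Flow control: nothing fits, so escalate instead of guessing.
            self.log.append("escalated: no matching tool")
            return "Escalated to a human agent"
        if tool in self.needs_approval and not approved:
            # Human oversight: risky actions wait for sign-off.
            self.log.append(f"blocked: {tool} needs approval")
            return "Waiting for human approval"
        result = self.tools[tool](order_id)
        self.log.append(f"called: {tool}")
        return result


agent = Agent(
    tools={"lookup_order": lookup_order, "issue_refund": issue_refund},
    needs_approval={"issue_refund"},
)

print(agent.handle("Where is my order?", "A1"))
print(agent.handle("I want a refund", "A1"))
print(agent.handle("I want a refund", "A1", approved=True))
```

Notice how little of this is the "AI" part. Swap `decide` for a real model call and the rest (the tool registry, the approval gate, the log) is ordinary software that still has to be designed and maintained.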

So where can agents actually shine? In healthcare, they could manage pre-visit intake and post-visit follow-ups — collecting patient symptoms, scheduling tests, summarizing doctor notes, and reminding patients of next steps. In finance, an agent could triage incoming expense reports or financial disclosures, flag anomalies, and trigger review workflows based on thresholds or internal rules. In HR, agents could handle job applicant screening, follow-up communications, and onboarding tasks like setting up system access or explaining benefits.

These are not science fiction. These are boring, operationally critical workflows that take up real time and money. Done right, agents won’t look flashy. They’ll look invisible — and that’s the whole point.
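The finance example above is a good illustration of how unglamorous this is. A rough sketch of threshold-based expense triage might look like the following. The category limits, the 2x escalation rule, and the `Expense` and `triage` names are assumptions I made up for illustration, not anyone's real policy.

```python
from dataclasses import dataclass


@dataclass
class Expense:
    employee: str
    category: str
    amount: float


# Hypothetical per-category policy limits, in dollars.
LIMITS = {"meals": 75.0, "travel": 1500.0, "software": 500.0}


def triage(expense: Expense) -> str:
    """Route an expense to 'approve', 'review', or 'escalate'."""
    limit = LIMITS.get(expense.category)
    if limit is None:
        return "review"  # unknown category: a person should look at it
    if expense.amount > 2 * limit:
        return "escalate"  # far over the limit: trigger the heavier workflow
    if expense.amount > limit:
        return "review"  # modestly over the limit: flag as an anomaly
    return "approve"


print(triage(Expense("sam", "meals", 40.0)))
print(triage(Expense("sam", "meals", 100.0)))
print(triage(Expense("sam", "meals", 200.0)))
```

An agent adds value around rules like these, by reading messy receipts into clean `Expense` records and explaining flags to reviewers, but the rules themselves stay explicit and auditable.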

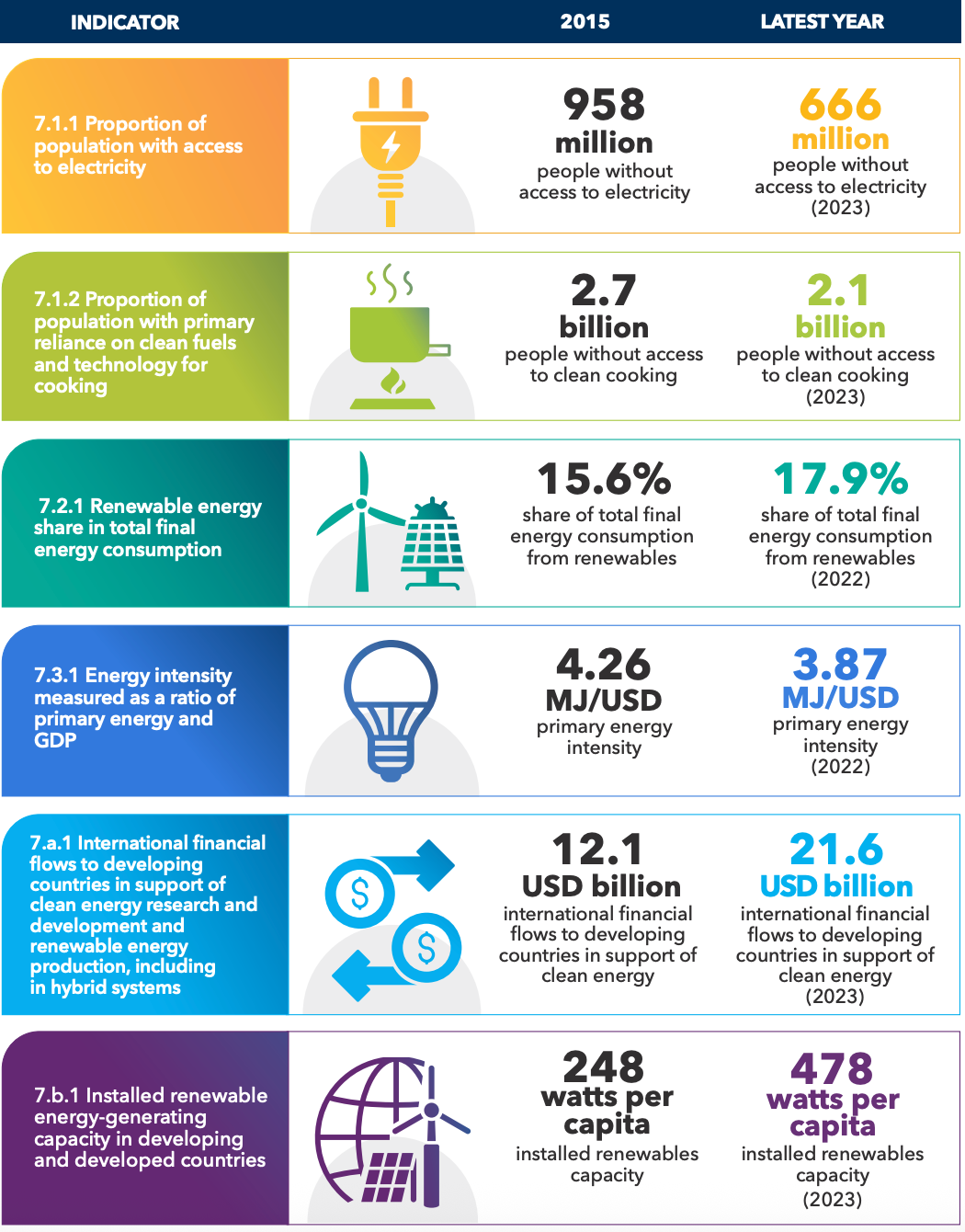

In the past decade, 292 million more people have gained access to electricity — even as the global population grew by about 760 million. Today, 92% of the world has electricity, up from 87% in 2010. Much of this progress is being powered by renewable energy, and hundreds of millions no longer rely on open fires for cooking.

A Maryland startup is set to begin producing “superwood” panels next month — compressed pine boards that are lighter than titanium, stronger than steel, and have just one-third the embodied carbon of concrete. Backed by $50 million in funding, including a U.S. Energy Department grant, the company will first target the façade and door markets before pursuing structural certification.

Paris has reopened the Seine for swimming after a €1.4 billion cleanup, ending a 102-year ban. Three free public bathing areas now welcome up to 1,000 swimmers daily, made possible by upgraded sewers, rainwater reservoirs, and daily water-quality testing introduced ahead of the Olympics. Fourteen more sites along the Seine and Marne are in the works, and President Macron has promised to take a swim himself.

Atmospheric water harvesters — capable of pulling up to 20 liters of water from the air each day — are moving from the lab to production lines next year. Enabled by advances in metal-organic frameworks and biomass hydrogels, these devices are designed for communities lacking reliable water access and are now being certified for household use.

Alphabet’s Isomorphic Labs, a spinout from DeepMind, is preparing for its first human trials of AI-designed drugs, aiming to revolutionize pharmaceutical development by making it faster, cheaper, and more precise. Emerging from the success of AlphaFold, which predicts protein structures with high accuracy, the company has paired AI researchers with experienced pharmaceutical professionals to tackle complex diseases like cancer. Backed by a $600 million funding round in April 2025 led by Thrive Capital, Isomorphic is now staffing up and preparing to move its AI-created compounds from lab development into clinical testing.

The U.S. Department of Energy is moving forward with testing ultra-compact nuclear microreactors, selecting Westinghouse’s eVinci and Radiant’s Kaleidos units for its new DOME facility at Idaho National Laboratory. These trailer-sized reactors, producing just 5 MW and 1.2 MW respectively, are designed to power remote sites like data centers or replace diesel generators, operating for years without refueling. Though still awaiting regulatory certification, the upcoming tests mark the first fueled experiments for microreactors and aim to gather data to support eventual commercialization. DOME is set to begin operations in early 2026.

Scientists at ETH Zurich have developed a groundbreaking CRISPR method that allows simultaneous editing of dozens of genes, enabling large-scale reprogramming of cells. Unlike traditional CRISPR techniques that typically edit one gene at a time, this new approach, which uses the Cas12a enzyme instead of Cas9, can target at least 25 gene sites at once, with potential for hundreds. By designing a custom plasmid containing multiple short RNA address sequences, the researchers achieved precise, multi-gene targeting in a single step. This advance opens new possibilities for exploring complex gene networks and treating multifactorial diseases.

YouTube is tightening its monetization rules starting July 15, 2025, aiming to shut the door on repetitive, low-quality content — much of it AI-generated. The platform is updating its Partner Program to make it clear that mass-produced spam and misleading AI videos won't earn money. The goal is to fight back against the flood of fake news clips, deepfakes, and AI-narrated garbage that’s been polluting the feed. Creators panicked that legitimate formats like reaction videos might get caught in the crossfire, but YouTube’s Rene Ritchie assured users that authentic content is safe. The new policy is focused squarely on the worst offenders clogging up the ecosystem.

The timing isn’t random. AI-generated scams and fake news videos are exploding, some even impersonating YouTube’s own CEO. This isn’t just a quality issue. It’s about trust, credibility, and keeping the advertising machine intact. YouTube’s trying to clean house before things spiral — and before regulators come knocking.

I think tagging all AI-generated content is critical. A few months ago, I wrote in this newsletter about how China is ahead of the curve here. They’re requiring all AI-generated content to be labeled. We should do the same. Audio, video, and images are easy: you can embed a signal humans can’t detect but machines can. The real problem is text. There’s no foolproof way to tell if an article or comment was written by a bot or a person unless it’s run through a checker. But that’s not a scalable approach.

Maybe the answer is something closer to what ARIA labels did for accessibility — built-in browser mechanisms that automatically flag text as AI-generated at the source. It wouldn’t solve everything, but it could at least start giving people a clue when they’re not reading something written by a human. We need infrastructure-level thinking, not just policy tweaks. Because right now, it’s still way too easy to flood the internet with convincing nonsense.

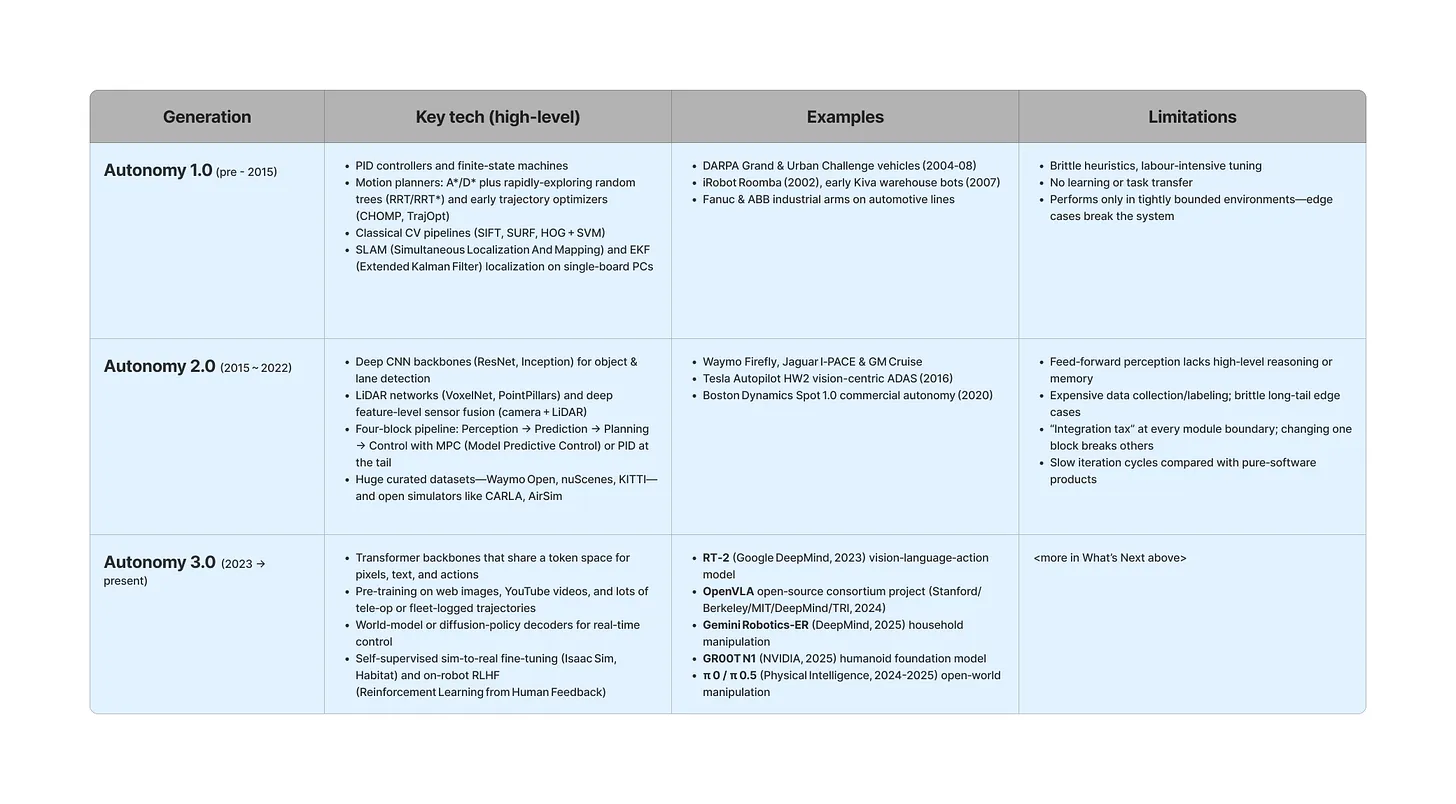

The robotics world is approaching its own “ChatGPT moment” — a point where general-purpose robots start doing useful things with almost no friction. What once required massive teams of engineers now just needs a good model and a webcam. Foundation models like Physical Intelligence’s π-0.5 are making it possible for robots to reason, perceive, and act in the physical world using unified transformer architectures. And these aren’t one-off demos. They’re starting to generalize across tasks, learn faster, and actually improve over time.

We’ve moved through the first two generations of robot autonomy — rigid rule-based bots and brittle deep-learning systems — and are now in Autonomy 3.0: vision-language-action models that can interpret, adapt, and execute. Autonomy 4.0 will bring fleets of robots working together, operating seamlessly with people, and learning collectively. But we’re not there yet. Problems like cognition bottlenecks, bad sim-to-real transfer, and unreliable hardware still slow us down. The real breakthroughs will come from startups solving annoying but valuable problems — like unloading a truck — rather than chasing only the humanoid hype. Tesla might win thanks to its scale. China’s hardware edge means it’ll be a major player, at the very least in providing critical parts. The West leads in embodied intelligence for now.

So what does life actually look like once we get there? Imagine Jalen, a warehouse supervisor in 2035. He walks into work, waves to a row of bots already halfway through restocking pallets. One of them adjusts its path slightly to avoid a coffee spill. In the breakroom, his home assistant pings to remind him the fridge filter needs replacing and the dishes have been washed. These robots won’t be flashy. They won’t be marketed with celebrity demos. They’ll just be there, doing stuff. Quietly useful, until we look up and realize the world has changed.

Amazon Web Services is launching a new AI agent marketplace on July 15 at the AWS Summit in New York, with Anthropic as a major partner. The platform will let developers list their AI agents for enterprises to browse, buy, and deploy, just like you would with SaaS tools. AWS will take a small cut, and Anthropic gets even deeper into the Amazon ecosystem. It’s also a direct shot at similar marketplaces from Google, Microsoft, and Salesforce, which have already started carving out territory in the space.

This is a big deal. The original AWS Marketplace turned into a serious revenue stream for startups that got in early and delivered real value. This new agent marketplace is the next iteration of that model. If you run a SaaS business, build a useful AI agent that mirrors your core functionality — support, triage, data entry, whatever — and list it. The companies that move fast and plant their flag now are going to be the ones with compounding returns later.

Enjoying The Hillsberg Report? Share it with friends who might find it valuable!
