Quote of the week

“Tomorrow hopes we have learned something from yesterday.”

— John Wayne

Edition 25 - June 22, 2025

Let me start by saying: I’m not a robotics expert. I don’t know how to build a robot, and I’ve never worked on one. I couldn’t tell you how efficient their motors are or what kind of sensors they use to map a room. But I do know tech. And I’ve seen how talent moves when the ground starts to shift beneath it.

Here’s what I think is happening: the traditional tech world, especially SaaS, is being disrupted in a big way by LLMs. Roles are changing. Teams are shrinking. Entire product categories are being automated or absorbed. At the same time, a massive new frontier is opening up: robotics. Not just hardware, but the software that powers it. The migration of talent from traditional tech into robotics is slowly beginning. And it might be the best thing that could happen for tech workers.

SaaS won’t vanish overnight. Some companies will stay strong. Some will become profitable cash cows. But the appetite for new SaaS tools is fading. Meanwhile, robotics startups are building fast. And they’ll need software: to control humanoids, manage industrial workflows, store and retrieve context, apply rules, troubleshoot failures, and scale across use cases. This is not a small shift. It’s an industry reset.

The disruption caused by LLMs is forcing a reallocation of talent. People who once built internal dashboards or onboarding flows will now design safety protocols for care robots or control loops for autonomous tractors. The work will feel harder. But it’ll also feel more meaningful. And the best talent will follow that feeling.

My prediction: over the next 3–5 years, talent will shift on a large scale from traditional tech companies to robotics, where demand for that talent will grow alongside the adoption curve of robots. Most people still don’t understand how much things are going to change.

Amazon’s CEO told corporate teams to expect smaller headcounts as AI rolls out. The message was clear. This isn’t a maybe. This is happening. And the reason isn’t just cost. It’s about using AI to make teams more efficient.

But incentives matter. When leaders frame AI as a way to cut jobs, employees start making calculations. If using AI means making myself replaceable, why would I help? Some might ignore the tools. Some might slow-roll adoption. Others will only use AI where it’s visible. That’s not resistance. That’s rational self-preservation.

This puts companies in a tough spot. If your people don’t feel safe, you won’t get good data. You won’t hear what’s broken. You won’t know where AI actually helps. And when adoption is shallow, your investment doesn’t pay off.

Leaders need to think hard about how they introduce this shift. Not just the tools, but the rollout. Show people how AI helps them, not just the company. Give them a reason to explore, not fear. Create wins and share them. Let people lead the change from the inside. As a leader, you should map out your company goals and your current incentives, and make sure the incentives don’t work against the goals.

It’s easy to talk about AI as the future. But if your own incentives trip you up, you won’t get there. Not because the tech failed, but because your people never had a reason to care.

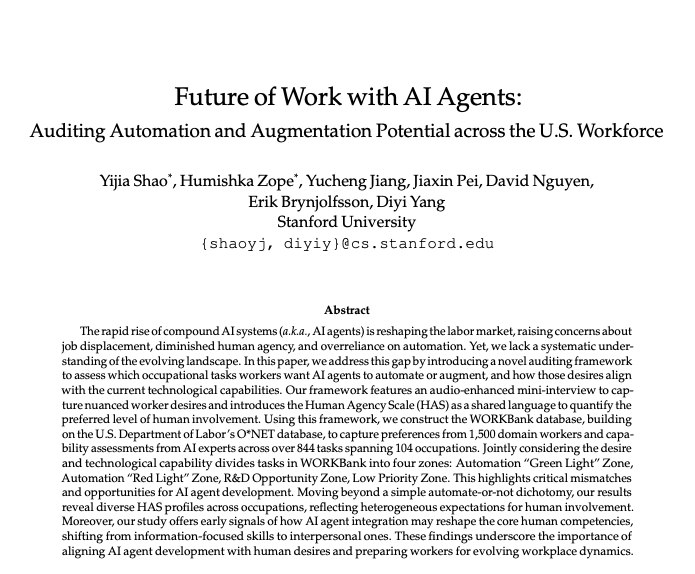

A new research paper, “Future of Work with AI Agents,” proposes a groundbreaking framework for evaluating which tasks across the U.S. workforce should be automated or augmented by artificial intelligence. Rather than focusing solely on technical feasibility, the study centers workers themselves — surveying 1,500 individuals across 104 occupations to understand what they want AI to do. These preferences were then audited by AI experts to determine whether today's technology can realistically deliver.

The study found a surprising disconnect. Workers overwhelmingly preferred that AI automate repetitive, low-skill tasks, the ones they see as tedious or time-consuming. But AI experts noted that many of those tasks remain beyond current AI capabilities, while more complex knowledge or communication-based tasks were often more automatable. The authors introduced a “Human Agency Scale” to help categorize tasks into zones, like “Green Light” for ready-to-automate tasks and “Red Light” for those that should remain human-led, for smarter decision-making.

The implications are clear: if AI deployment continues to ignore human preference, it could erode trust, lower morale, or even increase resistance to adoption. This research offers a better path — one that combines worker insight with technical assessment to guide where and how AI agents are introduced. As companies and policymakers face accelerating automation, this study suggests the future of work shouldn’t just be about what AI can do — but what people are willing to let it do.

You can read the full paper here.

For the first time, the U.S. Senate has passed a federal framework for regulating stablecoins. It’s a big step. The new bill, bipartisan and years in the making, sets clear rules for how stablecoins should be issued, backed, and redeemed.

At the core: 1:1 reserves. If a company issues a dollar-backed token, it needs to actually hold dollars or T-bills to back it. No lending those reserves out. No games. The rules also mandate audits, anti-money laundering controls, and guaranteed redemption rights for users. Both federal and state regulators will have a say in who gets to issue and how.

Some lawmakers raised flags around big tech involvement and conflicts of interest. But overall, the support was broad. The market needed guardrails, and now they’re coming. The bill moves to the House next, where it will be reconciled with a similar version.

This doesn’t settle every question about crypto regulation. But it does show that serious progress is being made — and that stablecoins are no longer operating in a legal vacuum.

The Ocean Cleanup has announced an ambitious new plan to reduce marine plastic pollution by targeting 30 of the world’s most polluted rivers. Using its Interceptor technology—belt-and-boat systems designed to scoop up waste—the organization hopes to cut plastic inflow into the ocean by one-third by 2030. Cities like Mumbai, Bangkok, and Los Angeles are among the first to benefit from this expansion, with strong backing from local governments and global philanthropic partners.

Colombia is taking a major step toward long-term peace by reopening negotiations with armed groups under its ‘total peace’ initiative. The discussions include ceasefires, rural development programs, and pathways for reintegration. While past efforts have stumbled, there’s renewed optimism that public fatigue with conflict could finally tip the scales in favor of resolution.

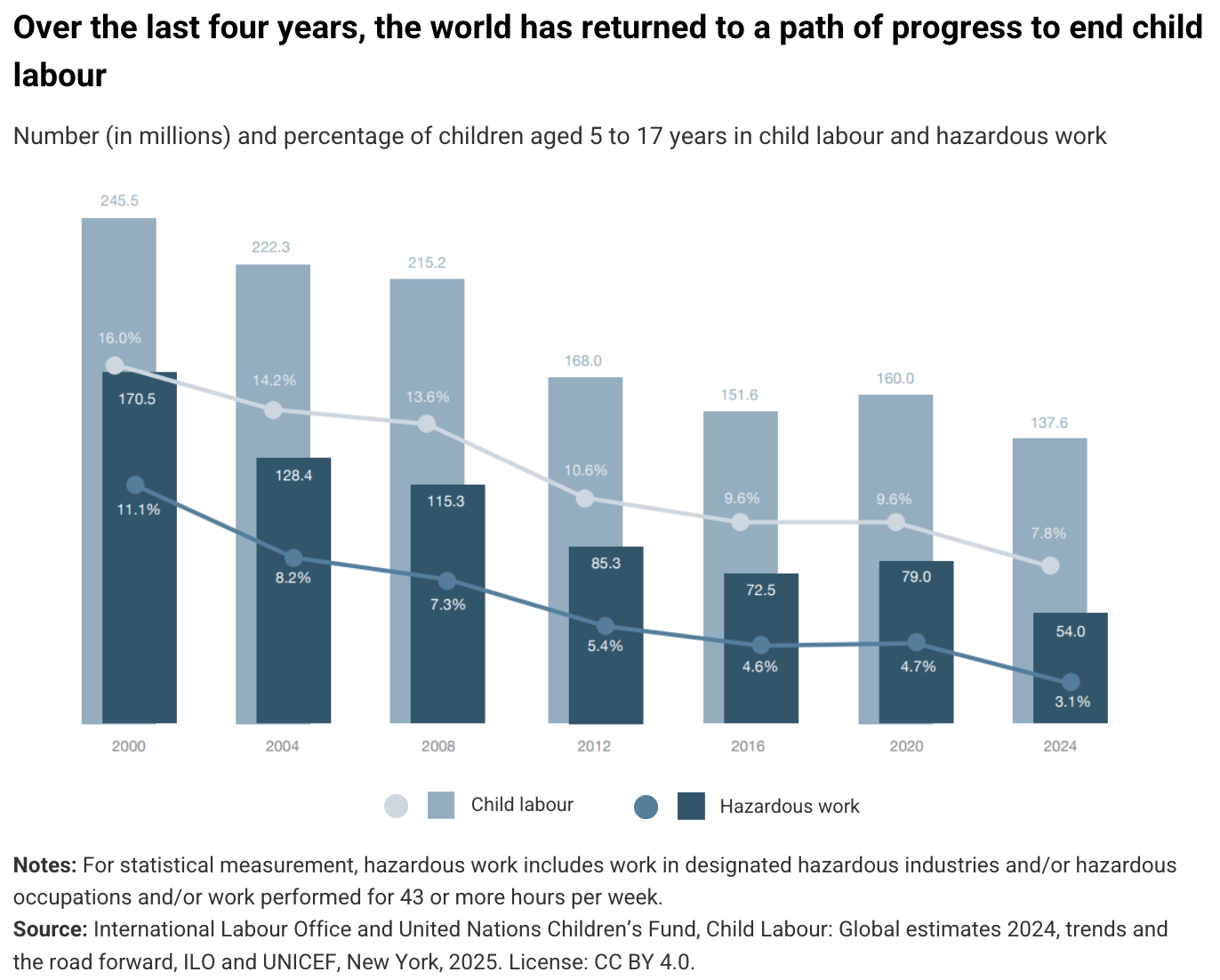

Global progress on child labor is accelerating. According to UNICEF, the percentage of children involved in labor has dropped by more than half since 2000. The decline is credited to better education access, stronger legal protections, and declining poverty rates around the world.

Researchers have found that each individual has a unique “breathprint,” tied not only to BMI but also to psychological traits like anxiety, depression, and autism. Notably, those with higher depressive tendencies exhaled faster. The finding could lead to entirely new, non-invasive screening tools for mental health conditions.

And finally, a quiet but meaningful win for animal welfare: the U.S. Navy has officially ended all medical testing involving cats and dogs. The policy shift follows years of pressure from White Coat Waste, a nonprofit working to end harmful government-funded animal experiments.

AGI stands for Artificial General Intelligence. It’s the idea of a system that can learn and reason across any domain, like a human. ASI, or Artificial Superintelligence, is the next step. It’s what happens when that system becomes smarter than us. Not just a little smarter. A lot.

But here’s the thing: none of that is real yet. What we have right now doesn’t think. It doesn’t imagine. It doesn’t wonder what it should build next. It takes input, does math, and predicts an output. Even the latest LLMs — even the ones that feel alive — are just very sophisticated autocomplete engines. It’s still just math.

In my opinion, the real leap to AGI or ASI will require something we haven’t discovered yet. A new kind of breakthrough. Something closer to neuroscience than computer science. We don’t fully understand how the brain works, let alone how to recreate it. Until we do, we’re building smarter tools, not minds.

So when people like Sam Altman say, “We’re close to AGI,” I can’t help but roll my eyes. That’s marketing. That’s investor hype. And to be clear: I’m not a scientist or AI expert. This is just a layman’s take. But it feels like we’re still in the preface of the AGI story, not the final chapter.

When the real shift comes, we’ll know. It won’t just feel like better math. It’ll feel like something new has entered the room.
