Quote of the week

“Only those who risk going too far can possibly find out how far one can go.”

— T.S. Eliot

Edition 24 - June 15, 2025

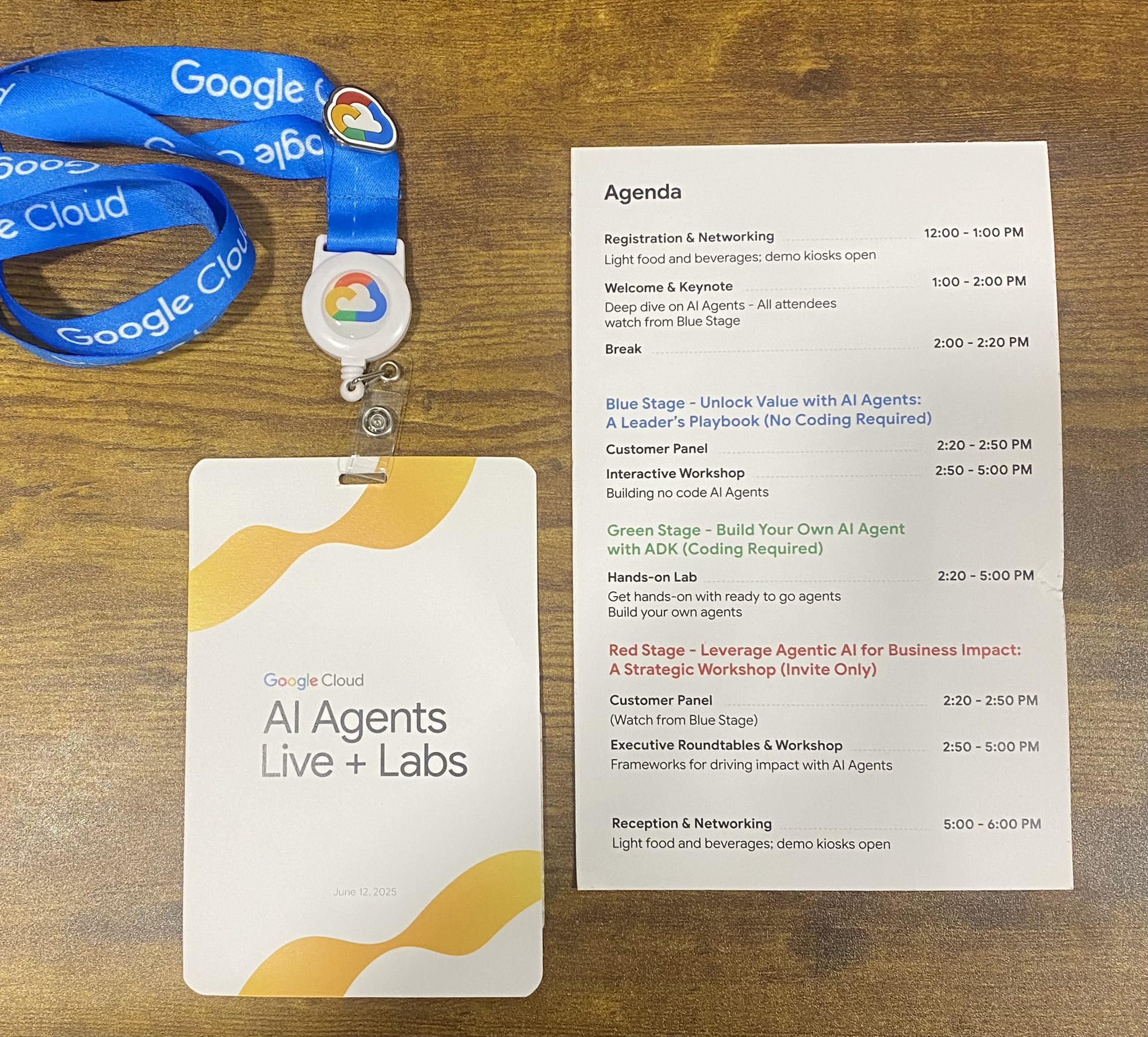

This past Thursday, I attended Google’s Cloud AI Agents Live + Labs event in NYC and one thing was crystal clear: Google is going hard after the enterprise market. While most major players are focused on improving their foundation models, Google has gone all-in on building the infrastructure and tooling around those models. If you’re a large org looking to implement AI at scale, Google is starting to look like the most comprehensive vendor in the game.

The standout announcement was Agentspace, a new agent builder platform that combines a company-specific knowledge graph, custom agent interfaces, and tight integration with Google Workspace and enterprise systems. It’s a robust, no-code, flexible environment for building internal AI tools and assistants, and it’s positioned as the employee-facing AI hub for many companies. In a market crowded with vertical search startups and fragmented agent builders, Google is unifying these functions under one roof — and it’s going to attract serious attention.

They also doubled down on MCP (Model Context Protocol), the A2A (Agent2Agent) protocol, the Agent Development Kit (ADK), and their Vertex AI suite. Together, these form a powerful ecosystem, not just for running LLMs but for deploying actual agents inside enterprise environments. Google seems to be betting that building the “model” isn’t enough. They also want to own the environment the model lives in, and they’re building every piece of that stack.

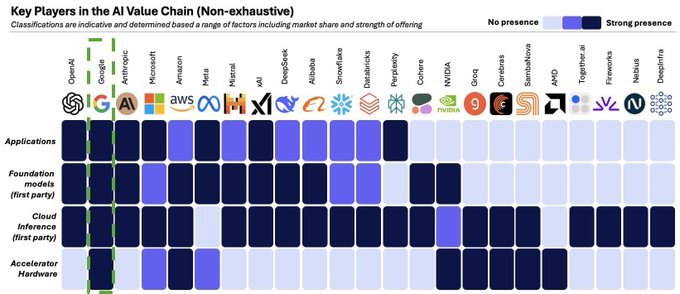

While dozens of startups are sprinting to deliver point solutions in knowledge retrieval, secure chatbots, and internal search, Google is bundling it all. And they’re doing it with deep integrations, massive infrastructure, and brand trust. I’d be surprised if they don’t capture a huge portion of the enterprise AI market over the next 12–18 months. If you’re building in this space, pay attention — Google’s not just in the race. They’re laying down the track.

TL;DR

WWDC 2025 showed Apple making thoughtful moves into local AI, finally cleaning up some old UX pain points, and tying the ecosystem together visually. Developers get more control, users get better privacy, and we all get a brand-new emoji blender we didn’t ask for. Here's a short video showing everything that was released.

🎬 Apple's F1 Movie

Kicking things off, Apple showed off its F1 original film. Honestly? It looks pretty sick. High production value, fast cars — what’s not to like?

🧠 Apple Intelligence: Local AI

Apple announced a few updates to “Apple Intelligence,” a suite of features powered primarily by on-device models. Key highlights include support for more languages, improved models, and expanded use cases across the OS. Third-party developers now get access to on-device LLMs — no cloud API costs, which is a big deal for performance and privacy.

🧼 A Major Redesign: Glassy, Unified, and Familiar

Apple rolled out its biggest design change since iOS 7 with a glassy “liquid” look across all platforms. It’s been getting a lot of hate, but it looks kinda cool. I think the bigger announcement is the unification of design across all Apple platforms.

📱 iOS Improvements

Lock Screen gets some mild 3D effects. Photos navigation is finally tolerable. Safari feels cleaner. CarPlay got a revamp, and “CarPlay Ultra” lets manufacturers build custom UI layouts—cool in theory, niche in practice.

Phone App:

New layout, call screening for unknown numbers, and Hold Assist that waits on hold for you. All welcome changes that make you wonder why they didn’t exist already.

Messages:

Native support for polls, Apple Cash integration, and spam filtering. But of course, the green bubble curse remains.

🎵 Music

AutoMix uses AI to blend tracks. Still no recommendations based on your library though — what’s the holdup?

🗺 Maps

Smarter routing, delay predictions, and “visited places” history. All genuinely helpful upgrades.

💳 Wallet

Now supports digital IDs in 9 states and introduces a TSA-accepted Digital Passport ID for domestic travel. Just don’t toss your real passport yet.

⌚ watchOS

Not much progress compared to Whoop or other fitness trackers. But “Workout Buddy” gives motivational nudges using your own data, which might actually be useful. Creepy, but useful.

📺 tvOS + Apple TV+

Minor UI changes. Content is strong, as usual. Profitability? Still anyone’s guess.

💻 macOS “Tahoe”

Gets the new design, introduces “Live Activities” from iPhone, and a smarter Spotlight that understands context. Developers get deeper Spotlight integration via the App Intents API.

🕶 visionOS

Still no AR glasses. Let’s be honest, that’s all we cared about.

🧑‍💻 iPadOS

New windowing system improves multitasking — great for the fraction of people who treat iPads like laptops.

🛠 Developer Tools

Icon Composer brings slick layered icon design. Xcode now supports natural language coding with OpenAI-powered models. More details promised in the Platforms State of the Union.

People are more likely to follow through on goals when they feel like they’ve already made progress — even if that progress isn’t real. This is called the Endowed Progress Effect, and it’s a powerful tool in product design. Give someone a punch card with two stamps already filled, and suddenly they’re more motivated to finish the rest. The same goes for digital onboarding flows that show early wins right away.

Notion nails this. When new users sign up, it asks what they’re trying to accomplish, then fills the workspace with templates tailored to that goal. It’s subtle, but effective. That small sense of momentum makes users feel like they’re already on their way, and that’s often enough to keep them coming back.

Two recent innovations are reshaping how large language models (LLMs) are trained, updated, and improved — without relying on constant human supervision. The first is Reinforcement Pre‑Training (RPT), which reimagines next-token prediction as a reasoning task rather than a rote autocomplete exercise. Instead of simply guessing the next word, the model “plans” and reasons through potential outputs, earning intrinsic rewards when it correctly predicts the next token. This reinforcement-based approach doesn’t require labeled data or human-generated rewards, making it highly scalable across the open web.

RPT isn’t just a clever training trick—it yields significant gains. By encouraging the model to reason internally before outputting a token, RPT improves generalization and reasoning ability. It scales consistently with increased compute, and acts as a better launchpad for future reinforcement learning and downstream tasks. In short, it helps models think before they speak—and that’s proving to be powerful.
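To make the reward idea concrete, here is a toy sketch of the scoring step only. It is not the RPT authors' code: the `Policy` interface, the function names, and the stand-in "model" are all hypothetical, and a real setup would use an LLM that writes a reasoning trace before committing to a token. What it shows is that the reward needs no human labels, because the text itself supplies the right answer.

```python
from typing import Callable, List, Tuple

# Hypothetical interface (an assumption for this sketch): a policy reads the
# context tokens and returns (reasoning_trace, predicted_next_token).
Policy = Callable[[List[str]], Tuple[str, str]]


def next_token_reward(predicted: str, actual: str) -> float:
    """Reward is 1.0 when the guess matches the token in the text, else 0.0."""
    return 1.0 if predicted == actual else 0.0


def collect_rewards(policy: Policy, tokens: List[str]) -> List[float]:
    """Score the policy at every next-token position of one piece of text."""
    rewards = []
    for i in range(1, len(tokens)):
        _trace, guess = policy(tokens[:i])  # the trace itself is not scored
        rewards.append(next_token_reward(guess, tokens[i]))
    return rewards


def repeat_last_policy(context: List[str]) -> Tuple[str, str]:
    """Toy stand-in for a model: it 'reasons' that the last token repeats."""
    return ("the previous token might repeat", context[-1])


tokens = "the cat sat sat on the mat".split()
rewards = collect_rewards(repeat_last_policy, tokens)
mean_reward = sum(rewards) / len(rewards)
print(rewards, round(mean_reward, 3))
```

In an actual training run, these per-position rewards would feed a reinforcement learning update of the model; that part is left out here.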

The second innovation, SEAL (Self-Adapting LLMs), takes things even further by allowing LLMs to modify themselves. Given a new input or task, the model generates a “self-edit”: an internal directive that might involve fine-tuning on new examples, adjusting its learning parameters, or modifying its internal weights. It then applies those edits and evaluates the outcome through a reinforcement loop, gradually learning how to improve itself autonomously over time.

What makes SEAL groundbreaking is that it turns the LLM into both the student and the teacher. Instead of relying on external developers to update or re-train it, the model proposes and applies its own updates. This opens the door to continuous, real-time learning across use cases like updating facts, improving generalization on low-data tasks, or adapting to new environments — all without starting from scratch.
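The propose, apply, evaluate cycle can be sketched in a few lines. This is a heavily simplified toy, not SEAL itself: the "model" is just a fact table standing in for weights, the candidate edits are handed in as a list instead of being generated by the model, and the reinforcement step is reduced to "keep an edit only if the score goes up." Every name below is hypothetical.

```python
from typing import Dict, List, Tuple

Model = Dict[str, str]      # toy stand-in for model weights: a fact table
SelfEdit = Tuple[str, str]  # hypothetical edit format: (question, new answer)


def evaluate(model: Model, eval_set: List[Tuple[str, str]]) -> float:
    """Fraction of evaluation questions the model answers correctly."""
    correct = sum(1 for question, answer in eval_set if model.get(question) == answer)
    return correct / len(eval_set)


def apply_edit(model: Model, edit: SelfEdit) -> Model:
    """Return an updated copy of the model; stands in for a fine-tuning step."""
    updated = dict(model)
    updated[edit[0]] = edit[1]
    return updated


def self_edit_loop(
    model: Model, candidate_edits: List[SelfEdit], eval_set: List[Tuple[str, str]]
) -> Tuple[Model, List[SelfEdit]]:
    """Try each proposed edit and keep it only if the evaluation score improves."""
    score = evaluate(model, eval_set)
    accepted = []
    for edit in candidate_edits:
        trial = apply_edit(model, edit)
        trial_score = evaluate(trial, eval_set)
        if trial_score > score:  # the improvement acts as the reward signal
            model, score = trial, trial_score
            accepted.append(edit)
    return model, accepted


model = {"capital of France": "Lyon"}
eval_set = [("capital of France", "Paris"), ("capital of Japan", "Tokyo")]
edits = [
    ("capital of France", "Paris"),
    ("capital of Japan", "Kyoto"),
    ("capital of Japan", "Tokyo"),
]
new_model, accepted = self_edit_loop(model, edits, eval_set)
print(accepted, evaluate(new_model, eval_set))
```

The wrong edit ("Kyoto") is discarded because it does not raise the score. In the real system, the accepted edits would also be used to train the model to propose better edits next time.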

Taken together, RPT and SEAL signal a shift toward more adaptive, self-improving AI systems. As impressive as current LLMs are, they’re still largely static once deployed. These breakthroughs bring us closer to models that learn on the fly, reflect on their own performance, and continuously evolve without waiting on engineers. That’s not just more powerful — it’s a different paradigm entirely.

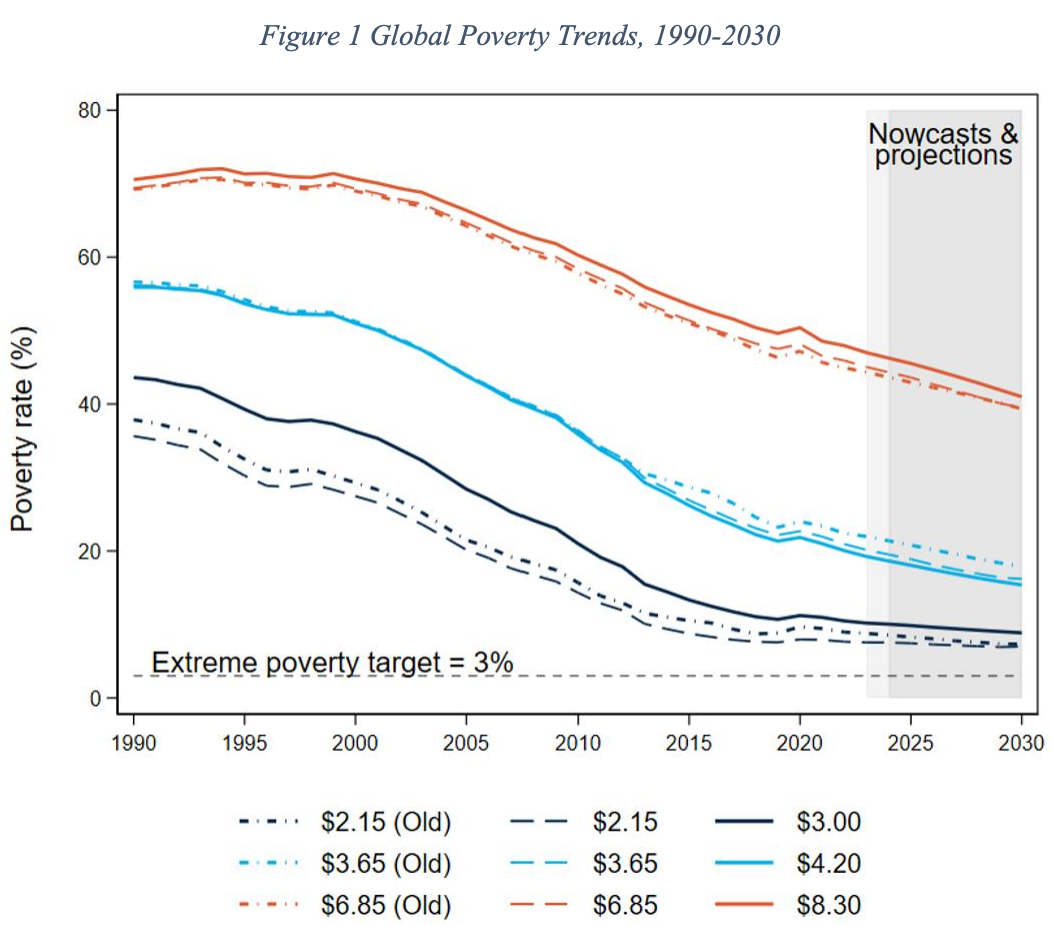

Global poverty is declining — even with the World Bank’s newly raised poverty benchmarks. India is driving much of this progress, now representing over one-third of all people worldwide who have escaped extreme poverty in the past ten years. Under the updated $3-per-day threshold, India’s extreme poverty rate has fallen from 27.1% in 2012 to just 5.3% in 2023 — meaning nearly 270 million people have moved out of extreme poverty in just over a decade.
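For anyone who wants to sanity-check those figures, here is a quick back-of-the-envelope sketch. The two poverty rates come from the paragraph above; the population figures are my own round-number assumptions (roughly 1.27 billion in 2012 and 1.43 billion in 2023), not numbers from the World Bank release, so treat the headcount as approximate.

```python
def people_in_poverty(rate_percent: float, population: float) -> float:
    """Convert a poverty rate in percent into a headcount."""
    return rate_percent / 100.0 * population


# Rates quoted in the text above.
RATE_2012 = 27.1
RATE_2023 = 5.3

# Assumed round-number populations for India (not from the source).
POP_2012 = 1.27e9
POP_2023 = 1.43e9

point_drop = RATE_2012 - RATE_2023       # drop in percentage points
relative_drop = point_drop / RATE_2012   # share of the 2012 rate eliminated
headcount_drop = (
    people_in_poverty(RATE_2012, POP_2012) - people_in_poverty(RATE_2023, POP_2023)
)

print(f"Rate drop: {point_drop:.1f} percentage points")
print(f"Relative drop: {relative_drop:.1%}")
print(f"Fewer people in extreme poverty: about {headcount_drop / 1e6:.0f} million")
```

Under these assumptions the rate falls by 21.8 percentage points, about 80% in relative terms, and the headcount falls by roughly 268 million, which lines up with the "nearly 270 million" figure.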

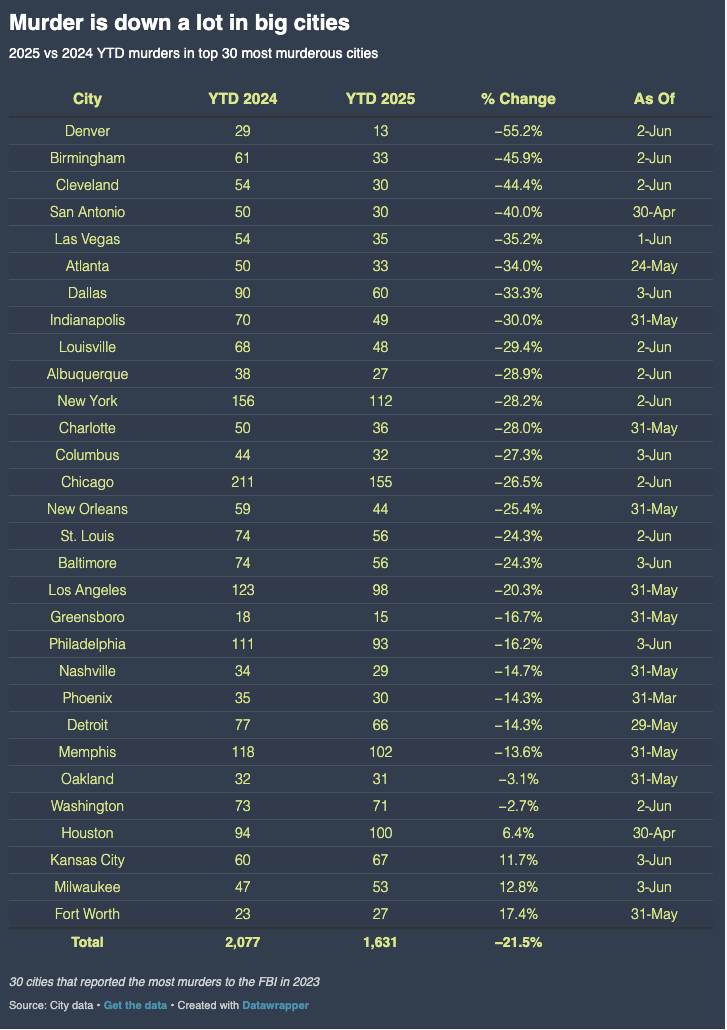

Violent crime in the United States has dropped dramatically, so why don’t Americans feel safer? Across the country, city after city is seeing steep declines, and by the end of 2025, the national murder rate may hit its lowest point since recordkeeping began in 1960. If those were the "good old days," then by the numbers, so are these.

In a quiet corner of the medical world, a technology called Focused Ultrasound Stimulation (FUS) is redefining how we treat disease — without needles, pills, or surgery. FUS works by directing low-intensity sound waves to stimulate nerve cells, triggering natural biological responses. Early studies have shown it can reduce inflammation by targeting the spleen, curb appetite and lower blood sugar in animal models by activating nerve pathways from the liver, and even reduce lung pressure in cardiopulmonary disease. It’s noninvasive, already uses FDA-cleared hardware, and has a roadmap toward home-based wearables for daily treatment. Major players like GE HealthCare and Novo Nordisk are already placing bets with upcoming clinical trials.

What makes FUS especially exciting is that it flips the treatment paradigm: instead of chemically disrupting pathways with drugs, it taps into the body’s own communication network — the nervous system — to restore balance. Whether managing metabolic disorders like obesity and diabetes or chronic inflammation, FUS hints at a future where bioelectronic medicine replaces long-term prescriptions with targeted, real-time neural modulation. For millions living with chronic illness, this could change everything.

Meanwhile, progress in the fight against cancer continues to impress. Since the early 1990s, U.S. cancer death rates have dropped by nearly one-third, sparing over four million lives. Childhood leukemia, once a near-certain death sentence, now has a survival rate above 90%. The overall five-year cancer survival rate has climbed from about 49% in the 1970s to nearly 69% today. These numbers reflect not just better treatments, but smarter policy: reduced smoking and earlier detection thanks to advanced screening and diagnostics.

On the treatment side, advances like CAR-T immunotherapy have delivered astonishing results—offering long-lasting remission for even late-stage patients. New drugs and monoclonal antibodies have also extended survival in difficult blood cancers. Yes, there’s still work to do — emerging trends like rising GI cancers in younger people remain concerning — but the trajectory is clear: we’re living through a time when once-impossible medical outcomes are becoming routine. Between bioelectronic therapy and increasingly personalized cancer treatments, the future of health looks less like a hospital ward — and more like hope.

Enjoying The Hillsberg Report? Share it with friends who might find it valuable!
